How to test drive view in iOS

Issue #303

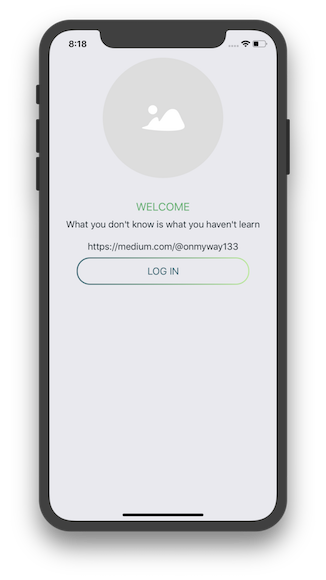

Instead of setting up a custom framework and Playground, we can just display that specific view as the root view controller.

    window.rootViewController = makeTestPlayground()

Issue #302

    final class CarouselLayout: UICollectionViewFlowLayout {

How to use

    let layout = CarouselLayout()

We can inset the cell content and use `let scale: CGFloat = 1.0` to avoid scaling down the center cell.

    import UIKit

Issue #301

Make it more composable by using a UIViewController subclass and a ThroughView to pass hit events to the underlying views.

    class PanViewController: UIViewController {

Issue #300

Good to know

Code

Issue #297

    let button = NSButton()

To give it the native rounded rect look

    button.imageScaling = .scaleProportionallyDown

    import AppKit

Issue #293

Answer https://stackoverflow.com/a/55119208/1418457

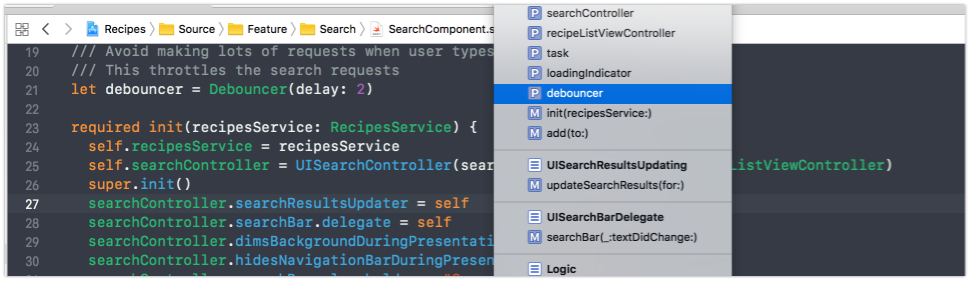

This is useful for throttling TextField change events. You can make a Debouncer class using Timer.

    import 'package:flutter/foundation.dart';

Declare and trigger

    final _debouncer = Debouncer(milliseconds: 500);
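As a rough illustration of the idea, here is a minimal debouncer sketched in Python rather than Dart. The `Debouncer` class and its `run` method are hypothetical names chosen to mirror the snippet above, and `threading.Timer` stands in for Dart's `Timer`; treat it as a sketch of the technique, not the Flutter implementation.

```python
import threading
import time


class Debouncer:
    """Run only the last action submitted within a quiet period."""

    def __init__(self, milliseconds):
        self._delay = milliseconds / 1000.0
        self._timer = None
        self._lock = threading.Lock()

    def run(self, action):
        with self._lock:
            # Cancel the pending action, if any, and restart the countdown.
            if self._timer is not None:
                self._timer.cancel()
            self._timer = threading.Timer(self._delay, action)
            self._timer.daemon = True
            self._timer.start()


# Simulate three quick text changes; only the last one should fire.
calls = []
debouncer = Debouncer(milliseconds=50)
for text in ("h", "he", "hel"):
    debouncer.run(lambda t=text: calls.append(t))

time.sleep(0.3)  # wait well past the 50 ms quiet period
print(calls)
```

Each new call cancels the previous timer, so the action only runs once the input has been quiet for the configured delay.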

Issue #288

Use this property to specify a custom selection image. Your image is rendered on top of the tab bar but behind the contents of the tab bar item itself. The default value of this property is nil, which causes the tab bar to apply a default highlight to the selected item

tabBar

    tabBar.isHidden = true

    import UIKit

    func tabBar(_ tabBar: UITabBar, didSelect item: UITabBarItem)

tabBar.subviews contains 1 private UITabBarBackground and many private UITabBarButton

    import UIKit

In iOS 13, we need to use viewDidAppear

    override func viewDidAppear(_ animated: Bool) {

Issue #287

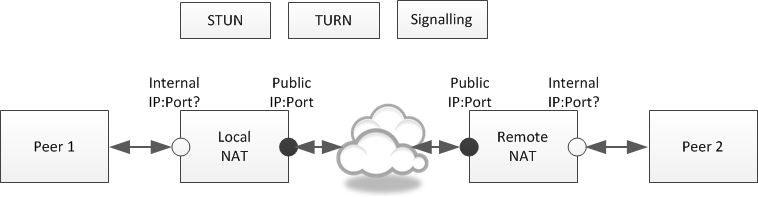

When digging into the world of TCP, I ran into many terms I didn’t know and many misconceptions. But with the help of those geeks on SO, the problems were demystified. Now it’s time to sum up and share with others :D

There are 5 elements that identify a connection. Together they are called the 5-tuple:

Protocol. This is often omitted as it is understood that we are talking about TCP, which leaves 4.

Source IP address.

Source port.

Target IP address.

Target port.
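To make the 5-tuple concrete, here is a small sketch that opens two connections from one client to the same server port on the loopback interface and prints each connection's tuple. The helper name `five_tuple` is made up for illustration, and the server binds to port 0 so the OS picks a free port.

```python
import socket

# Listen on an OS-chosen port on the loopback interface.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen()
server_ip, server_port = server.getsockname()

# Two connections from the same client to the same server port.
clients = [socket.create_connection((server_ip, server_port)) for _ in range(2)]
accepted = [server.accept()[0] for _ in clients]


def five_tuple(sock):
    """Return (protocol, source IP, source port, target IP, target port)."""
    src_ip, src_port = sock.getsockname()
    dst_ip, dst_port = sock.getpeername()
    return ("tcp", src_ip, src_port, dst_ip, dst_port)


tuples = [five_tuple(c) for c in clients]
for t in tuples:
    print(t)

for s in clients + accepted + [server]:
    s.close()
```

The two printed tuples should differ only in the source port, which the client OS assigns.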

No. This is a common misconception. TCP listens on one port and talks on that same port. If a client makes multiple TCP connections to the server, it’s the client OS that must generate different random source ports, so that the server can see them as unique connections.

A single listening port can accept more than one connection simultaneously. There is a ‘64K’ limit that is often cited, but that is per client per server port, and needs clarifying.

If a client has many connections to the same port on the same destination, then three of those fields will be the same — only source_port varies to differentiate the different connections. Ports are 16-bit numbers, therefore the maximum number of connections any given client can have to any given host port is 64K.

However, multiple clients can each have up to 64K connections to some server’s port, and if the server has multiple ports or either is multi-homed then you can multiply that further
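The arithmetic behind the ‘64K’ figure can be sketched as follows. This is only an upper bound under simplifying assumptions: port 0 is reserved and operating systems usually hand out source ports from a smaller ephemeral range, and the function name `max_connections` is made up for this example.

```python
PORT_BITS = 16
PORTS = 2 ** PORT_BITS  # 65536 possible values for a 16-bit port number


def max_connections(client_addresses=1, server_addresses=1, server_ports=1):
    """Theoretical upper bound on distinct 4-tuples for the given endpoints."""
    return PORTS * client_addresses * server_addresses * server_ports


# One client talking to one server port: only the source port can vary.
print(max_connections())

# A thousand distinct clients talking to the same server port.
print(max_connections(client_addresses=1000))
```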

So the real limit is file descriptors. Each individual socket connection is given a file descriptor, so the limit is really the number of file descriptors that the system has been configured to allow and has the resources to handle. The maximum limit is typically upwards of 300K, but is configurable, e.g. with sysctl.

When a client wants to make a TCP connection with a server, the request is queued in the server’s backlog queue. This backlog queue is small (about 5–10 entries), and its size limits the number of concurrent connection requests. However, the server quickly picks connection requests from that queue and accepts them. A connection request that has been accepted is called an open connection. The number of concurrent open connections is limited by the server’s resources allocated for file descriptors.
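As a quick way to see the per-process side of this limit, the sketch below reads the open file descriptor limit with Python's `resource` module. This assumes a Unix-like system (the module is not available on Windows), and it shows the per-process limit only, which is separate from any system-wide setting.

```python
import resource

# RLIMIT_NOFILE is the maximum number of open file descriptors for this
# process. Every socket, including each accepted connection, uses one.
soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
print(f"open file descriptors: soft limit={soft}, hard limit={hard}")
```

The soft limit is the one currently enforced; a process may raise it up to the hard limit.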

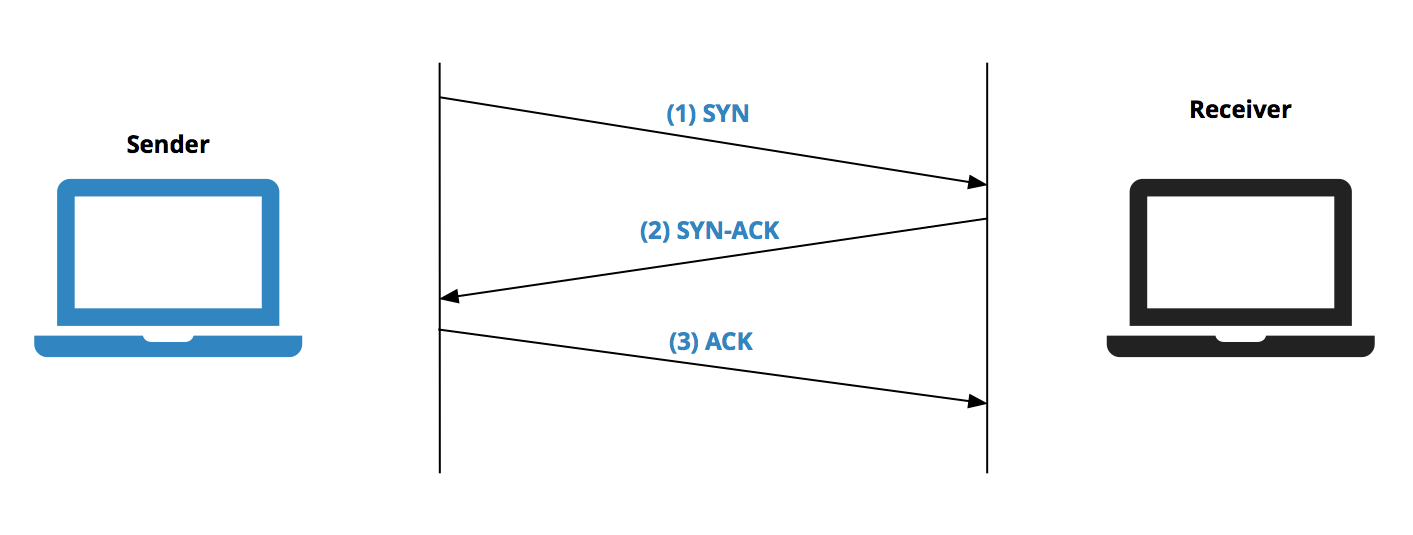

It’s normal. When the server receives a connection request from a client (by receiving SYN), it responds with SYN, ACK, and the TCP handshake succeeds. But this request is still in the backlog queue.
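This behaviour is easy to observe on the loopback interface. In the sketch below, the client's connect call returns successfully even though the server has not called accept yet; the connection simply waits in the backlog. The backlog value of 5 is just an example hint to the OS.

```python
import socket

server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(5)  # backlog hint for pending connections
server.settimeout(5)

# The kernel completes the handshake on behalf of the listening socket,
# so this returns before the server application calls accept().
client = socket.create_connection(server.getsockname(), timeout=5)
client_address = client.getsockname()
print("connected before accept:", client_address)

# Only now does the application take the connection out of the queue.
conn, peer = server.accept()
print("accepted connection from:", peer)

for s in (conn, client, server):
    s.close()
```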

But if the application process exceeds the limit of file descriptors it can use, then when the server calls accept, it finds that there are no file descriptors available to allocate for the socket, so the accept call fails and the TCP connection is closed by sending a FIN to the other side.

Sockets come in two primary flavors. An active socket is connected to a remote active socket via an open data connection… A passive socket is not connected, but rather awaits an incoming connection, which will spawn a new active socket once a connection is established

Each port can have a single passive socket bound to it, awaiting incoming connections, and multiple active sockets, each corresponding to an open connection on the port. It’s as if the factory worker is waiting for new messages to arrive (he represents the passive socket), and when one message arrives from a new sender, he initiates a correspondence (a connection) with them by delegating someone else (an active socket) to actually read the packet and respond back to the sender if necessary. This permits the factory worker to be free to receive new packets.
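The passive and active roles map directly onto the sockets API. In this sketch, one listening (passive) socket hands out a new active socket per accepted connection, and all of them report the same local port. The variable names are illustrative only.

```python
import socket

# The passive socket: bound to a port, only waits for connections.
passive = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
passive.bind(("127.0.0.1", 0))
passive.listen()
port = passive.getsockname()[1]

# Three clients connect; each accept() spawns a new active socket.
clients = [socket.create_connection(("127.0.0.1", port)) for _ in range(3)]
active = [passive.accept()[0] for _ in clients]

local_ports = {s.getsockname()[1] for s in active}
remote_ends = {s.getpeername() for s in active}
print("listening port:", port)
print("local ports of active sockets:", local_ports)
print("distinct remote endpoints:", len(remote_ends))

for s in clients + active + [passive]:
    s.close()
```

The active sockets share the listening port on the server side and are told apart by their remote endpoints.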

Here are some more links to help you explore further

Issue #285

settings.gradle.kts

    include(":app")

build.gradle.kts

    import org.gradle.kotlin.dsl.apply

tools/quality.gradle.kts

    plugins {

Issue #284

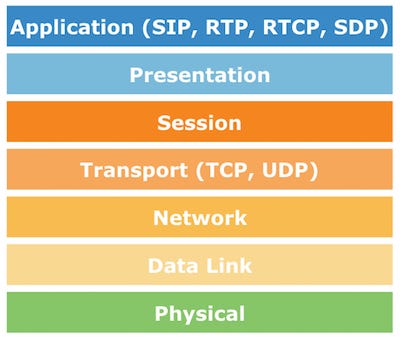

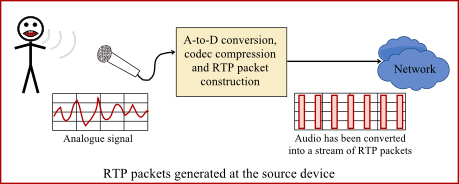

Before working with Windows Phone and iOS, my life involved researching VoIP. That was to build a C library for voice over IP functionality for a very popular app, and that was how I got started in open source.

The libraries I was working with were Linphone and pjsip. I learned a lot about UDP and the SIP protocol, how to build a C library for consumption in iOS, Android and Windows Phone, how challenging it is to support C++ components and thread pools in Windows Phone 8, how to tweak the entropy functionality in OpenSSL to make it compile in Windows Phone 8, and how hard it was to debug C code with the Android NDK. It was a time when I needed to open Visual Studio, Xcode and Eclipse IDE at the same time, joined mailing lists and followed gmane. Lots of good memories.

Today I find that those bookmarks I made are still available on Safari, so I think I should share them here. I had to remove many articles because they are outdated or not available anymore. These are the resources that I actually read and used, not some random links. Hopefully you can find something useful.

This post focuses more on resources for pjsip on the client and how to talk directly, with or without a proxy server.

Here are some of the articles and open source projects I made regarding VoIP. I hope you find them useful.

rtpproxy: I forked it from http://www.rtpproxy.org/ and changed the code to support IP handover. It means the proxy can handle IP changes from 3G or 4G to Wifi, and it reduces the chances of attacks

Voice over Internet Protocol (also voice over IP, VoIP or IP telephony) is a methodology and group of technologies for the delivery of voice communications and multimedia sessions over Internet Protocol (IP) networks, such as the Internet

Voice over IP Overview: introduction to VoIP concepts, H.323 and SIP protocol

Voice over Internet Protocol: the Wikipedia article contains the foundational knowledge

Open Source VOIP Software: this is a must read. Lots of foundation articles about client and server functionalities, SIP, TURN, RTP, and many open source frameworks

VOIP call bandwidth: a key factor in a VoIP application is bandwidth consumption, so it’s good not to go far beyond the accepted limit

Routers SIP ALG: this is the most annoying, because there is NAT and many types of NAT, also router with SIP ALG

SIP SIMPLE Client SDK: introduction to SIP core library, but it gives an overview of how

The Session Initiation Protocol (SIP) is a communications protocol for signaling and controlling multimedia communication sessions in applications of Internet telephony for voice and video calls, in private IP telephone systems, as well as in instant messaging over Internet Protocol (IP) networks.

RFC 3261: to understand SIP, we need to read its standard. I don’t know how many times I read this RFC.

OpenSIPS: OpenSIPS is a multi-functional, multi-purpose signaling SIP server

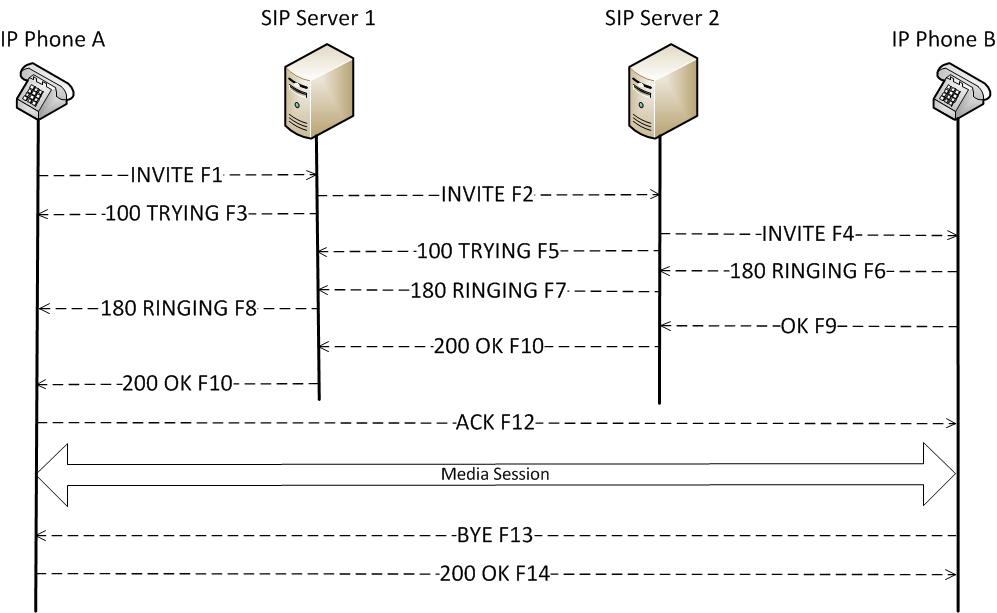

SIP protocol structure through an example: this is a must read, it shows very basic but necessary knowledge

Relation among Call, Dialog, Transaction & Message: basic concepts about call, dialog, transaction and message

microSIP: Open source portable SIP softphone for Windows based on PJSIP stack. I used to use this to test my pjsip tweaked library before building it for mobile

What is SIP: introduction to SIP written by the author of CSipSimple

SIP by Wireshark: introduction to SIP written by Wireshark. I used Wireshark a lot to intercept and debug SIP sessions

Solving the Firewall/NAT Traversal Issue of SIP: this shows how NAT can be a problem to SIP applications and how NAT traversal works

SipML5: SIP client written in Javascript

SIP Retransmissions: what and how to handle retransmission

draft-ietf-sipping-dialogusage-06: this is a draft about Multiple Dialog Usages in the Session Initiation Protocol

Creating and sending INVITE and CANCEL SIP text messages: SIP also supports sending text messages, not just audio and video packets. This is good for chat applications

Configuring NAT traversal using Kamailio 3.1 and the Rtpproxy server: I don’t know how many times I had read this post

How to set up and use SIP Server on Windows: I used this to test a working SIP server on Windows

OpenSIPS/Kamailio serving far end nat traversal: discussion about how Kamailio deals with NAT traversal

NAT Traversal Module: how NAT traversal works in Kamailio as a module

RTP, SIP clients and servers need to conform to some predefined protocols to meet the standards and to be able to talk with each other. You need to read RFCs a lot, and you also need to read some drafts.

NAT solves the shortage of IP addresses, but it causes lots of problems for SIP applications, and for me as well 😂

Network address translation: Network address translation (NAT) is a method of remapping one IP address space into another by modifying network address information in the IP header of packets while they are in transit across a traffic routing device

Configuring Port Address Translation (PAT): how to configure port forwarding

Types Of NAT Explained (Port Restricted NAT, etc): This is a must read. I didn’t expect there to be so many kinds of NAT in real life, or that each type affects SIP applications in its own way

One Way Audio SIP Fix: sometimes we get the problem that only 1 person can speak, this talks about why

NAT traversal for the SIP protocol: explains RTP, SIP and NAT

SIP NAT Traversal: This is a must read. How to make SIP work under NAT

NAT and Firewall Traversal with STUN / TURN / ICE: pjsip and Kamailio actually support the STUN, TURN and ICE protocols. Learn about these concepts and how to make them work

Introduction to Network Address Translation (NAT) and NAT Traversal

Learn how TCP helps SIP initiate a session and how to switch to TCP mode for sending packets

Transmission Control Protocol: The Transmission Control Protocol (TCP) is one of the main protocols of the Internet protocol suite. It originated in the initial network implementation in which it complemented the Internet Protocol (IP)

Datagram socket: A datagram socket is a type of network socket which provides a connectionless point for sending or receiving data packets. Each packet sent or received on a datagram socket is individually addressed and routed

TCP RST packet details: learn the importance of the RST bit

RST packet sent from application when TCP connection not getting closed properly

Why will a TCP Server send a FIN immediately after accepting a connection?

Where do resets come from? (No, the stork does not bring them.): learn about the three-way handshake in TCP connections

Sockets and Ports: Do not confuse between socket and port

TCP Wake-Up: Reducing Keep-Alive Traffic in Mobile IPv4 and IPsec NAT Traversal

Learn about Transport Layer Security and SSL, especially OpenSSL, for how to secure a SIP connection. The interesting thing is to read the code in pjsip to see how it uses OpenSSL to encrypt messages

Configuring TLS support in Kamailio 3.1 — Howto: learn how to enable TLS mode in Kamailio

SIP TLS: how to configure TLS in Asterisk

Learn about Interactive Connectivity Establishment, another way to work around NAT

Learn about Session Traversal Utilities for NAT and Traversal Using Relays around NAT, another way to work around NAT

STUN: STUN (Simple Traversal of UDP through NATs (Network Address Translation)) is a protocol for assisting devices behind a NAT firewall or router with their packet routing. RFC 5389 redefines the term STUN as ‘Session Traversal Utilities for NAT’.

Learn about Application Layer Gateway and how it affects your SIP application. This component knows how to handle and modify your SIP messages, so it might introduce unexpected behaviours.

What is SIP ALG and why does Gradwell recommend that I turn it off?

Understanding SIP with Network Address Translation (NAT): This is a must read, a very thorough document

Learn about voice quality, bandwidth and fixing delay in audio

RTP, Jitter and audio quality in VoIP: learn about the importance of jitter and RTP

An Adaptive Codec Switching Scheme for SIP-based VoIP: explain codec switching during call in SIP based VoIP

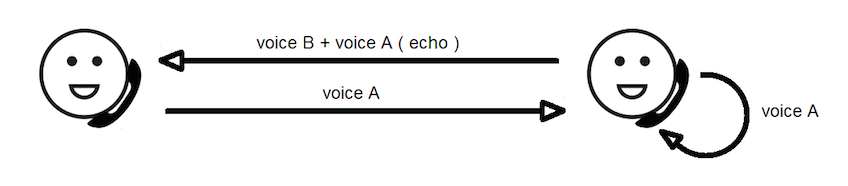

This is a very common problem in VoIP: sometimes we hear our own voice as well as the other party’s. Learn how echo is produced, and how to do echo cancellation effectively

Echo Cancellation: How to use Speex to cancel echo

Echo and Sidetone: A telephone is a duplex device, meaning it is both transmitting and receiving on the same pair of wires. The phone network must ensure that not too much of the caller’s voice is fed back into his or her receiver

How software echo canceller works?: I asked about how we use software to do echo cancellation

Learn how to generate dual tones for signaling in telecommunication
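A dual tone is just the sum of two sine waves. The sketch below generates samples for a DTMF keypad tone using the standard DTMF frequency pairs; it is a plain Python illustration, not pjsip's tone generator, and the function name `dual_tone` and its default parameters are assumptions made for this example.

```python
import math

# Standard DTMF keypad: each key maps to a (low, high) frequency pair in Hz.
DTMF = {
    "1": (697, 1209), "2": (697, 1336), "3": (697, 1477),
    "4": (770, 1209), "5": (770, 1336), "6": (770, 1477),
    "7": (852, 1209), "8": (852, 1336), "9": (852, 1477),
    "*": (941, 1209), "0": (941, 1336), "#": (941, 1477),
}


def dual_tone(key, duration=0.1, sample_rate=8000, amplitude=0.5):
    """Return float samples of the two summed sine waves for a keypad key."""
    low, high = DTMF[key]
    count = round(duration * sample_rate)
    samples = []
    for i in range(count):
        t = i / sample_rate
        mixed = math.sin(2 * math.pi * low * t) + math.sin(2 * math.pi * high * t)
        # Halve the sum so the result stays within [-amplitude, amplitude].
        samples.append(amplitude * mixed / 2)
    return samples


samples = dual_tone("5")
peak = max(abs(s) for s in samples)
print(len(samples), peak)
```

To play or send the tone, the float samples would still need converting to the sample format the audio pipeline expects, such as 16-bit PCM.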

PJSIP is a free and open source multimedia communication library written in C language implementing standard based protocols such as SIP, SDP, RTP, STUN, TURN, and ICE. It combines signaling protocol (SIP) with rich multimedia framework and NAT traversal functionality into high level API that is portable and suitable for almost any type of systems ranging from desktops, embedded systems, to mobile handsets.

PJSUA API — High Level Softphone API: high level usage of pjsip

Stateful Operations: common functions to send request statefully

Message Creation and Stateless Operations: functions related to send and receive messages

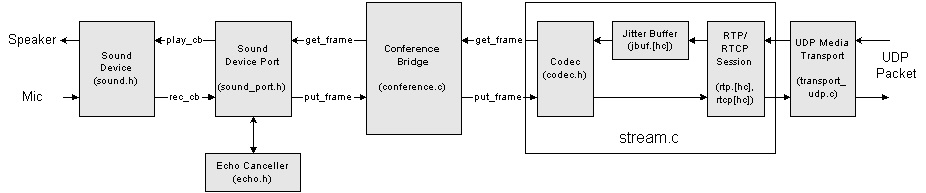

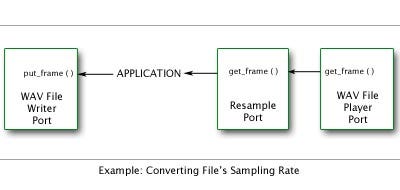

Understanding Media Flow: this is a must read. The media layer is so important, it controls sound, codec and conference bridge.

Getting Started: Building and Using PJSIP and PJMEDIA: This article describes how to download, customize, build, and use the open source PJSIP and PJMEDIA SIP and media stack

Codec Framework: pjsip supports multiple codec

Adaptive jitter buffer: this takes some time to understand, but it plays an important part in making pjsip work properly regarding buffer handling

PJSUA-API Accounts Management: how to register account in pjsua

Building Dynamic Link Libraries (DLL/DSO): how to build pjsip as a dynamic library

Compile time configuration: lots of configuration we can apply to pjsip

Fast Memory Pool: pjsip has its own memory pool. It’s very interesting to look at the source code and learn something new

Using SIP TCP Transport: How to enable TCP mode in SIP and to initiate SIP session

Monochannel and multichannel audio frame converter: interesting read about mono and multi channel

IOQueue: I/O Event Dispatching with Proactor Pattern: the code for this is very interesting and plays a fundamental role in how pjsip handles events

DNS Asynchronous/Caching Resolution Engine: how pjsip handles DNS resolution by itself

Secure socket I/O: the code for this is important if you want to learn how to use SSL under the hood

Multi-frequency tone generator: I learned a lot about how pjsip uses sine waves to generate tones

SIP SRV Server Resolution (RFC 3263 — Locating SIP Servers): learn the mechanism for how pjsip finds a particular SIP server

Exception Handling: how to do Try Catch in C

Mutex Locks Order in PJSUA-LIB: how the order of multiple locks at each layer helps ensure correctness and avoid deadlocks. I had lots of nightmares debugging deadlocks with pjsip 😱

pjsip uses Thread Local Storage, which introduces very cool behaviors

Threads question: how pjlib handles thread

Using Thread Local Storage: how to use TlsAlloc and TlsFree in Windows

Example: Thread local storage in a Pthread program: how Pthread works

Thread Local Storage: learn about pj_thread

How to work with sample rate of the media stream

Resample Port: how to perform resampling in pjmedia

Resampling Algorithm: code to perform resampling

Samples: Using Resample Port: very straightforward example to change sample rate of the media stream

How to Record Audio with pjsua: how to use pjsua to record audio.

Memory/Buffer-based Capture Port: believe me, you will jump into pjmedia_mem_capture_create a lot

File Writer (Recorder): record audio to .wav file

AMR Audio Encoding: understand AMR encoding

Audio Device API: how pjsip detects and uses audio devices

Sound Device Port: Media Port Connection Abstraction to the Sound Device

Audio Manipulation Algorithms: lots of cool algorithms written in C for audio manipulation. The hardest and most important one is probably Adaptive jitter buffer

bad quality on iphone 2G with os 3.0: No one would use iPhone 2G now, but it’s good to be aware of older phones

getting Underflow, buf_cnt=0, will generate 1 frame continuessly: how to handle underflow in pjmedia

Measuring Sound Latency: This article describes how to measure both sound device latency and overall (end-to-end) latency of pjsua

Master/sound: how master sound works and how to deal with no sound on the mic input port

I learned a lot about video capture, ffmpeg and color spaces, especially YUV

siphon — VIdeoSupport.wiki: How siphon deals with video before pjsip 2.0

Video Device API: PJMEDIA Video Device API is a cross-platform video API appropriate for use with VoIP applications and many other types of video streaming applications.

PJSUA-API Video: Uses video APIs in pjsua with pjsip 2.1.0

PJSIP Video User’s Guide: all you need to know about video support in pjsip

Video streams: I can never forget pjmedia_vid_stream_create

Video source duplicator: duplicate video data in the stream.

AVI File Player: Video and audio playback from AVI file

PJSIP Version 2.0 Release Notes: starting with 2.0, pjsip supports video. Good to read

FFmpeg-iOS-build-script: details how to build ffmpeg for iOS

There are many SIP clients for mobile and desktop: microSIP, Jitsi, Linphone, Doubango, … They all strictly follow the SIP standard and may have their own SIP core; for example, microSIP uses pjsip, Linphone uses liblinphone, …

Among them, I learned a lot from the Android client, CSipSimple, which offers a very nice interface and has good functionality. Unfortunately Google Code was closed, so I don’t know if the author plans to continue development on GitHub.

I also participated a lot in the Google forums for users and devs. Thanks to Regis, I learned a lot about open source, and that made me interested in it.

You can read What is a branded version

I don’t make any money from csipsimple at all. It’s a pure opensource and free as in speech project.

I develop it on my free time and just so that it benefit users.

That’s the reason why the project is released under GPL license terms. I advise you to read carefully the license (you’ll learn a lot of things on the spirit of the license and the project) : http://www.gnu.org/licenses/gpl.html

To sum up, the spirit of the GPL is that users should be always allowed to see the source code of the software they use, to use it the way they want and to redistribute it.

Because of NAT, or in case users want to talk via a proxy, an RTP proxy is needed. RTPProxy follows the standard and works well with Kamailio

multiple audio devices, multiple calls, conferencing, recording and mix all of the above

Conference bridge should transmit silence frame when level is zero

Add user defined NAT hole-punching and keep-alive mechanism to media stream

An IP change during a call can cause problems, such as when the user goes from Wifi to 4G

Learn about the Real-time Transport Control Protocol (RTCP) and how it works with RTP

To reduce payload size, we need to encode and decode the audio and video packets. We usually use Speex and Opus. Also, it’s good to understand the .wav format

Windows Phone 8 introduces C++ components, changes in threading, and VoIP and audio background modes. To support this, I needed to find another thread pool component and tweak OpenSSL a bit to make it compile on Windows Phone 8. I lost the source code, so I can’t upload it to GitHub 😢. Also, many links broke because Nokia is not around any more

Porting to New CPU Architecture: pjlib is the foundation of pjsip. Learn how to port it to another platform

How to implement audio streaming for VoIP calls for Windows Phone 8

Firstly, learn how to compile and use OpenSSL, how to call it from pjsip, and how to make it compile in Visual Studio for Windows Phone 8. I also learned the importance of Winsock and how to port a library. I struggled a lot with porting OpenSSL to Windows RT, then to Windows Phone 8

A lot of links were broken 😢 so I can’t paste them all here.

Since pjsip, rtpproxy and kamailio are all C and C++ code, I needed to have a good understanding of them, especially pointers and memory handling. We also needed to learn about compile flags for debug and release builds, how to use Make, and how to make static and dynamic libraries.

comp.lang.c Frequently Asked Questions: there are lots of things about C we haven’t known about

Bit Twiddling Hacks: how to apply clever hacks with bit operators. Really really good reading here

Better types in C++11 — nullptr, enum classes (strongly typed enumerations) and cstdint

Issue #283

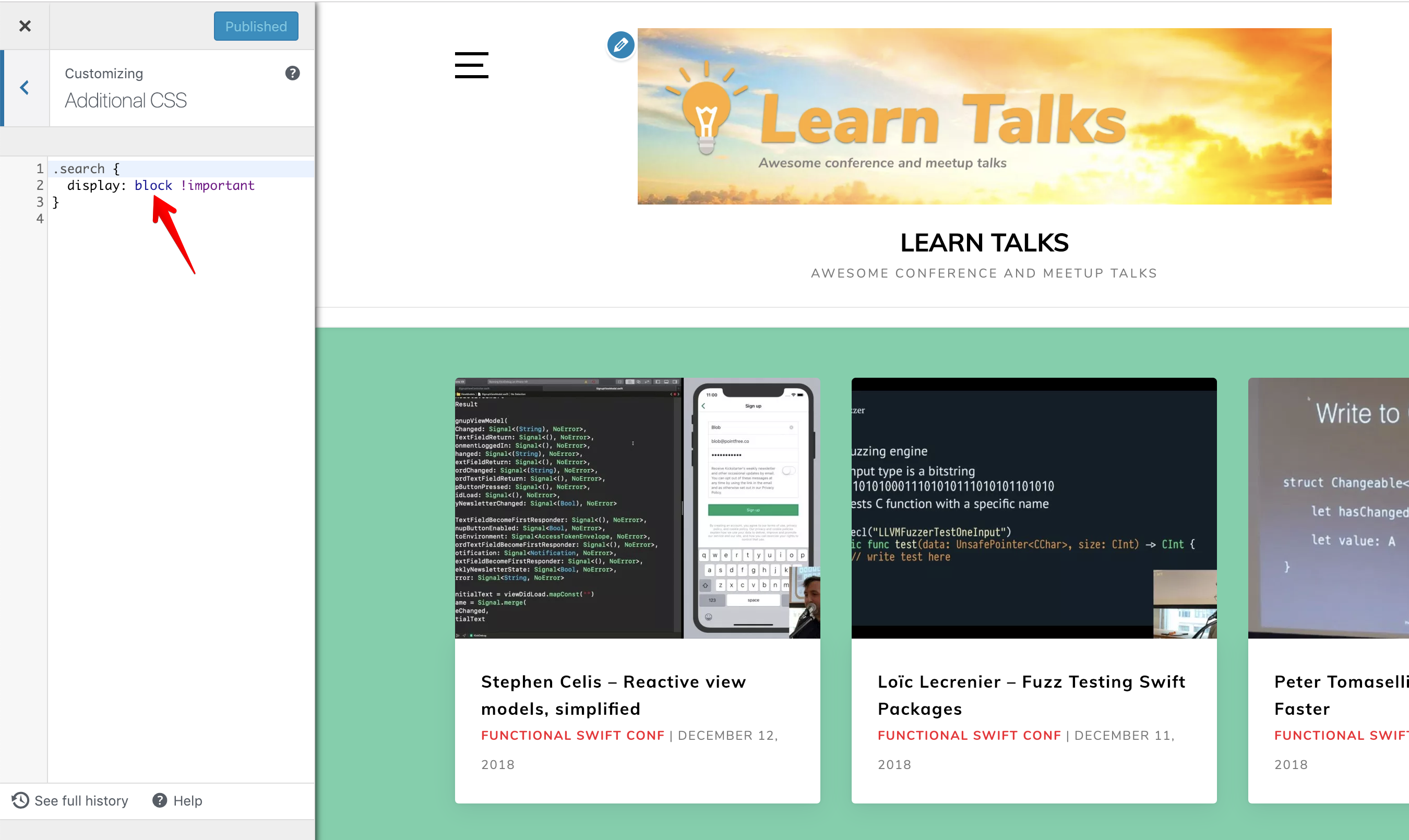

Original post https://medium.com/fantageek/dealing-with-css-responsiveness-in-wordpress-5ad24b088b8b

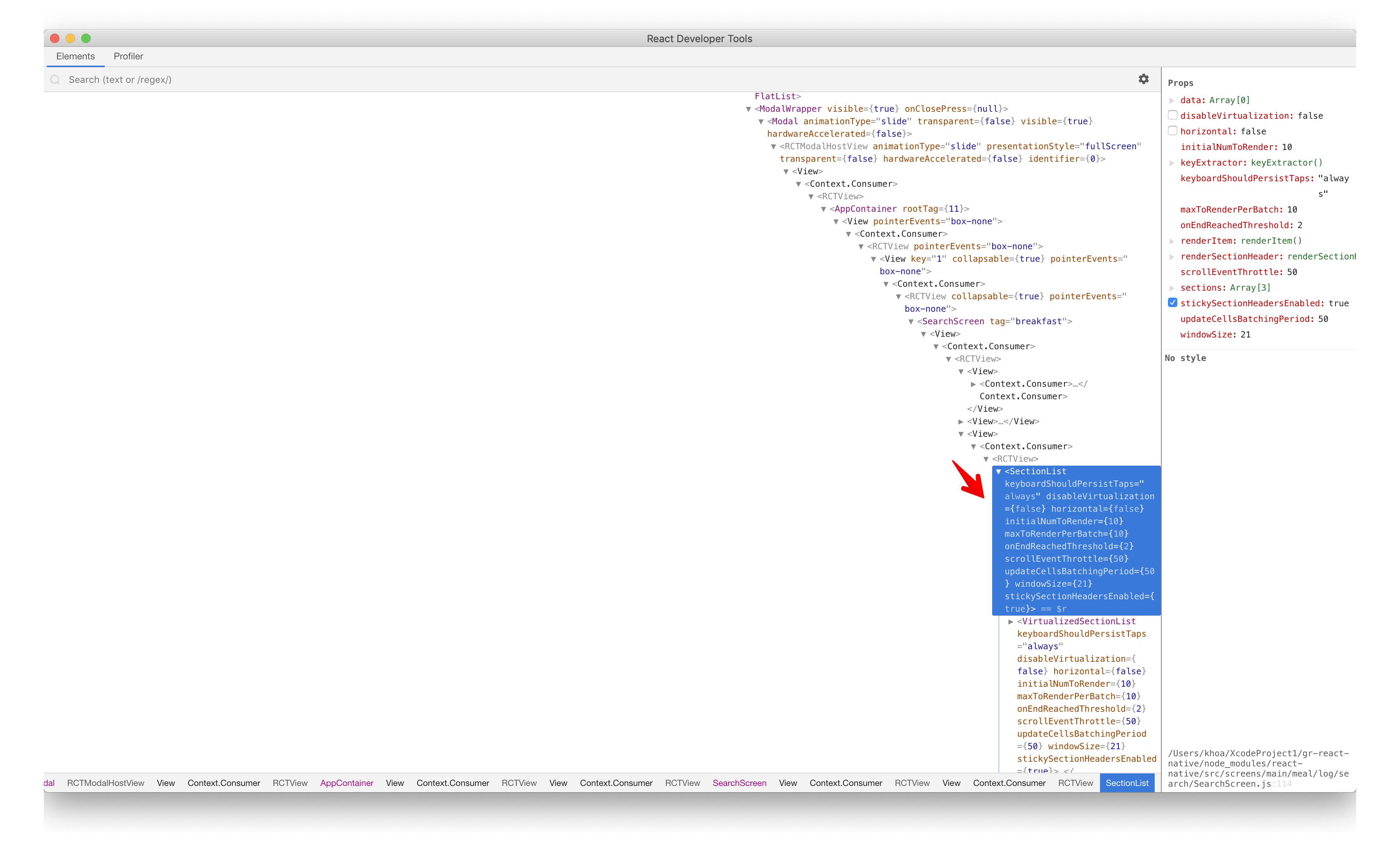

During the alpha test of LearnTalks, some of my friends reported that the screen was completely blank on the search page, and that this happened on mobile only. This article describes how I identified the problem and found a workaround. It may not be the proper solution, but at least the screen does not appear blank anymore.

As someone who likes to keep up with tech via watching conference videos, I thought it might be a good idea to collect all of these to better search and explore later. So I built a web app with React and Firebase, it is a work in progress for now. Due to time constraints, I decided to go first with Wordpress to quickly play with the idea. So there is LearnTalks.

The theme Marinate that I used didn’t render the search page correctly. So I headed over to Chrome Dev Tools for Responsive Viewport Mode and Safari for Web Inspector.

The tests showed that the problem only happened at certain screen resolutions. This must be due to CSS @media queries, which display different layouts for different screen sizes. And somehow they didn’t work for some sizes.

    @media screen and (max-width: 800px) {
      #site-header {
        display: none;
      }
    }

The @media rule is used in media queries to apply different styles for different media types/devices.

Media queries can be used to check many things, such as:

width and height of the viewport

width and height of the device

orientation (is the tablet/phone in landscape or portrait mode?)

resolution

Using media queries is a popular technique for delivering a tailored style sheet (responsive web design) to desktops, laptops, tablets, and mobile phones.

So I go to Wordpress Dashboard -> Appearance -> Editor to examine CSS files. Unsurprisingly, there are a bunch of media queries

    @media (min-width: 600px) and (max-width: 767px) {
      .main-navigation {
        padding: 0;
      }
      .site-header .pushmenu {
        margin-top: 0px;
      }
      .social-networks li a {
        line-height: 2.1em !important;
      }
      .search {
        display: none !important;
      }
      .customer blockquote {
        padding: 10px;
        text-align: justify;
      }
    }

The .search selector is suspicious: `display: none !important;`

The important directive is, well, quite important

It means, essentially, what it says; that ‘this is important, ignore subsequent rules, and any usual specificity issues, apply this rule!’

In normal use a rule defined in an external stylesheet is overruled by a style defined in the head of the document, which, in turn, is overruled by an in-line style within the element itself (assuming equal specificity of the selectors). Defining a rule with the !important ‘attribute’ (?) discards the normal concerns as regards the ‘later’ rule overriding the ‘earlier’ ones.

Luckily, this theme provides the ability to Edit CSS, so I can override that rule with a `display: block` declaration to always show the search page.
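To see why the blank page only showed up at certain resolutions, here is a small sketch that evaluates the `(min-width: 600px) and (max-width: 767px)` condition for a few viewport widths. The `matches` helper is hypothetical and only models inclusive min/max-width checks, not the full media query syntax.

```python
def matches(width, min_width=None, max_width=None):
    """Return True if a viewport `width` (in px) satisfies the conditions.

    Both bounds are inclusive, as min-width and max-width are in CSS.
    """
    if min_width is not None and width < min_width:
        return False
    if max_width is not None and width > max_width:
        return False
    return True


# Widths at which the theme's rule hides the .search element.
widths = [375, 599, 600, 700, 767, 768, 1024]
hidden_at = [w for w in widths if matches(w, min_width=600, max_width=767)]
print(hidden_at)
```

Only widths inside the 600 to 767 pixel band match, which fits the observation that the problem appeared on some screen sizes but not others.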

Issue #281

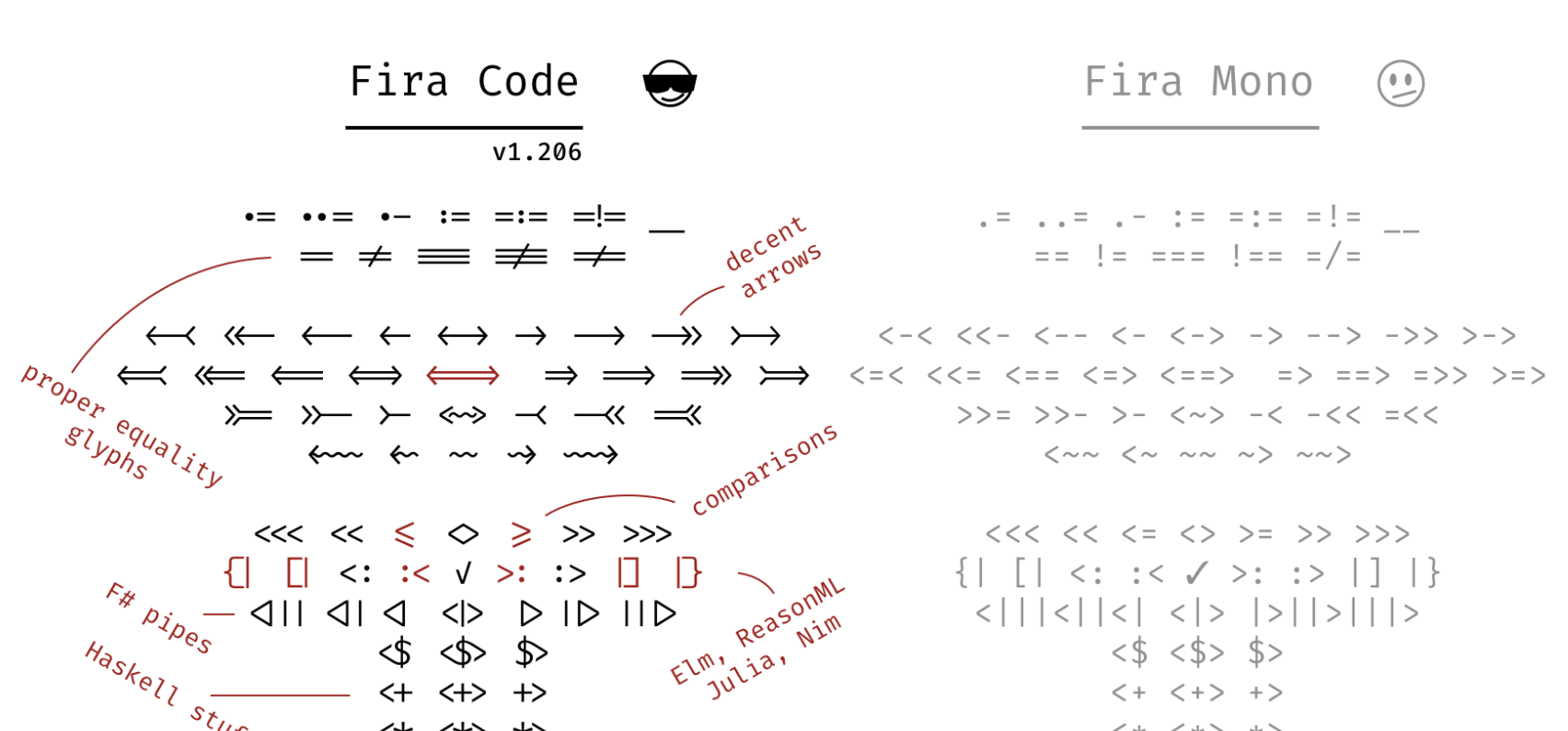

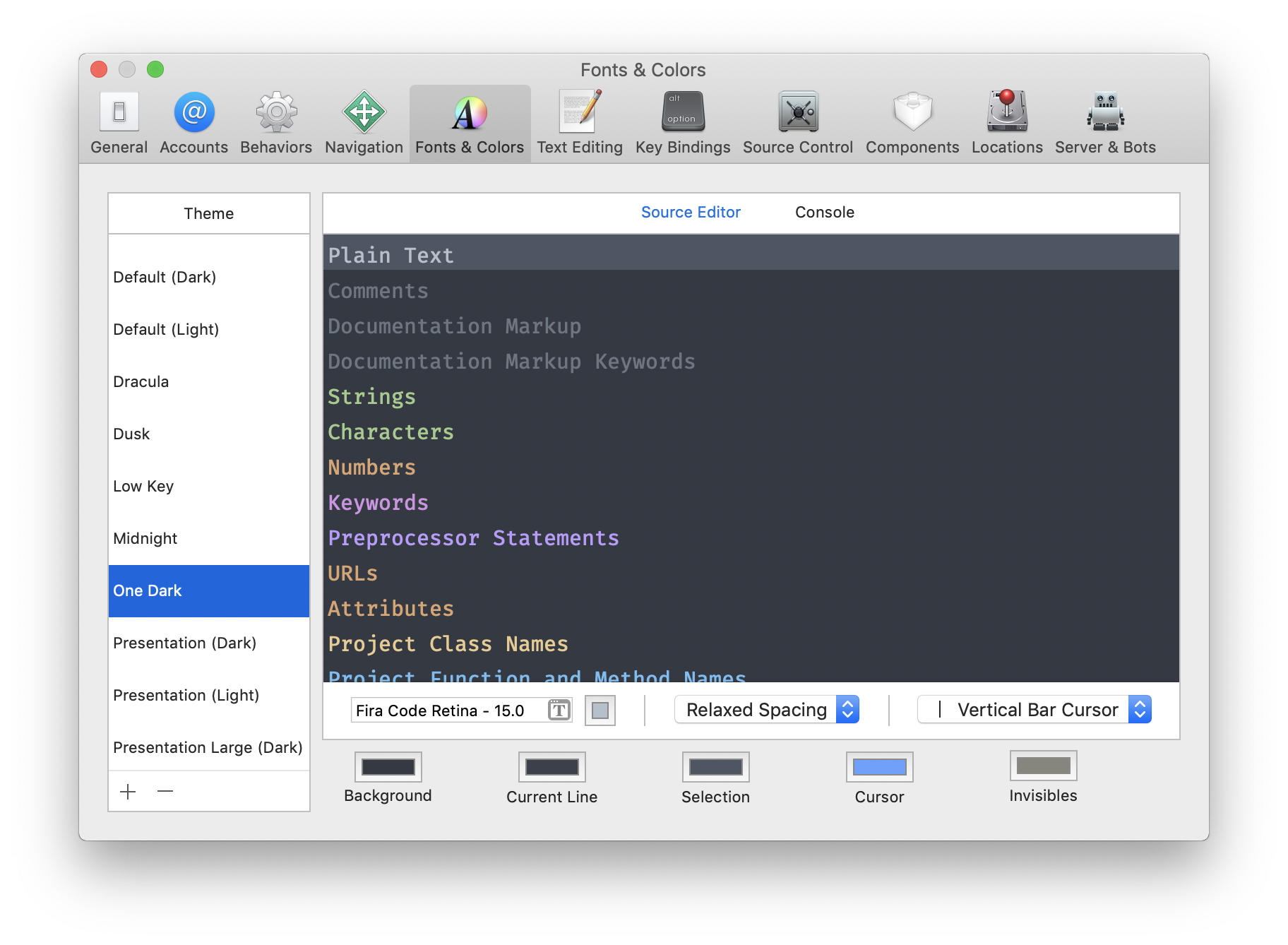

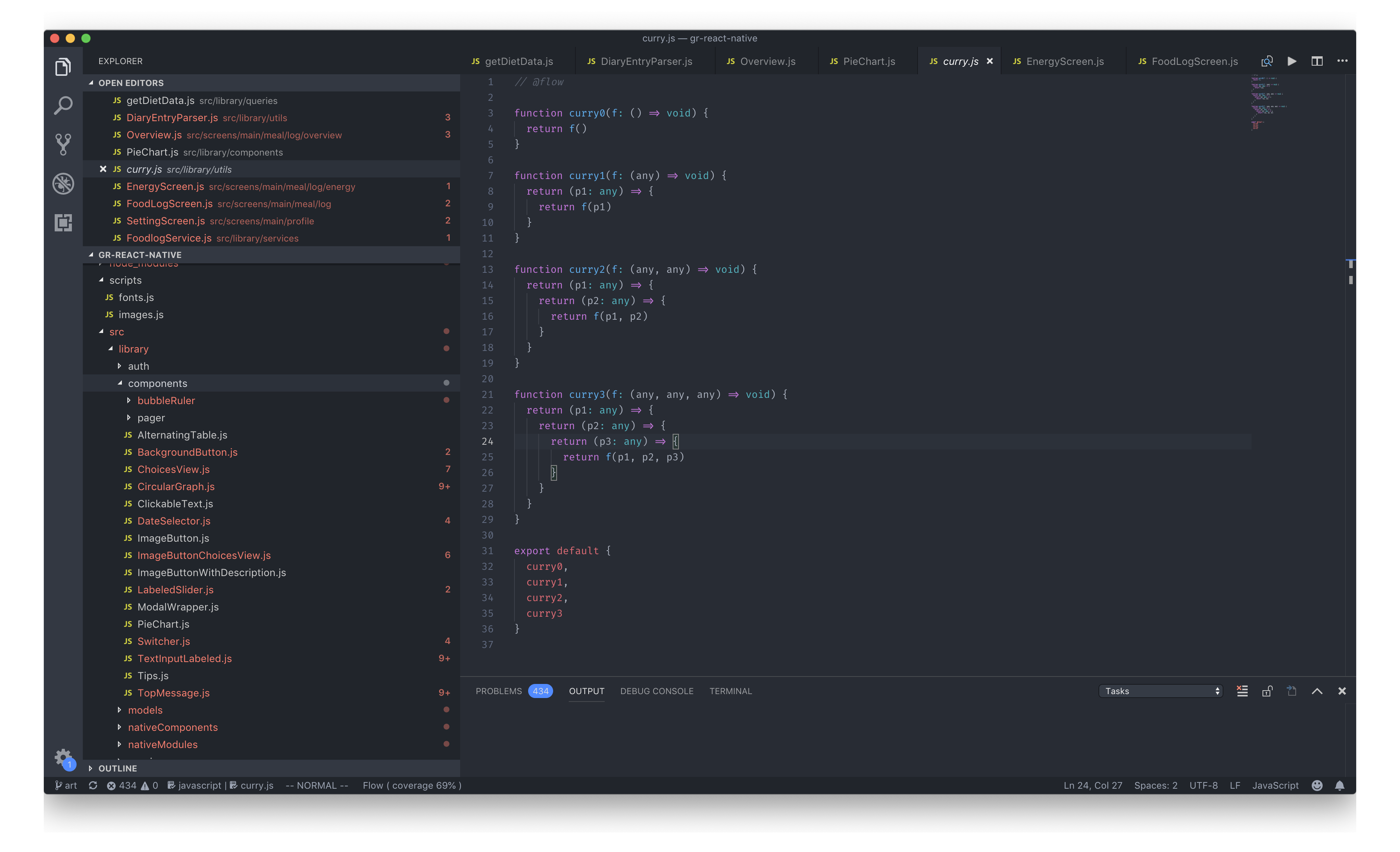

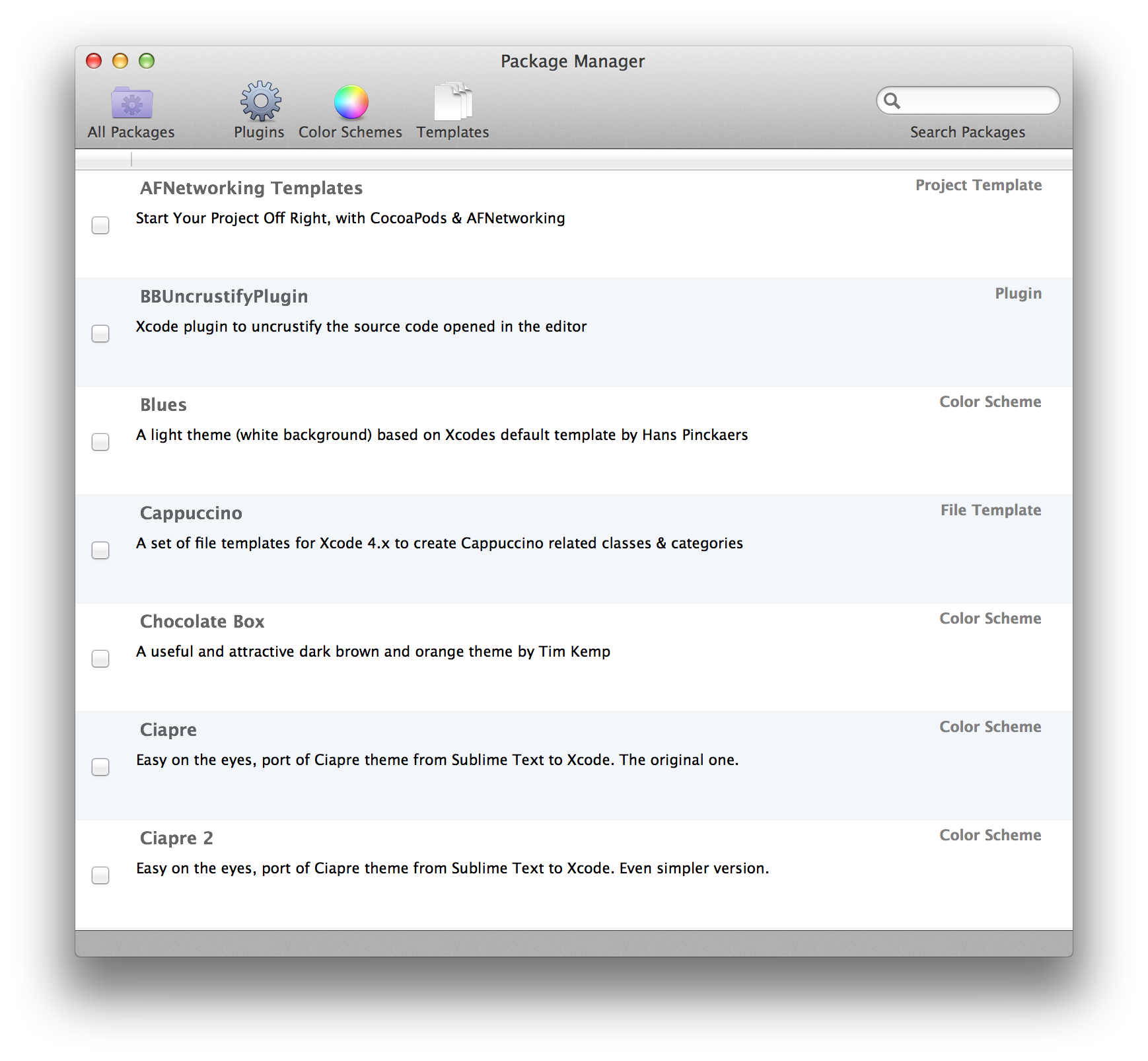

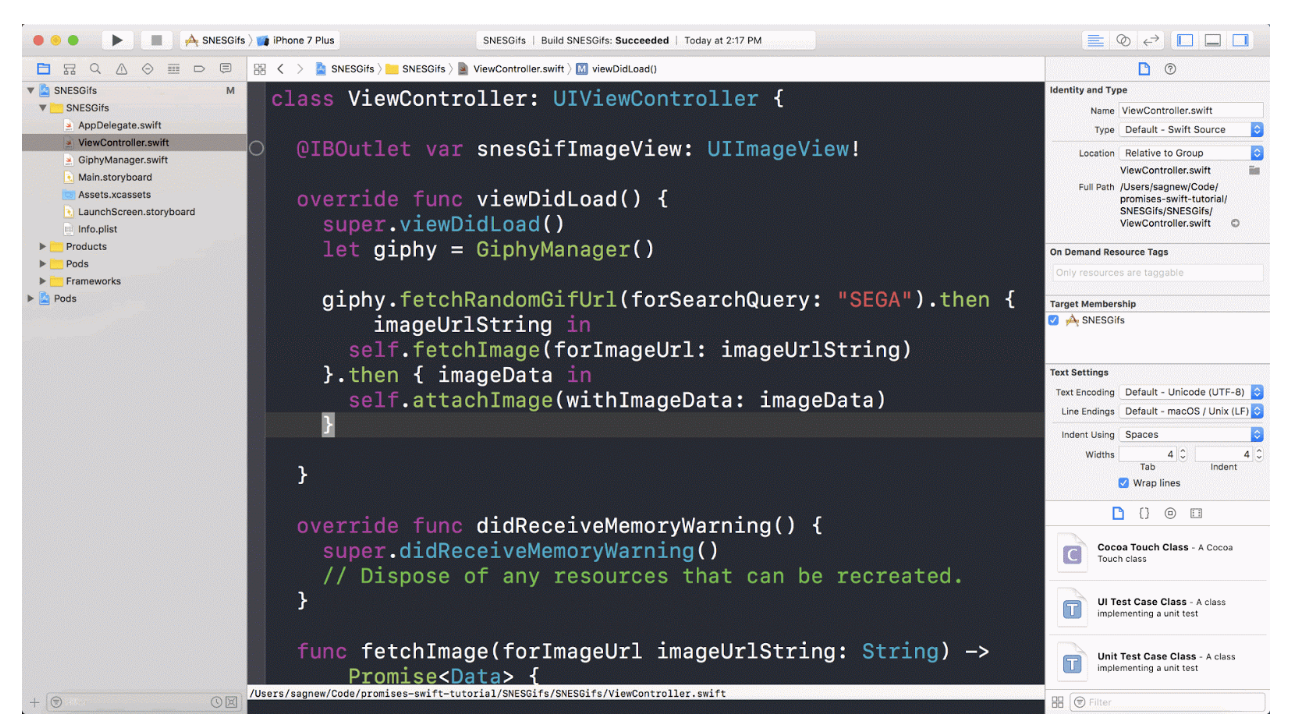

A good theme and font can increase your development happiness a lot. Ever since using Atom, I liked its One Dark theme. The background and text colors are just elegant and pleasant to the eyes.

one-dark-ui was originally designed for Atom and claims to adapt to most syntax themes. I use it together with the Fira Mono font from Mozilla.

There is also Dracula, which is popular, but the contrast seems too high for my eyes.

I like FiraCode font the most, it is just beautiful and supports ligatures.

Alternatively, you can browse ProgrammingFonts or other ligature fonts like Hasklig to see which font suits you.

Theme and font are completely a matter of personal taste, but if you are like me, please give One Dark and Fira a try. Here is how to do that in Xcode, Android Studio and Visual Studio Code, which are the editors that I use daily.

Firstly, you need to install the latest compiled font of FiraCode

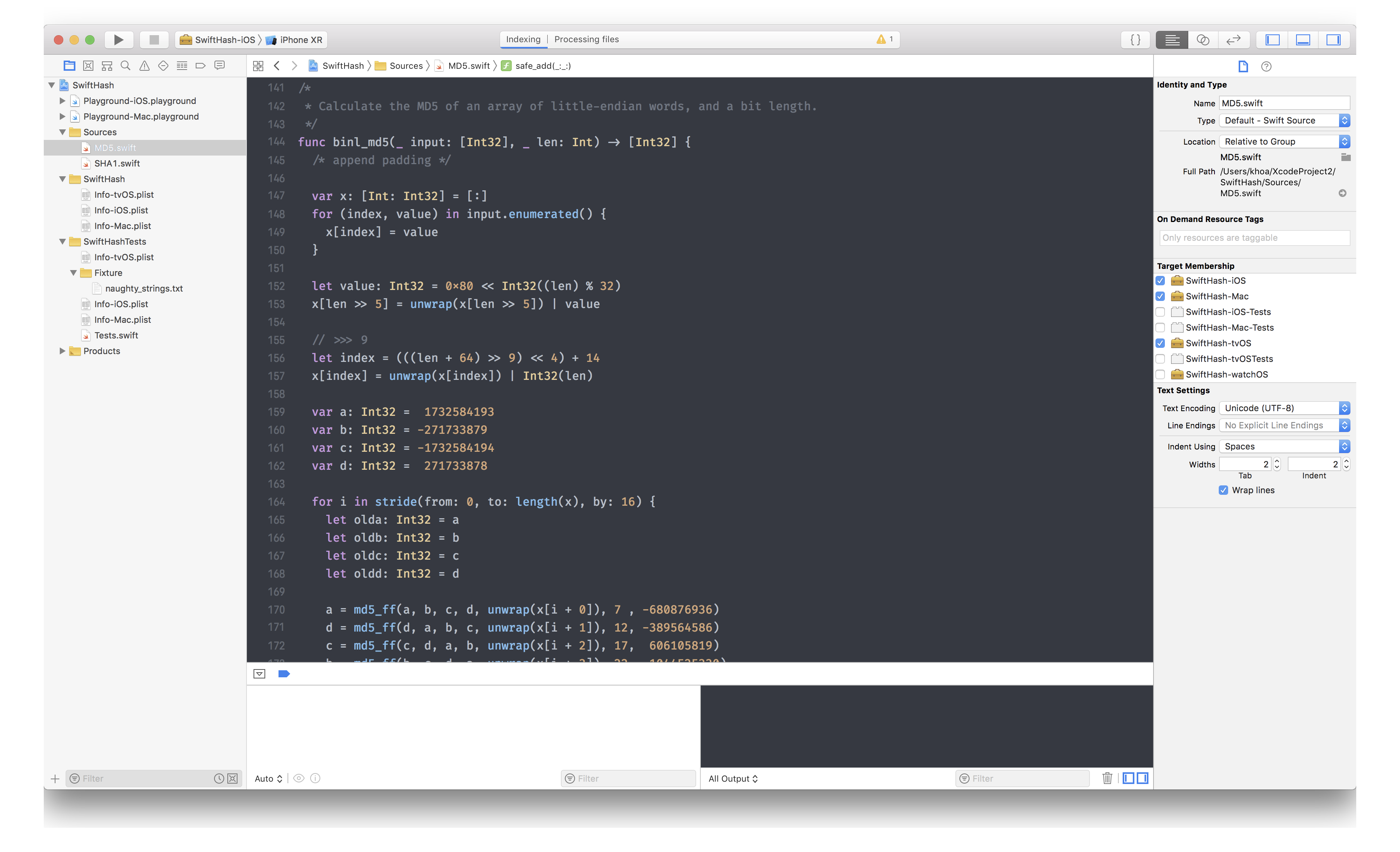

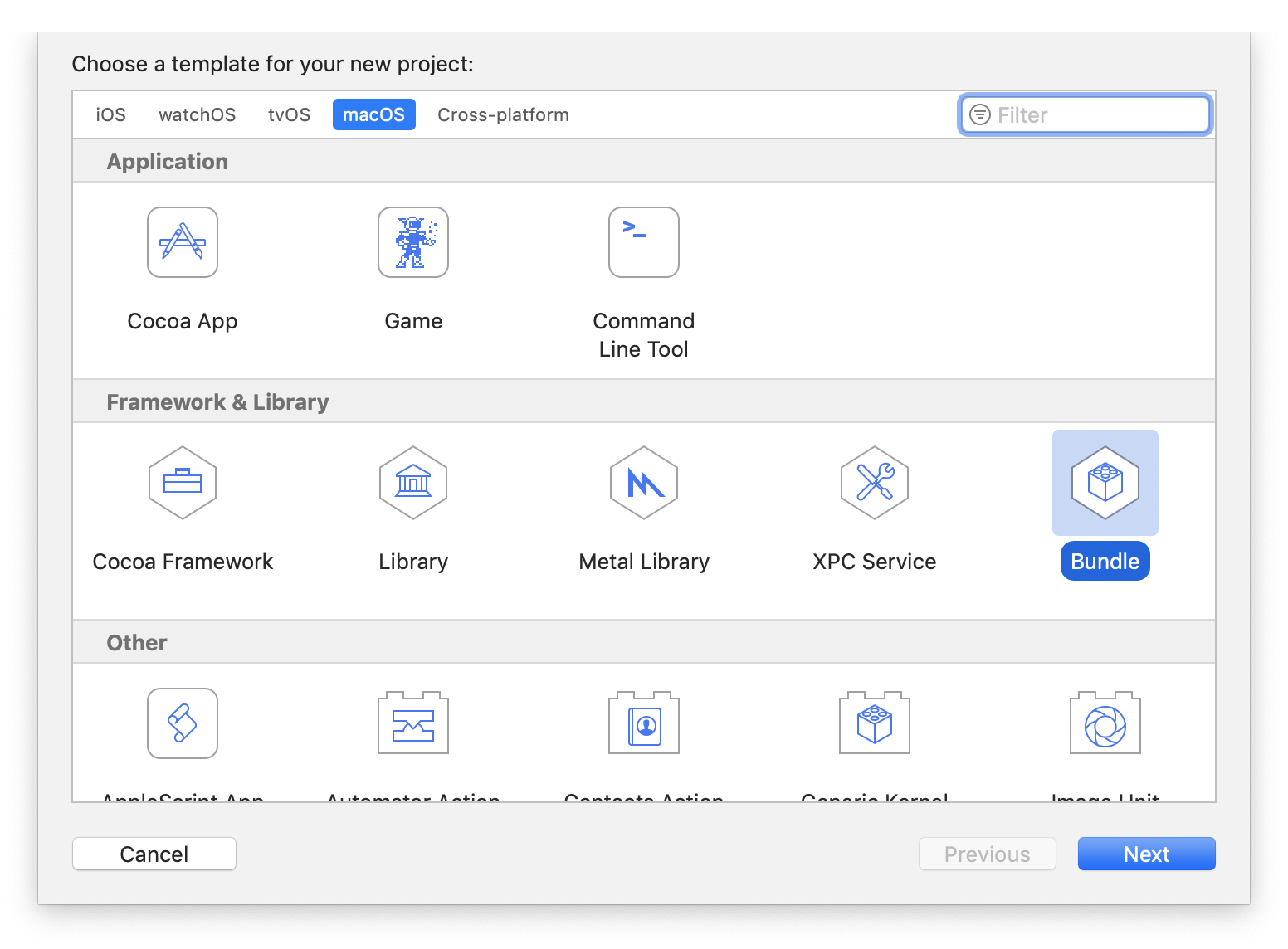

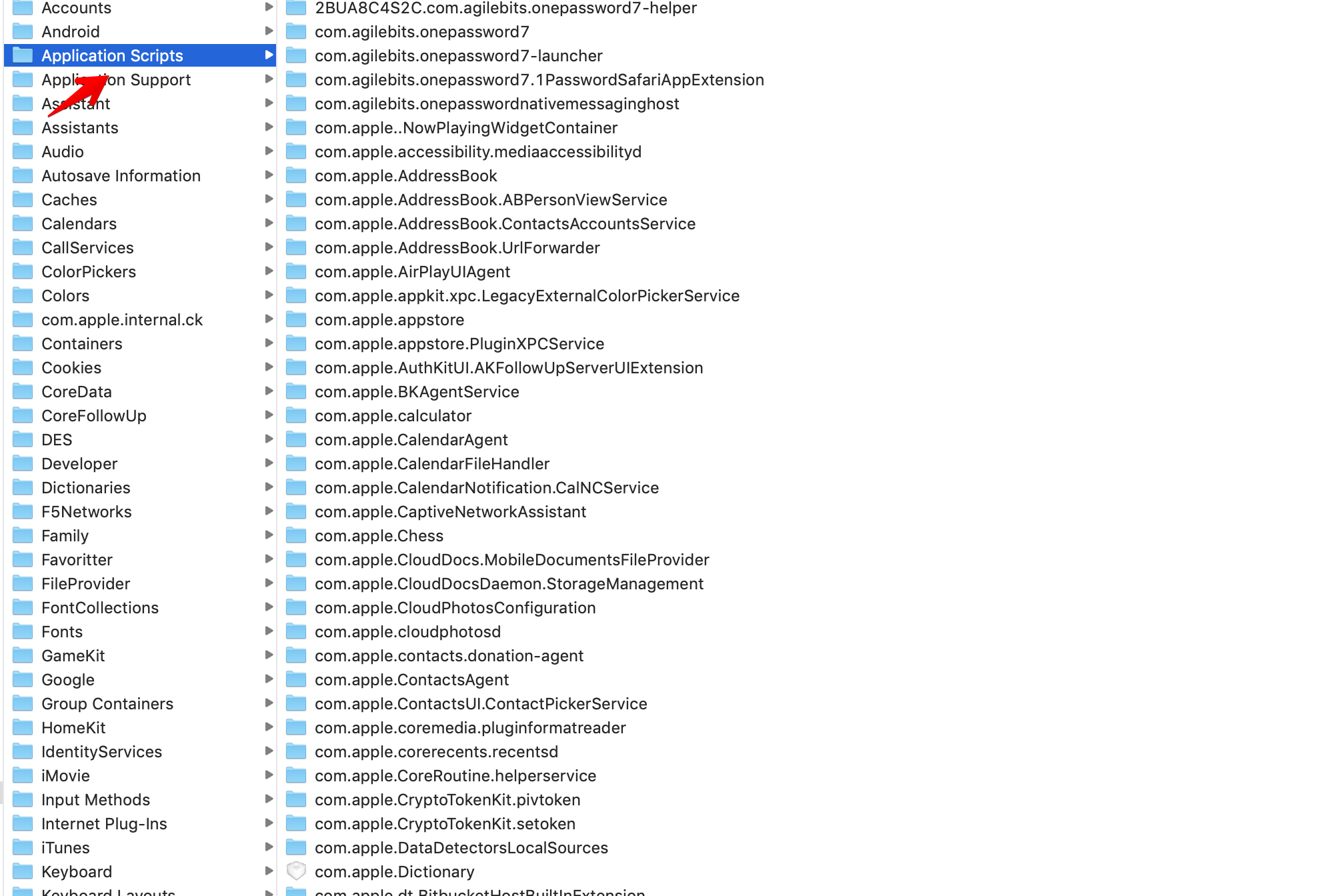

I used to have my own replication of One Dark, called DarkSide; this was how I learned to make an Xcode theme. For now, I find xcode-one-dark good enough. An Xcode theme is just an XML file with the .xccolortheme extension, placed in ~/Library/Developer/Xcode/UserData/FontAndColorThemes

After installing the theme, you should be able to select it from Preferences -> Fonts & Colors

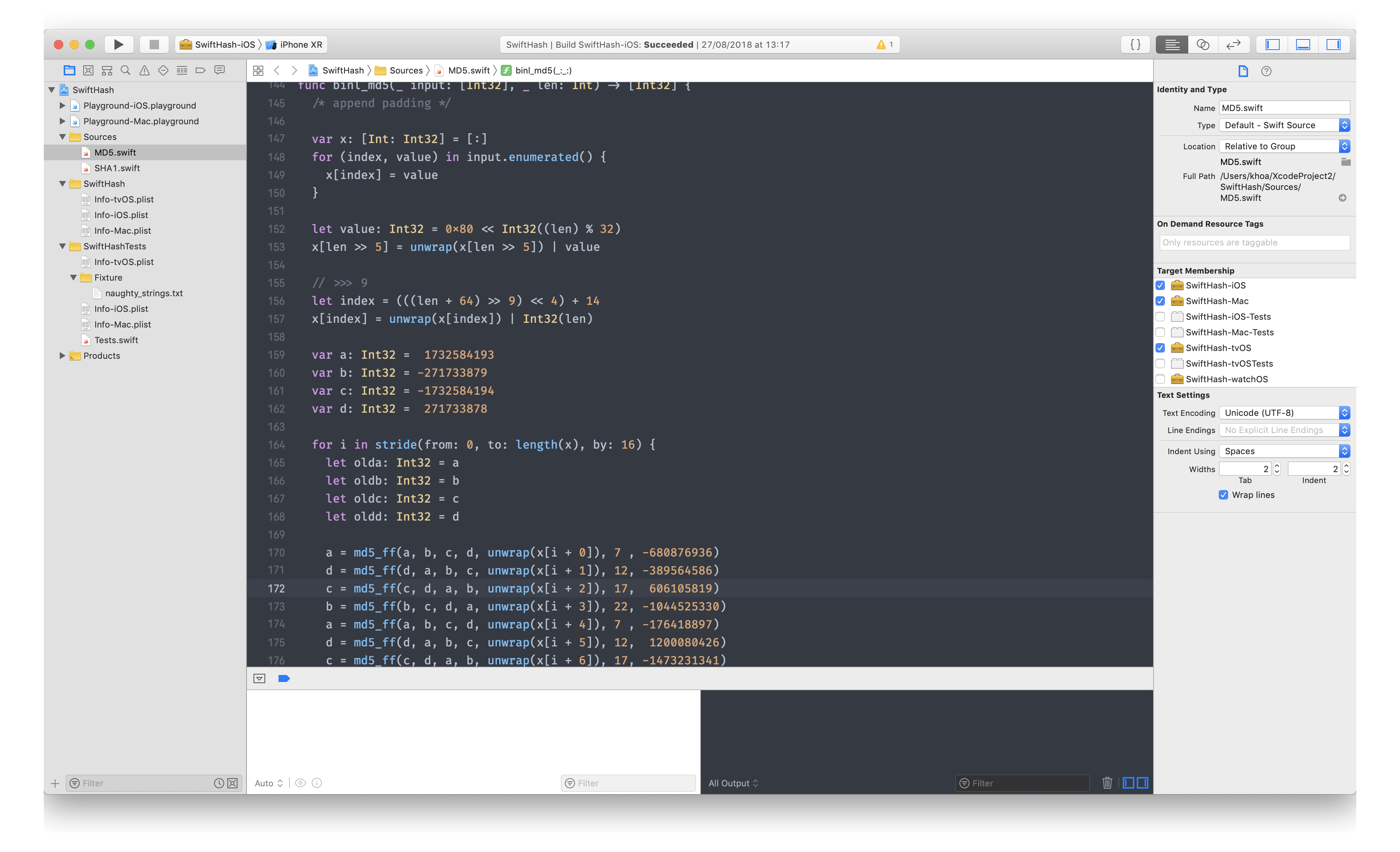

And it looks like below.

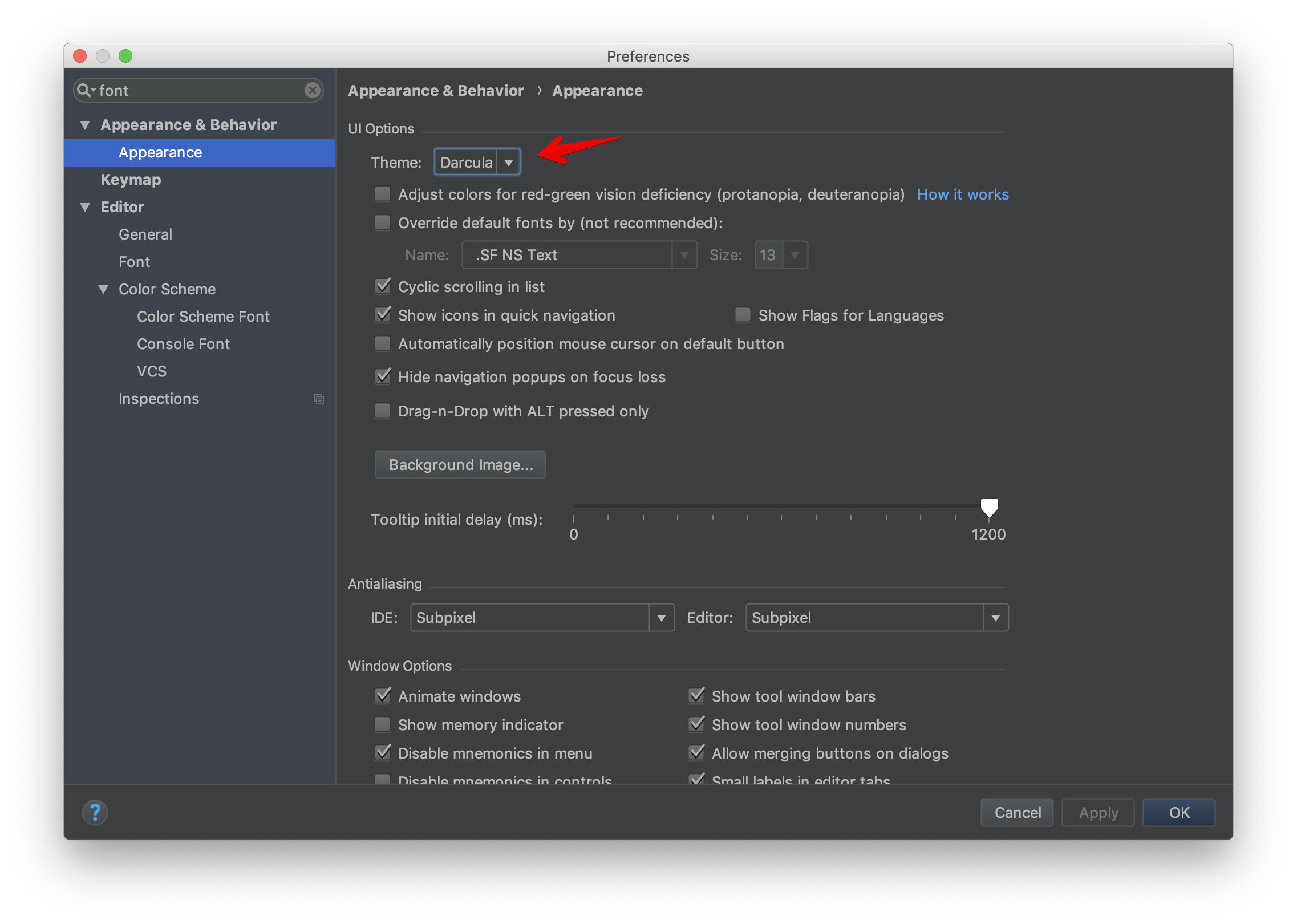

Android Studio ships with only the Default and Darcula themes. Let’s choose Darcula for now. I hope there will be One Dark support.

Also, Android Studio can select Fira Code, which we should have already installed. Remember to select Enable font ligatures to stay cool

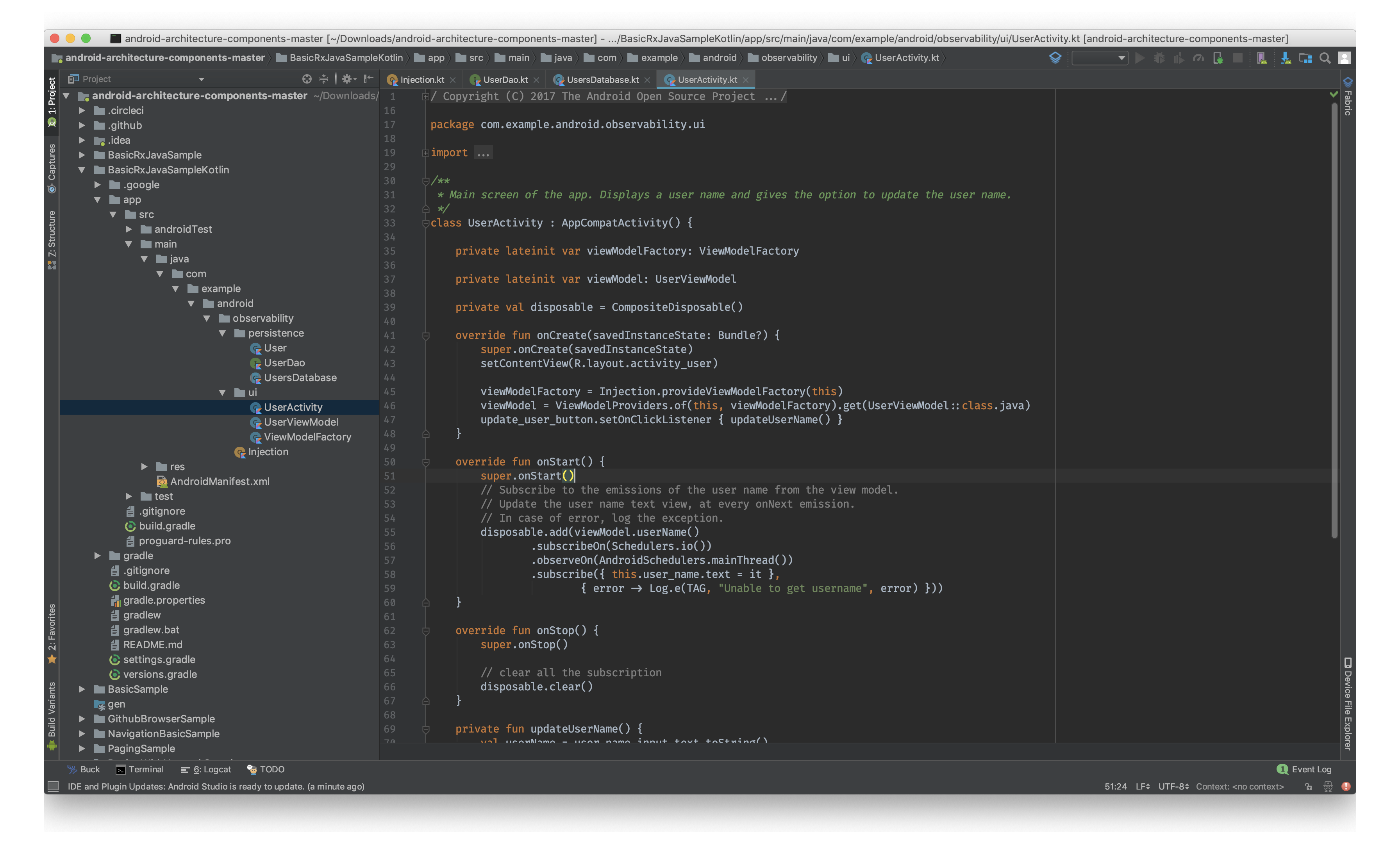

It looks like this

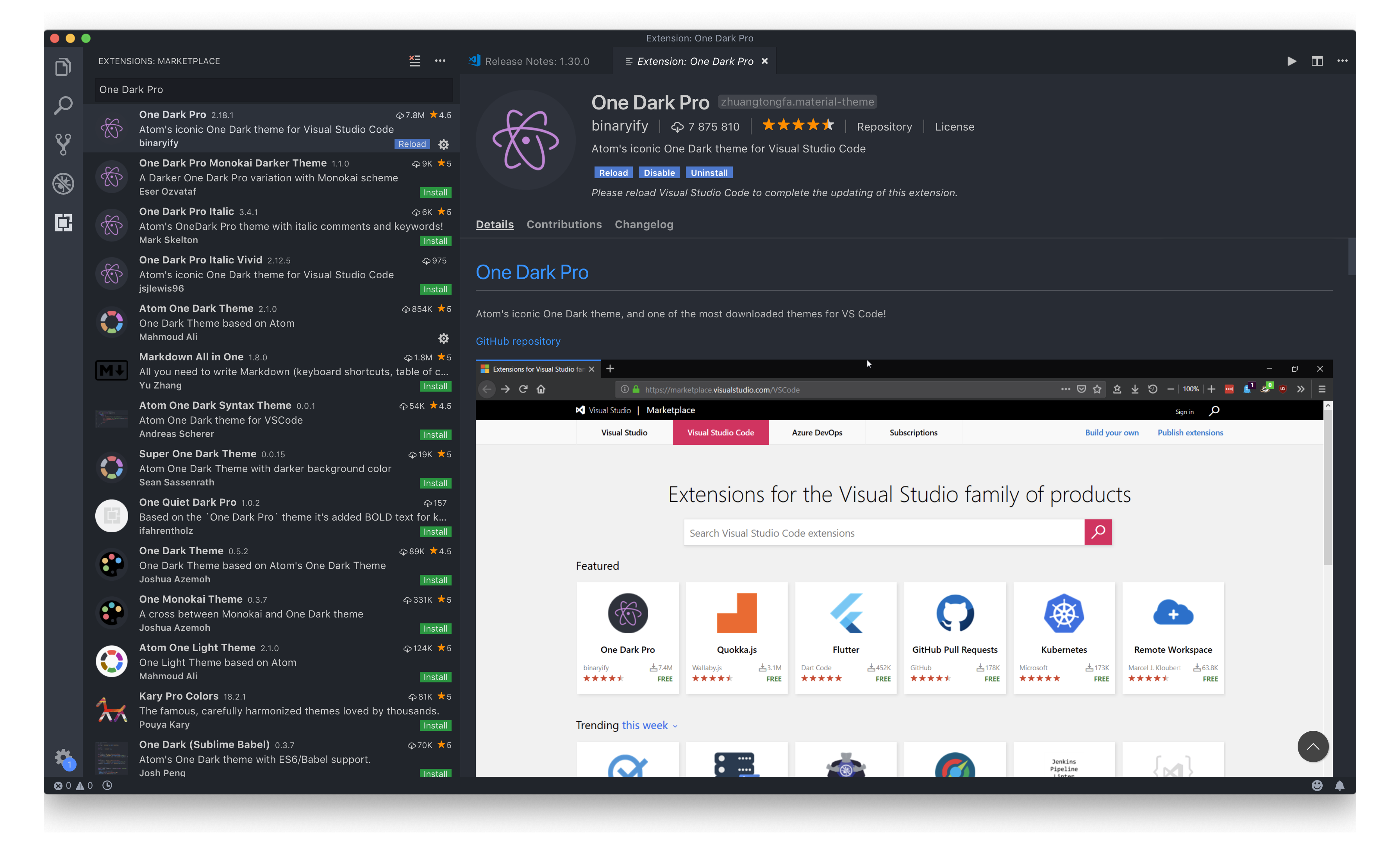

Installing theme in VSCode is easy with extensions. There is this One Dark Pro that we can install directly from Extensions panel in VS Code. Alternatively, you can also choose Atom One Dark Theme

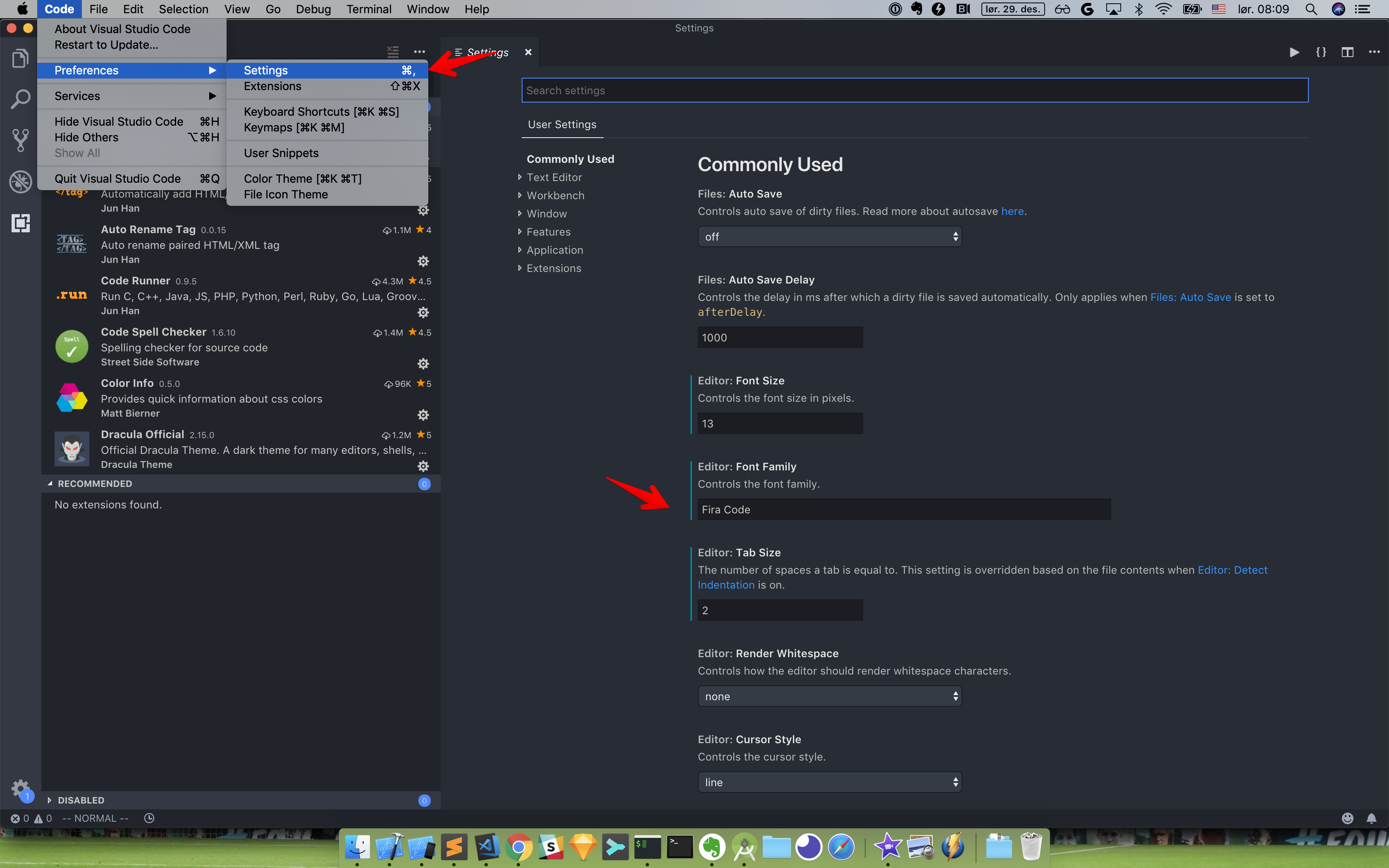

Then go to Preferences -> Settings to specify Fira Code . Remember to check for Font Ligatures

The result should look like this

Updated at 2020-12-05 05:33:52

Issue #280

Original post https://medium.com/fantageek/how-to-fix-wrong-status-bar-orientation-in-ios-f044f840b9ed

When I first started iOS, it was iOS 8 at that time, I had a bug that took me nearly a day to figure out. The issue was that the status bar always followed the device orientation even though I had already locked my main ViewController to portrait. This was why I started the notes project on GitHub, detailing the issues I’ve been facing.

ViewController is locked to portrait but the status bar rotates when device rotates · Issue #2 ·…

PROBLEM The rootViewController is locked to portrait. When I rotates, the view controller is portrait, but the status…github.com

Now it is iOS 12, and to my surprise people still have this issue. Today I will revisit this issue in an iOS 12 project using Swift.

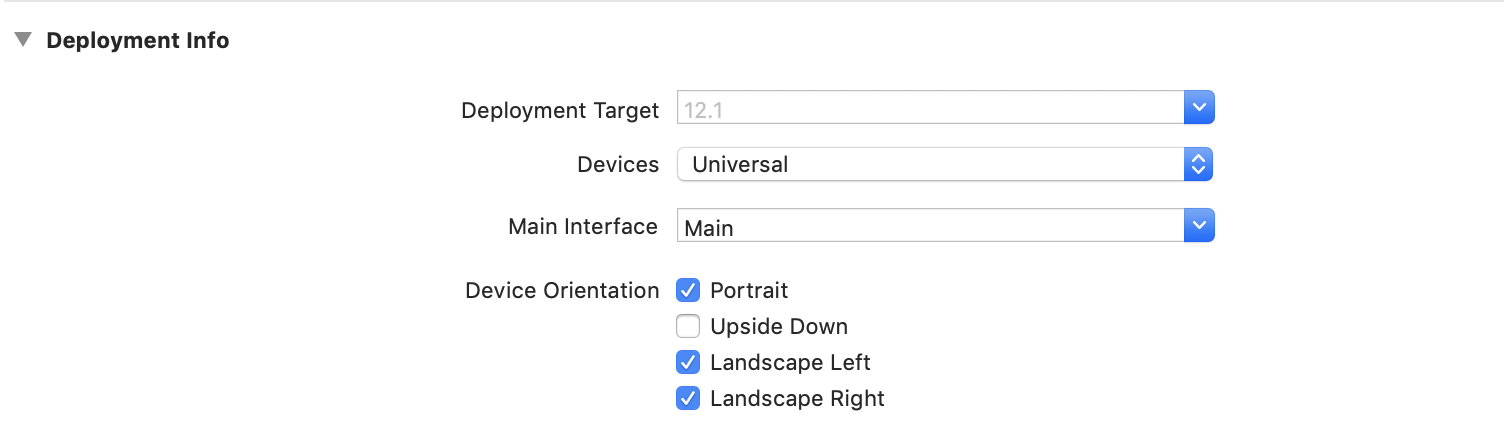

Most apps only support portrait mode, with 1 or 2 screens being in landscape for image viewing or video playback. So we usually declare Portrait, Landscape Left and Landscape Right.

Since iOS 7, apps have had a simple design with a focus on content. UIViewController was given a bigger role in appearance specification.

Suppose that we have a MainViewController as the rootViewController and we want it to be locked in portrait mode.

    @UIApplicationMain
    class AppDelegate: UIResponder, UIApplicationDelegate {
      var window: UIWindow?

      func application(_ application: UIApplication, didFinishLaunchingWithOptions launchOptions: [UIApplication.LaunchOptionsKey: Any]?) -> Bool {
        window = UIWindow(frame: UIScreen.main.bounds)
        window?.rootViewController = MainViewController()
        window?.makeKeyAndVisible()
        return true
      }
    }

There is a property called UIViewControllerBasedStatusBarAppearance that we can specify in Info.plist to assert that we want UIViewController to dynamically control status bar appearance.

A Boolean value indicating whether the status bar appearance is based on the style preferred for the current view controller.

Start by declaring in Info.plist

    <key>UIViewControllerBasedStatusBarAppearance</key>
    <true/>

And in MainViewController, lock status bar to portrait

import UIKit

class MainViewController: UIViewController {

let label = UILabel()

override func viewDidLoad() {

super.viewDidLoad()

view.backgroundColor = .yellow

label.textColor = .red

label.text = "MainViewController"

label.font = UIFont.preferredFont(forTextStyle: .headline)

label.sizeToFit()

view.addSubview(label)

}

override func viewDidLayoutSubviews() {

super.viewDidLayoutSubviews()

label.center = CGPoint(x: view.bounds.size.width/2, y: view.bounds.size.height/2)

}

override var supportedInterfaceOrientations: UIInterfaceOrientationMask {

return .portrait

}

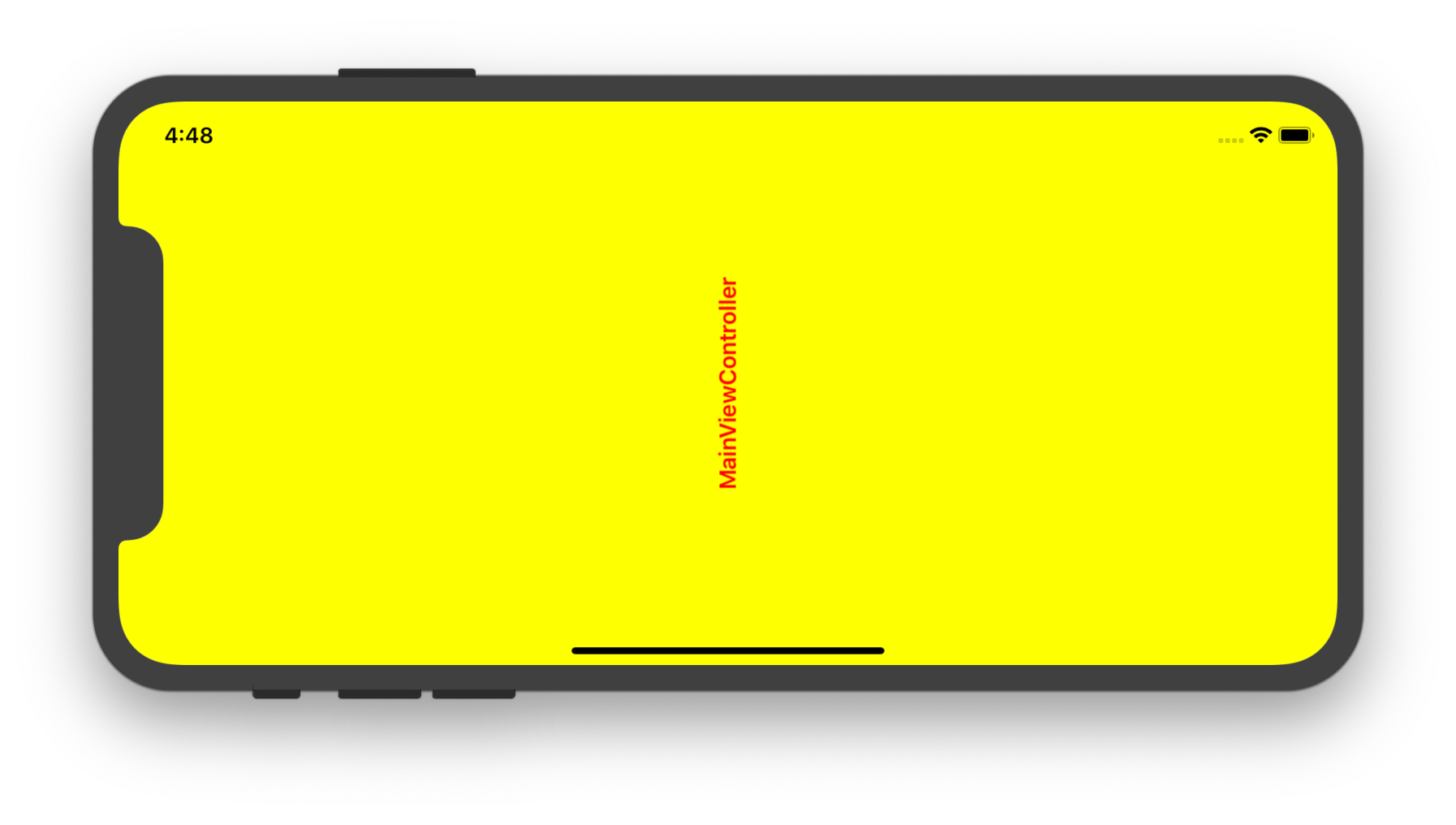

}The idea is that no matter orientation the device is, the status bar always stays in portrait mode, the same as our MainViewController .

But to my surprise, this is not the case !!! As you can see in the screenshot below, the MainViewController is in portrait, but the status bar is not.

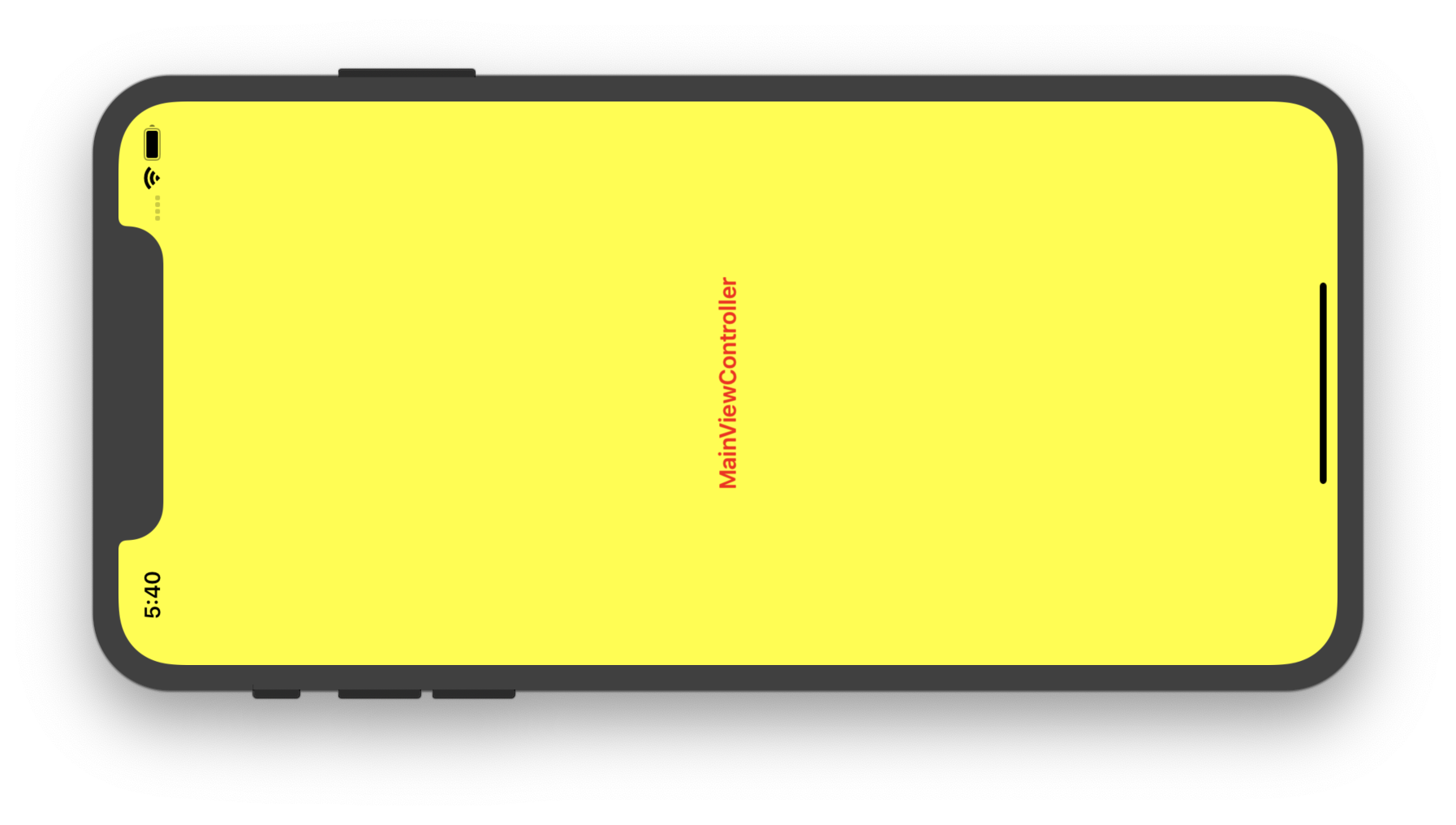

It took me a while to figure out that while I declare UIWindow in code, I also have a Main storyboard that configures UIWindow

    <key>UIMainStoryboardFile</key>
    <string>Main</string>

And this UIWindow from the Storyboard has a default root ViewController

    import UIKit

    class ViewController: UIViewController {
      override func viewDidLoad() {
        super.viewDidLoad()
      }
    }

In iOS, the status bar is hidden in landscape by default.

Setting UIViewControllerBasedStatusBarAppearance to false means that we want this default behaviour

Setting UIViewControllerBasedStatusBarAppearance to true means we want to have control over the status bar appearance. So to hide the status bar, we must override prefersStatusBarHidden in the rootViewController, the presented view controller or the full screen view controller

As you can see, the problem is that the ViewController from UIWindow in Storyboard clashes with our MainViewController in code, hence a lot of confusion.

The solution is simple: remove Main.storyboard and stop referencing it in the project, which means deleting this entry from Info.plist

<key>UIMainStoryboardFile</key>

<string>Main</string>

Now we get our desired behaviour: the status bar and view controllers are always locked to portrait regardless of the device orientation.

If you set up the UI in code like me, remember to clean up the storyboard and ViewController that Xcode generates by default when it creates the project.

Issue #279

Original post https://medium.com/fantageek/what-is-create-react-native-app-9f3bc5a6c2a3

As someone who comes to React Native from an iOS and Android background, I like React and Javascript as much as I like Swift and Kotlin. React Native is a cool concept, but things that sound good in theory may not work well in practice.

Up until now, I still don’t get why big companies choose React Native over native development, since in the end our job is to deliver a good experience to the end user, not easy development for developers. I have seen companies say goodbye to React Native, and people say that React Native is just the React philosophy of learn once, write anywhere, and that they choose React Native mainly for the React style, not for cross platform development. But it can’t be denied that people choose React Native mostly for cross platform development. Write once, deploy to many places is so compelling. Of course we still need to tweak a bit for each platform, but most of the code is reused.

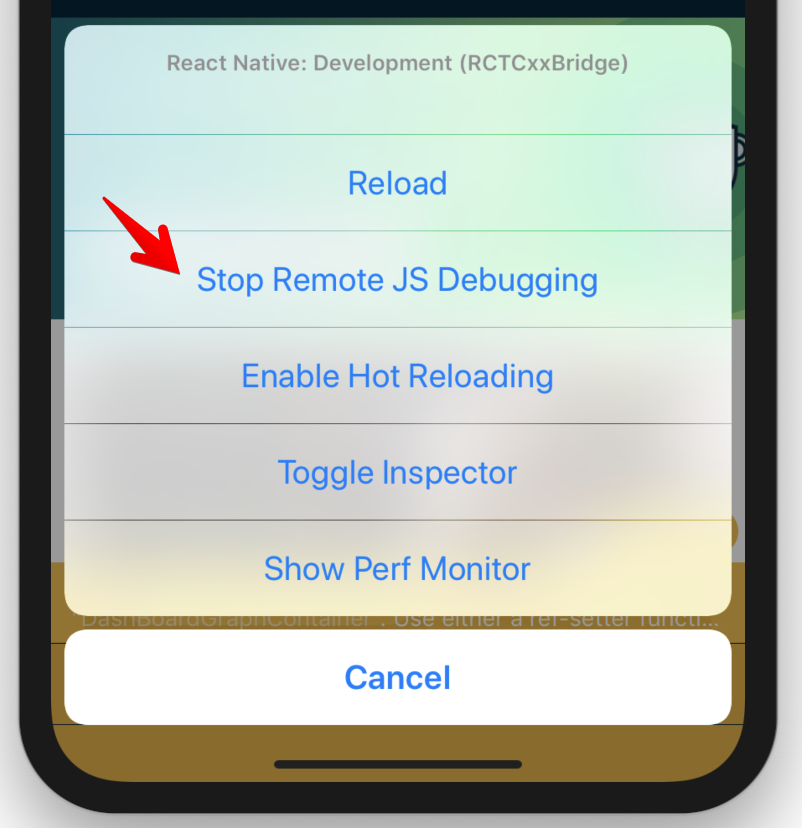

I have to confess that I spend less time developing than digging into compiling and bundling issues. Nothing comes for free. To be successful with React Native, we not only need a strong understanding of both iOS and Android, but must also be ready to step in and solve issues ourselves. We can’t just open issues, pray, and hope someone solves them. We can’t just use dependencies blindly and call it a day. A trivial bug can be super hard to fix in React Native, and our projects can’t wait. Big companies have, of course, enough resources to solve issues, but for small teams, React Native is a trade-off to consider.

Enough about that. Let’s go to the official React Native getting started guide. It recommends create-react-native-app. As someone who has made electron.js apps and used create-react-app, I chose create-react-native-app right away, as I thought it was the equivalent for creating React Native apps. I knew there was react-native init and thought that it must have some problems, otherwise people wouldn’t have introduced create-react-native-app.

After playing with create-react-native-app and eject, and then reading carefully through its caveats, I knew it was time to use just react-native init. In the end I just need a quick way to bootstrap a React Native app with enough tooling (Babel, JSX, hot reloading). However, create-react-native-app is just a limited experience inside Expo, with opinionated services like Expo push notifications docs.expo.io/versions/latest/guides/push-notifications.html and connectivity to Google Cloud Platform and AWS. I know everything has its use case, but in this case it’s not the tool for me. Dependencies are the root of all evil, and React Native already has a lot; I don’t want more unnecessary things.

The concept of Expo is actually nice, in that it allows newbies to step into React Native fast with recommended tooling already set up, and the ability to eject later. It is also useful for sharing a Snack to help reproduce issues. But the name of the repo create-react-native-app is a bit misleading.

Maybe it’s just me, or …

dev.to/kylessg/ive-released-over-100-apps-in-react-native-since-2015-ask-me-anything-1m9g

No, I would never use Expo for a serious project. I imagine what ends up happening in most projects is they reach a point where they have to ultimately eject the app (e.g. needing a native module) which sounds very painful.

dev.to/gaserd/why-i-do-not-use-expo-for-react-native-dont-panic-1bp

If you use EXPO, you use wrapper-wrapper.

hackernoon.com/understanding-expo-for-react-native-7bf23054bbcd

Expo is a great tool for getting started quickly with React Native. However, it’s not always something that can get you to the finish line. From what I’ve seen, the Expo team is making a lot of great decisions with their product roadmap. But their rapid pace of development has often led to bugs in new features.

docs.expo.io/versions/latest/introduction/why-not-expo

JS and assets managed by Expo require connectivity to Google Cloud Platform and AWS

http://www.albertgao.xyz/2018/05/30/24-tips-for-react-native-you-probably-want-to-know

If you are coming from web world, you need to know that create-react-native-app is not the create-react-app equivalent

But if you want more control over your project, something like tweaking your react native Android and iOS project, I highly suggest you use the official react-native-cli. Still, one simple command, react-native init ProjectName, and you are good to go.

medium.com/@paulsc/react-native-first-impressions-expo-vs-native-9565cce44c92

I wish I would have used “Native” from the get go, instead I wasted quite a bit of time with Expo

Maybe the biggest one for me is that the whole thing feels like adding another layer of indirection and complexity to an already complicated stack

I wish Expo would get removed from the react-native Getting Started guide to avoid confusing new arrivals

levelup.gitconnected.com/how-i-ditched-expo-for-pure-react-native-fc0375361307

The Expo dev team did a lot of good stuff there, and they are all doing it for free, so I definitely want to thank all of them for providing a smoother entrance to this world. If they ever manage to solve this issue of custom native code somehow, it may become my platform of choice again.

https://medium.com/@aswinmohanme/how-i-reduced-the-size-of-my-react-native-app-by-86-27be72bba640

I love everything about Expo except the size of the binaries. Each binary weighs around 25 MB regardless of your app.

So the first thing I did was to migrate my existing Expo app to React Native.

If you are new to React Native and you think this is the “must” way to go. Check if it meets your needs first.

If you are planning to use third party RN packages that have custom native modules. Expo does not support this functionality and in that case, you will have to eject Expo-Kit. In my opinion, if you are going to eject any kit, don’t use it in the first place. It will probably make things harder than if you hadn’t used the kit at all.

Issue #278

Original post https://medium.com/fantageek/best-places-to-learn-ios-development-85ebebe890cf

It’s good to be a software engineer, when you get paid to do what you like best. The good thing about software development is that it’s fast-changing and challenging. This is also the bad thing, since you need to continuously learn and adapt to keep up with the trends.

This is for those who have been iOS developers for some time. If you have a lot of free time to spend, then congratulations. If you do not, you know the luxury of free time, then it’s time to learn wisely, by selecting only the good resources. But where should I learn from?

Welcome to the technology age, where there are tons of things to keep track of: iOS releases, SDKs, 3rd party frameworks, build tools, patterns, … Here is my list of sites that I tend to open very often. It is opinionated and date aware. If this were several years ago, then http://nshipster.com/ and https://www.objc.io/ would be at the top of the list.

I like to keep track of stuff, via my lists https://github.com/onmyway133/fantastic-ios, https://github.com/onmyway133/fantastic-ios-architecture, https://github.com/onmyway133/fantastic-ios-animation. Also you should use services like https://feedly.com/ to organise your subscription feed.

The point of this is for continuous learning, so it should be succinct. There is no particular order.

This is probably one of the most visited sites for learning iOS development. All the tutorials are well designed and easy to follow. If you can, subscribe to the videos https://videos.raywenderlich.com/courses. I myself find watching videos much more relaxing. And the team is reviving its podcast https://www.raywenderlich.com/rwpodcast, which I really recommend.

The people behind objc.io started their swift talks last year. I’m a fan of clean code, so these talks are really helpful when they show how to organise and write code. Also, they have awesome guests from some companies too.

If you have less time, then this is a great option. These cover many aspects of the iOS SDKs, and the videos are weekly.

NSScreencast: Bite-sized Screencasts for iOS Development

Quality videos on iOS development, released each week. nsscreencast.com

I actually learn a lot from reading John’s blog. He shows various tips on iOS programming and the Swift language. His podcast is also a must subscribe https://www.swiftbysundell.com/podcast/. I’ve listened to many podcasts, but I like this one best.

All posts

Like many abstractions and patterns in programming, the goal of the builder pattern is to reduce the need to keep… www.swiftbysundell.com

I like this because the content is short and focused. I can easily follow and grasp the gist immediately. And it has a large collection of varied content.

AppCoda - Learn Swift & iOS Programming by Doing

AppCoda is an educational startup that focuses on teaching people how to learn Swift & iOS programming blog. Our… www.appcoda.com

This has updated posts for every new SDK feature. Also, the content is short and to the point. It’s like Wikipedia for iOS development.

Use Your Loaf

If you are upgrading to Xcode 10 and migrating to Swift 4.2 you are likely to see a number of errors because Swift 4.2… useyourloaf.com

The number of newsletters now is like stars in the sky. Among them I like iOS Goodies the best. It is driven by the community https://github.com/iOS-Goodies/iOS-Goodies and contains lots of awesome new stuff each week.

iOS Goodies

Weekly iOS newsletter curated by Marius Constantinescu, logo by José Torre, founded by Rui Peres and Tiago Almeida. ios-goodies.com

This is a bit advanced, as it discusses the Swift language. But it’s good to read if you want to know more about hidden language features.

Erica Sadun

Recently, some of my simulators launched and loaded just fine. Others simply went black. It didn’t seem to matter which… ericasadun.com

I just discovered this recently, but I kinda like the blog. The number of posts is growing, and they are good reads about iOS SDKs.

swifting.io

Our dear friend and a founder of swifting.io - Michał, had an accident two months ago. He had a bad luck and got hit by… swifting.io

I learn many good patterns and clean code from reading this blog. He suggests many ideas on refactoring code. Really recommend.

Khanlou

To convert this into an infinite collection, we need to think about a few things. First, how will we represent an index… khanlou.com

This has been in my favorite list for a long time. Although this is a bit advanced, it is good to dive deep into Swift.

Articles - Ole Begemann

oleb.net

This is my favorite, too. It offers a lot of practical advice for iOS development. He also talks about build tools, which I really like.

Posts

Have you ever written tests? Usually, they use equality asserts, e.g. XCTAssertEqual, what happens if the object isn’t… merowing.info

Realm collects a huge collection of iOS videos from conferences and meet ups, and it has transcripts too. It’s more than enough to fill your free time.

Realm Academy - Expert content from the mobile experts

Developer videos, articles and tutorials from top conferences, top authors, and community leaders. academy.realm.io

This has posts in both iOS and Android. But I really like the contents, very good.

App Development and Design Blog | Big Nerd Ranch

Our blog offers app development and design tutorials, tips and tricks for software engineering and insights for team… www.bignerdranch.com

This is very advanced, and suitable for hardcore fans. I feel small when reading the posts.

Cocoa with Love

The upcoming CwlViews library offers a syntax for constructing views that has a profound effect on the Cocoa… www.cocoawithlove.com

I really enjoy reading blog posts from Atomic Object. There are posts for many platforms, and about life, so you need to filter for iOS development.

Atomic Spin

More and more studies have shown that the most effective teams are the ones whose members trust each other and feel… spin.atomicobject.com

This has very good articles about iOS. Highly recommend.

React Native Animations: Part 2 - RaizException - Raizlabs Developer Blog

When we build apps for our clients, beautiful designs and interactions are important. But equally important is… www.raizlabs.com

This has topics for many iOS features. All the contents are good and succinct.

iOS Development - Computer Vision iOS Apps

Computer Vision iOS Apps www.invasivecode.com

I like posts about animation and replicating app features. This has all of them.

This has a series of small tips on how to use iOS SDKs and other 3rd party frameworks. Good to know.

Little Bites of Cocoa - Tips and techniques for iOS and Mac development - Weekday mornings at 9:42…

Tips and techniques for iOS and Mac development - Weekday mornings at 9:42 AM. The goal of each of these ‘bites’ is to… littlebitesofcocoa.com

All the posts are good, short and to the point. Really like this.

@samwize

¯_(ツ)_/¯ samwize.com

I think that’s enough. Feel free to share and suggest other awesome blogs that I might have missed. Also, it’s good to contribute back to the community by writing your own blog posts. You will learn a lot by sharing.

Issue #277

Original post https://codeburst.io/using-bitrise-ci-for-react-native-apps-b9e7b2722fe5

After trying Travis, CircleCI and BuddyBuild, I now choose Bitrise for my mobile applications. The many cool steps and workflows make Bitrise an ideal CI to try. Like any other CI, the learning curve and configuration can be intimidating at first. Things that work locally can fail on CI, and how to get files that are git ignored into CI builds is a common problem.

In this post I will show how to deploy React Native apps for both iOS and Android. The key thing to remember is to have the same setup on CI as you have locally. That means ensuring the exact same versions of packages and tools, being aware of file patterns in gitignore since those files won’t be there on CI, and knowing how to properly use environment variables and secured files.

This post tries to be intuitive, with lots of screenshots. I hope you learn something and avoid the hours that I wasted.

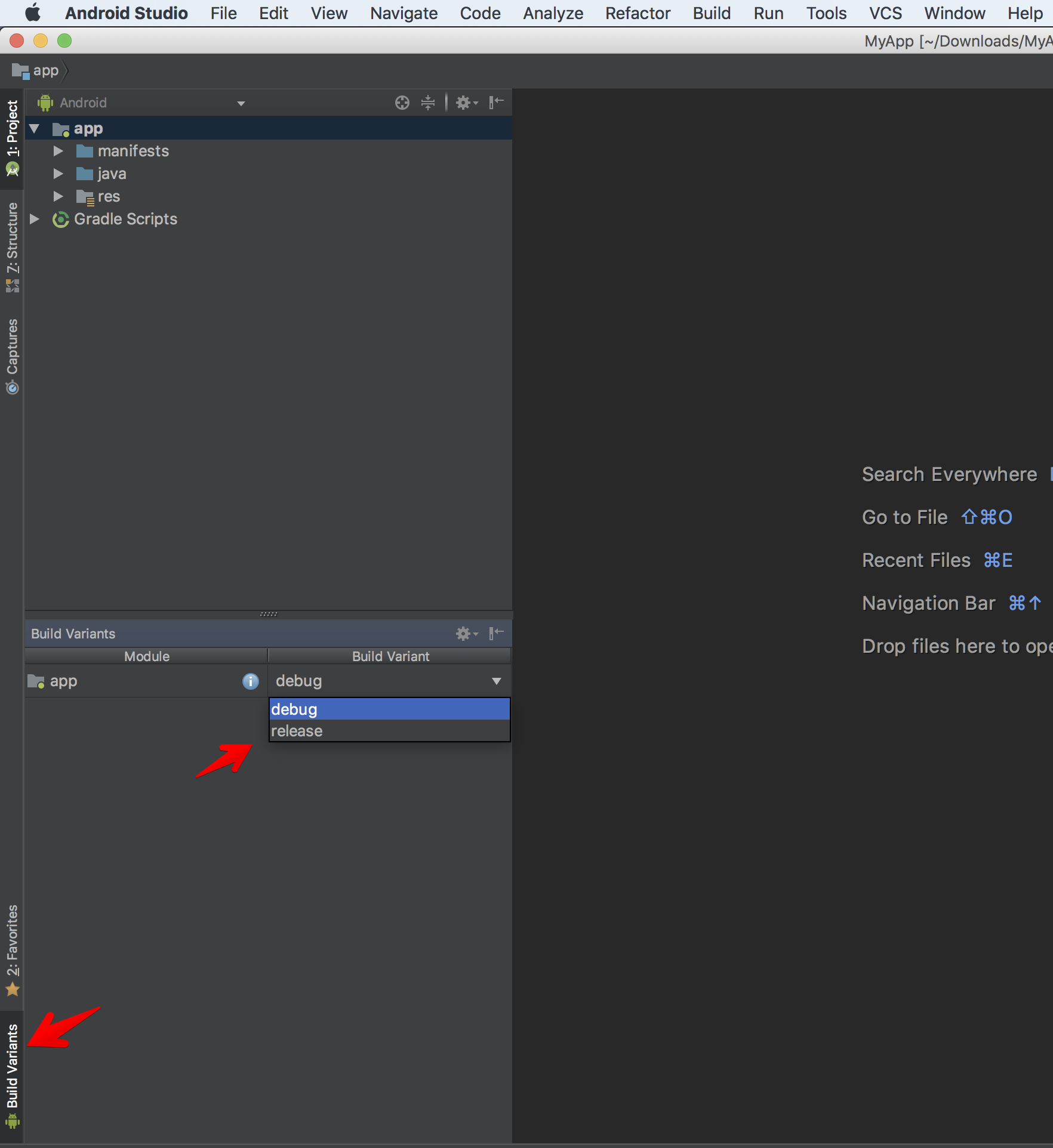

In its simplest sense, React Native is just Javascript code with native iOS and Android projects, and Bitrise has very good support for React Native. It scans the ios and android folders and suggests schemes and build variants to build.

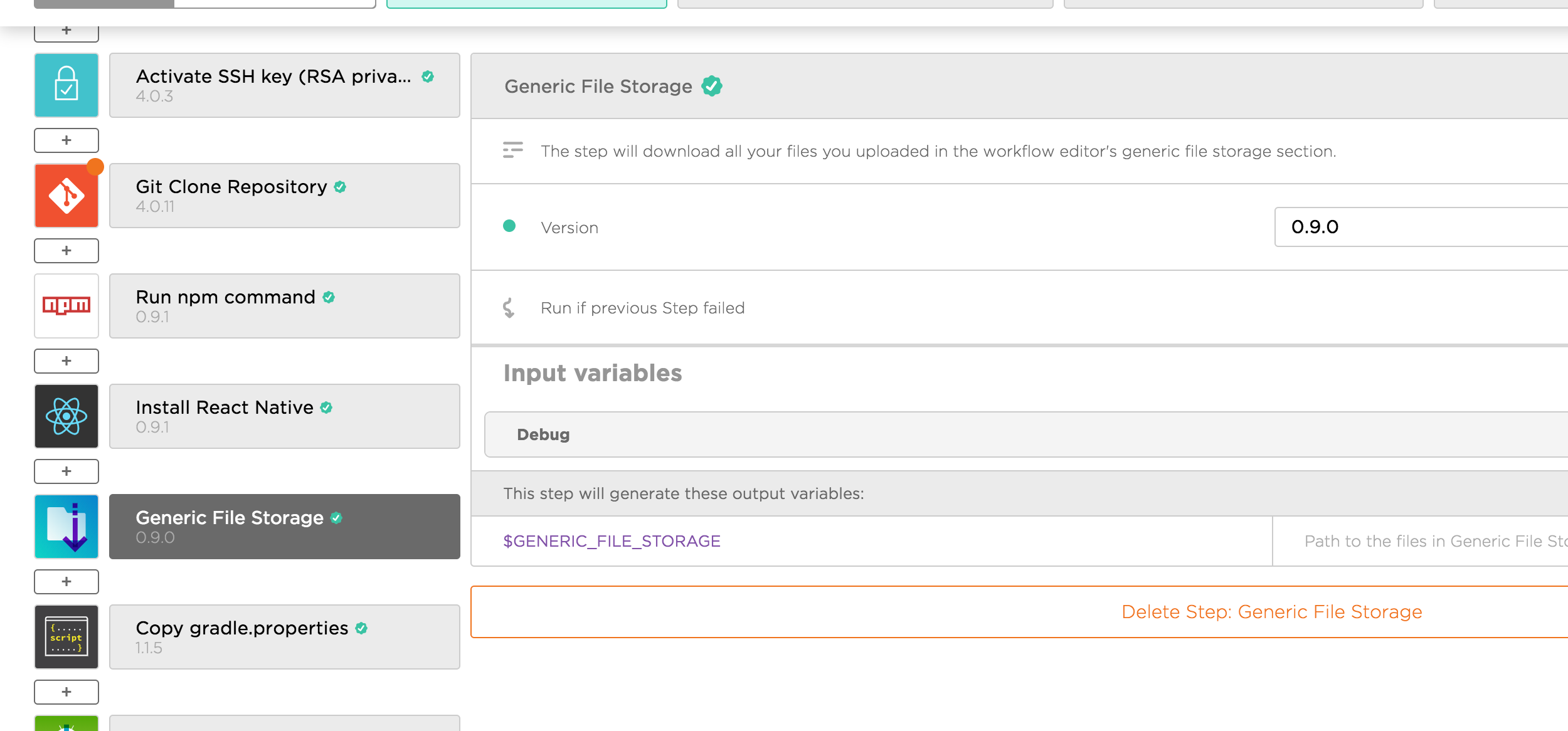

Another good thing about Bitrise is its various useful steps and workflows. Unlike other CIs where we have to spend days on how to properly edit the configuration file, Bitrise has a pretty good UI to add and edit steps. Steps are just custom scripts, and we can write pretty much what we like. Most of the predefined steps are open source. Here are a few

Unless you use the Build with Simulator step, you will need a provisioning profile and certificates with private keys in order to build for devices.

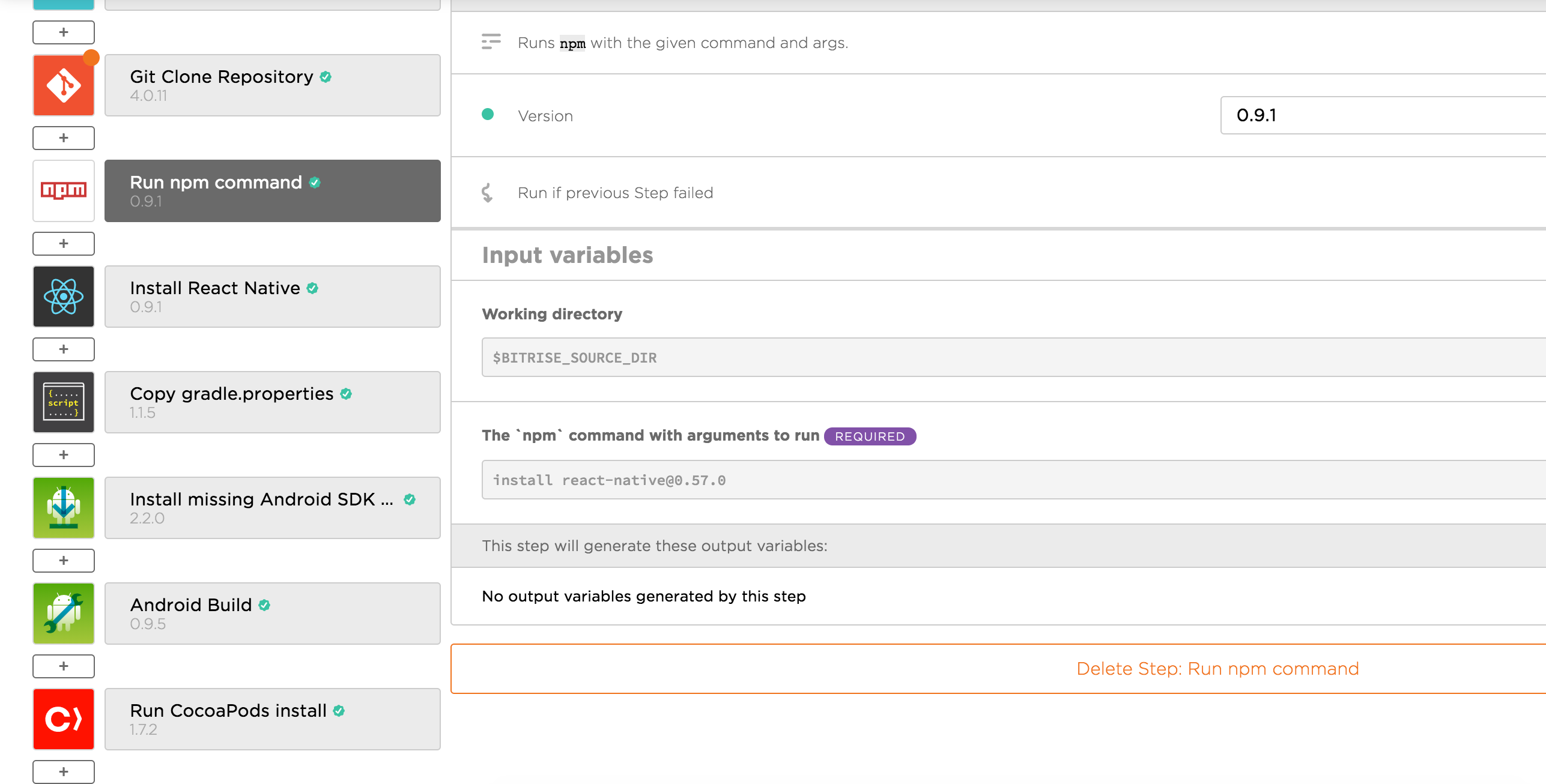

React Native moves fast and breaks things. If you don’t have the same version of React Native, you will have a hard time figuring out why the builds constantly fail on CI.

Currently I use React Native 0.57.0, so I enter install react-native@0.57.0 to let Bitrise install the same version. But normally you just need to run npm install, as versions should be explicitly defined in the package.json file.

Also, we need to make sure we have the same version of react-native-cli with the Install React Native step
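To double check which version is pinned before comparing it with what CI installs, a small script can read it from package.json. This is only a minimal sketch: the function name declared_rn_version and the sample package.json are made up for illustration, and the plain sed match assumes the dependency sits on its own line.

```shell
#!/usr/bin/env bash
# Print the react-native version string declared in a package.json file.
# Hypothetical helper: a plain sed match, assuming one dependency per line.
declared_rn_version() {
  sed -n 's/.*"react-native"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' "$1"
}

# Demo on a throwaway package.json (contents are illustrative only).
pkg="$(mktemp)"
cat > "$pkg" <<'EOF'
{
  "dependencies": {
    "react": "16.5.0",
    "react-native": "0.57.0"
  }
}
EOF
declared_rn_version "$pkg"
```

In a real project you would point the function at ./package.json instead of the temporary file.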

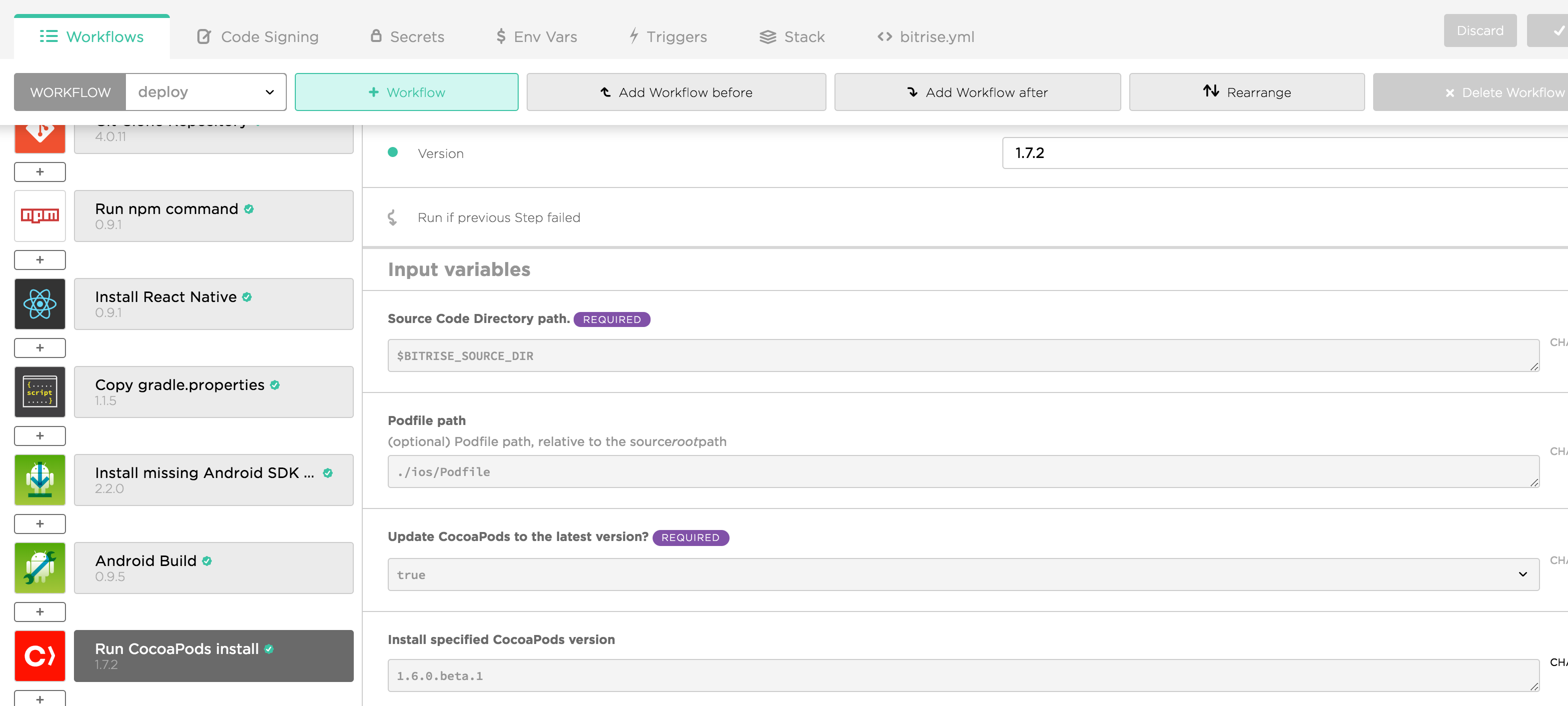

It’s safe to have the same CocoaPods version. For Xcode 10, we need at least CocoaPods 1.6.0.beta-1 to avoid the bug “Skipping code signing because the target does not have an Info.plist file”. Note that our Xcode project is inside the ios folder, so we specify ./ios/Podfile for the Podfile path

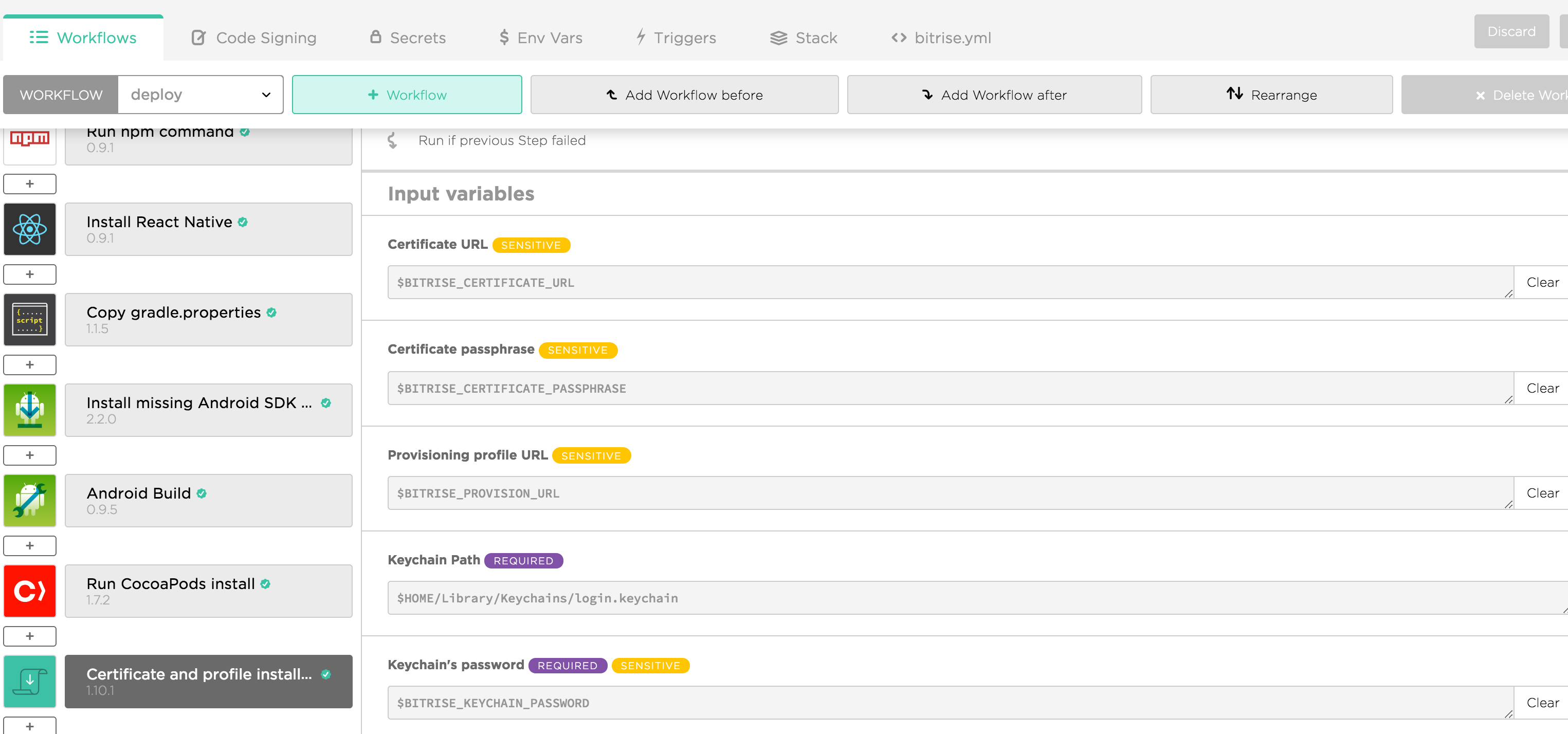

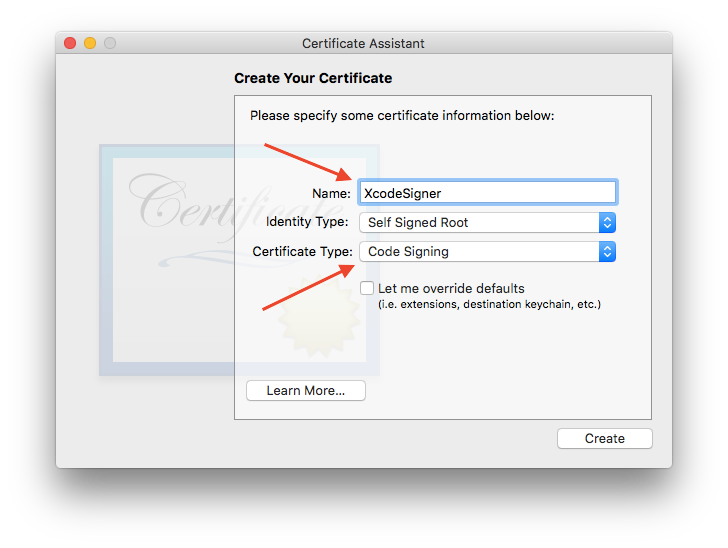

Under Workflow -> Code Signing we can upload provisioning profiles and certificates.

Then we need to use Certificate and profile install step to make use of the profiles and certificates we uploaded.

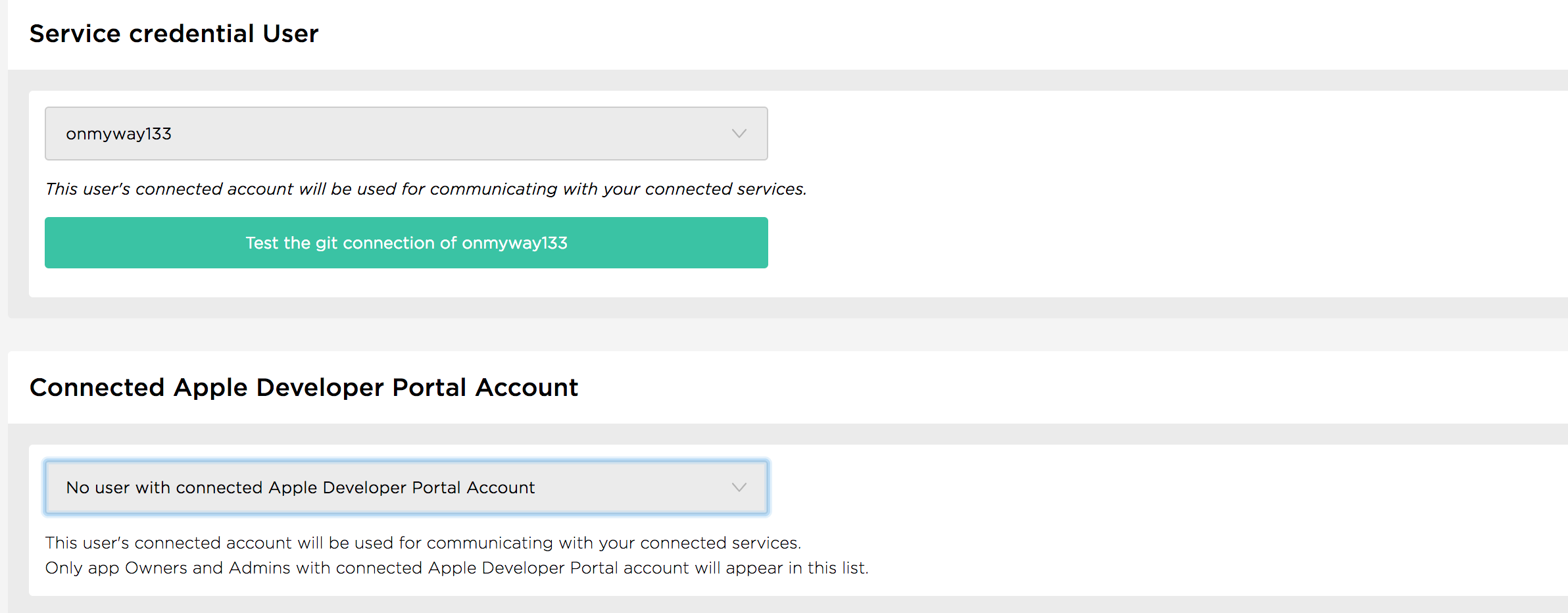

Another option is to use the iOS Auto Provision step, but it requires an account in the team that has a connected Apple Developer Account. This way Bitrise can autogenerate profiles for us.

Sometimes you need to set “Should the step try to generate Provisioning Profiles even if Xcode managed signing is enabled in the Xcode project?” to yes

Under Xcode Archive & Export for iOS -> Debug -> Additional options for xcodebuild call, we need to pass -allowProvisioningUpdates to make the automatic provisioning profile update happen.

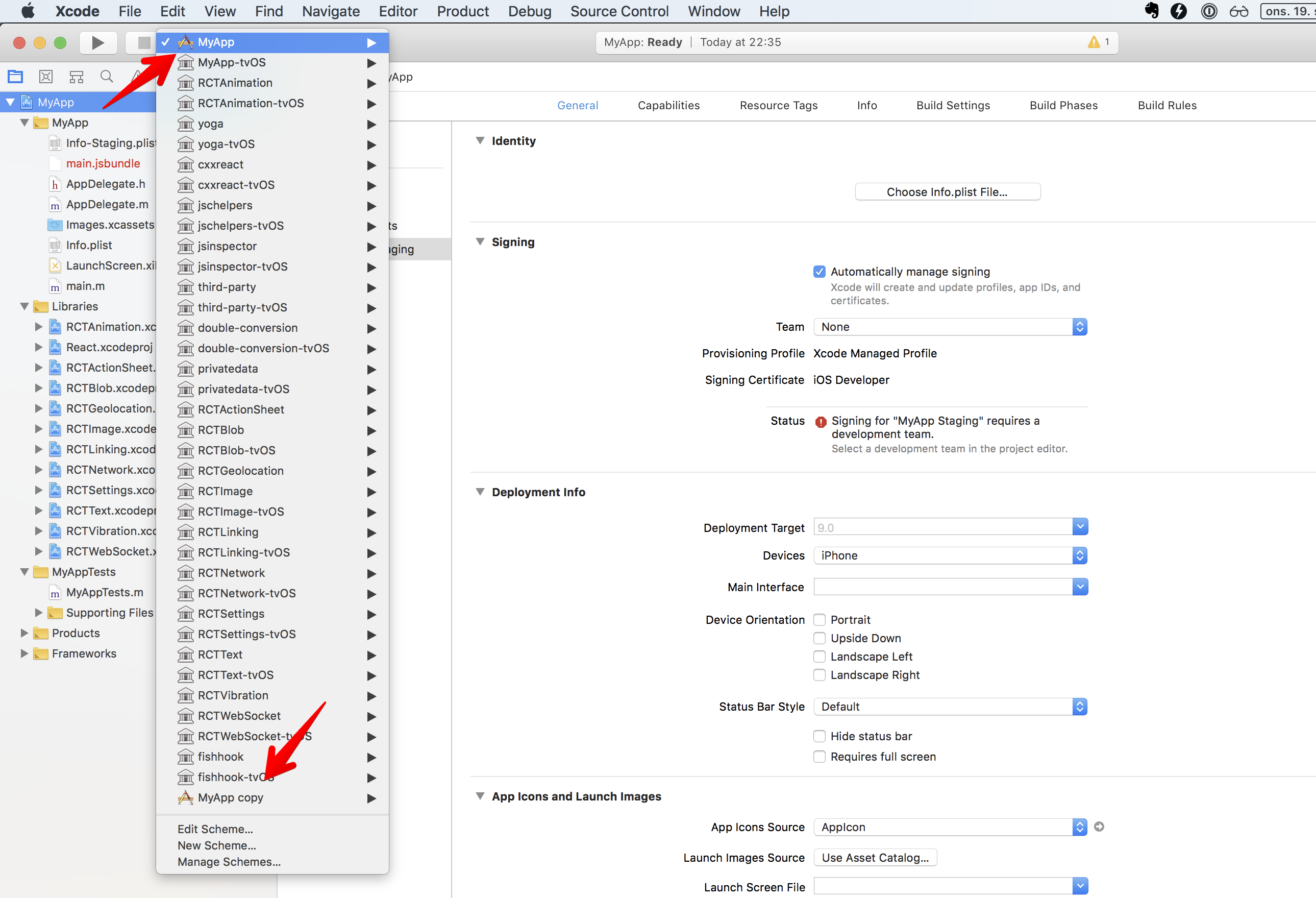

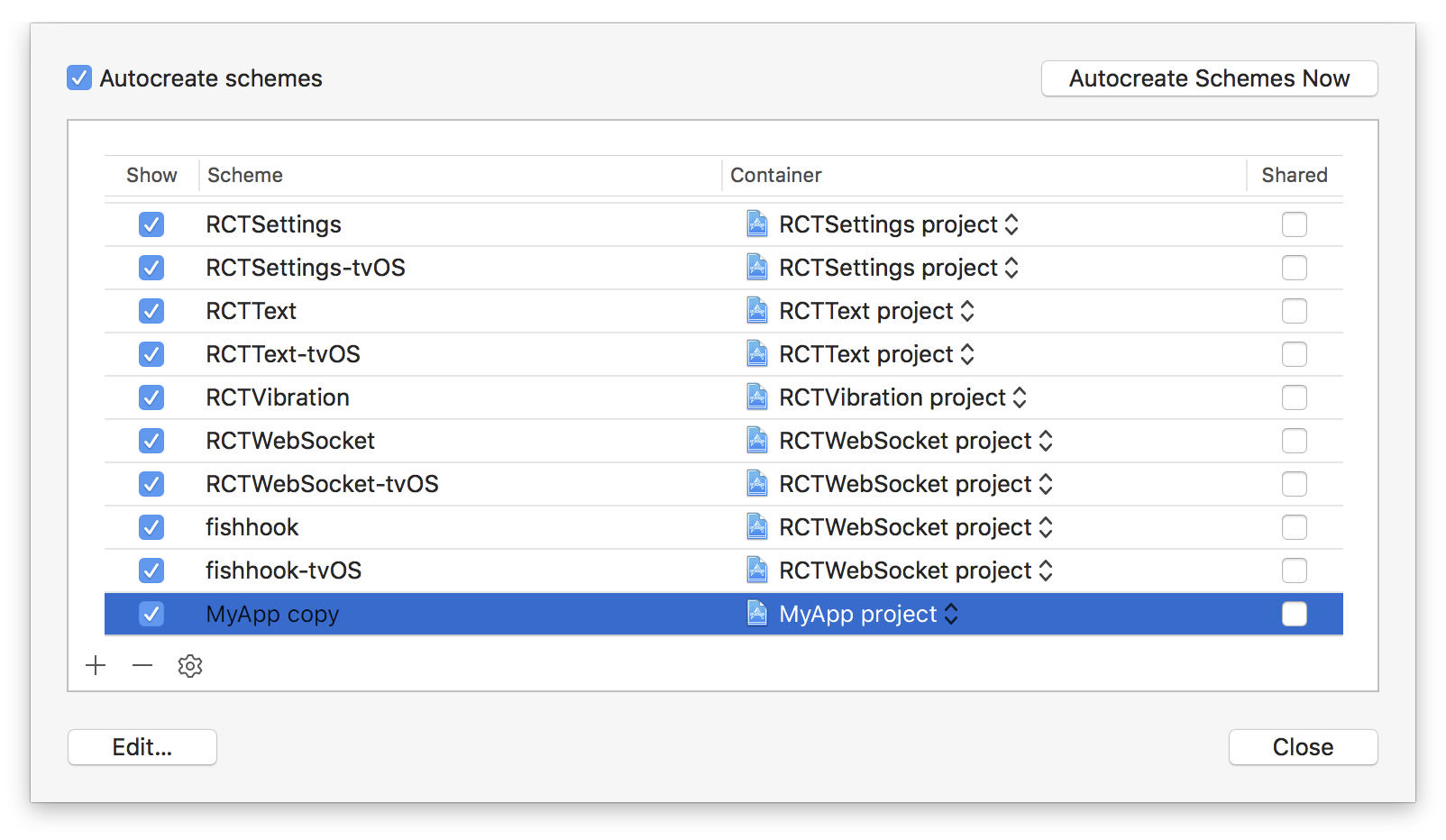

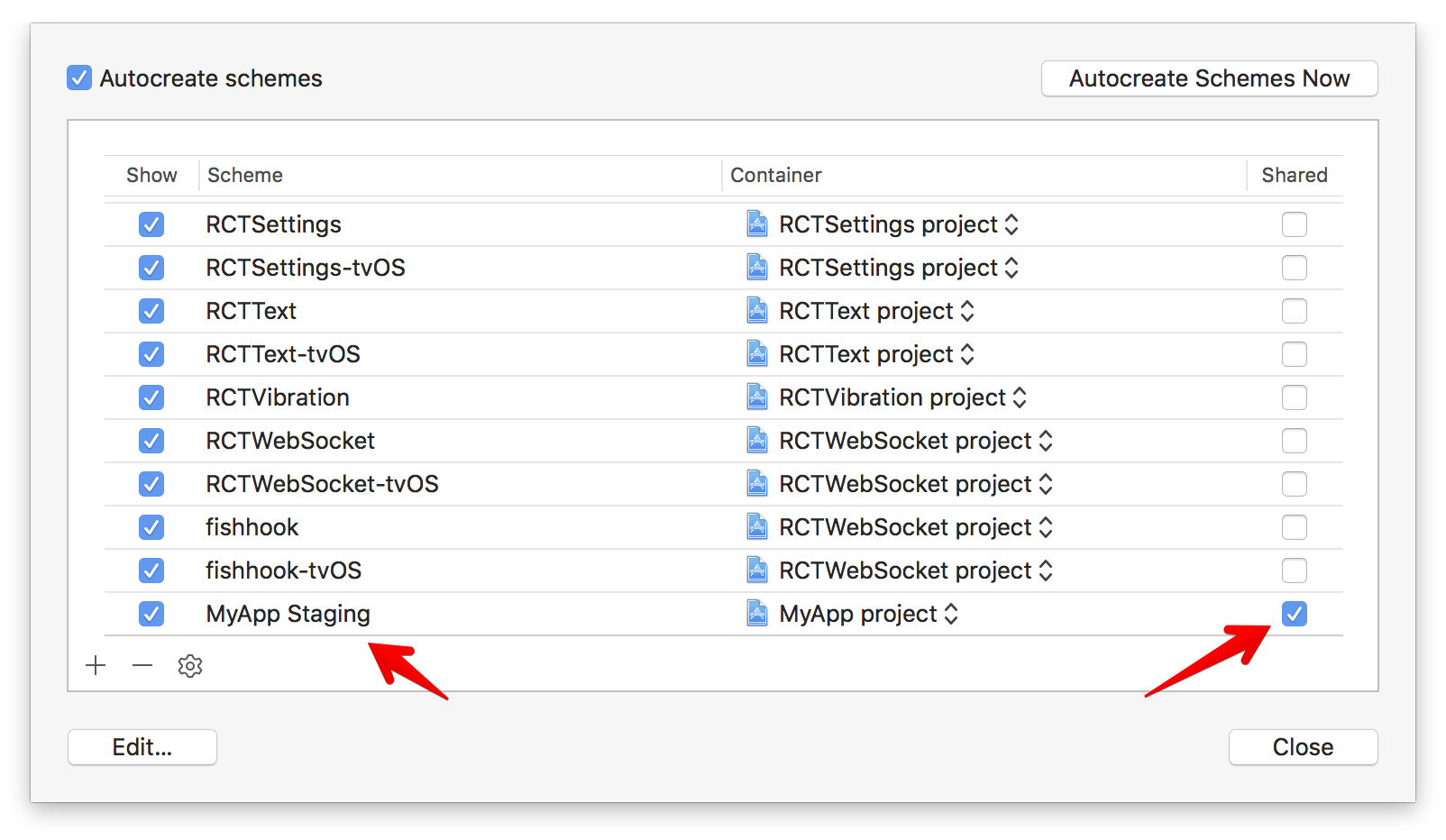

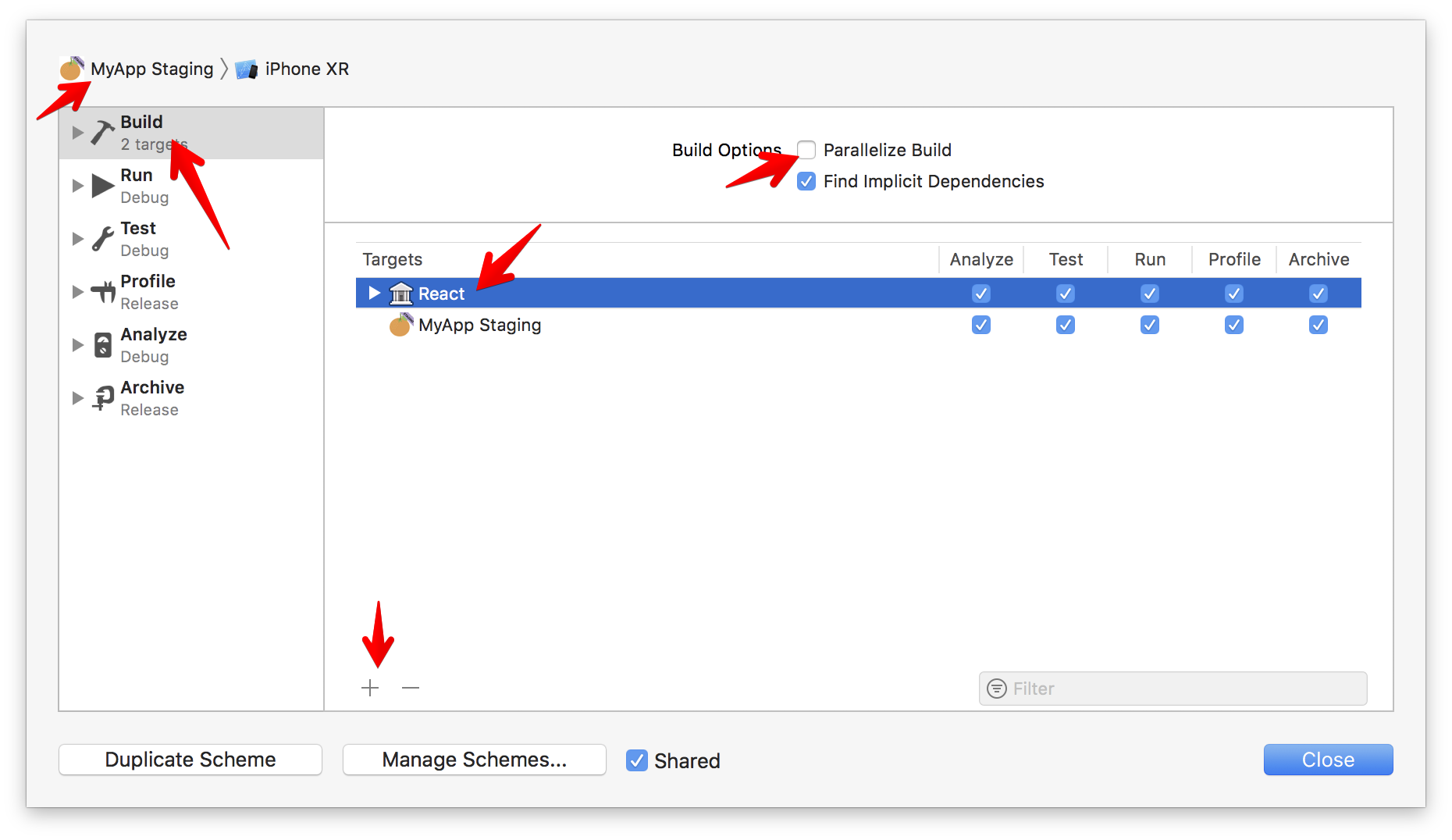

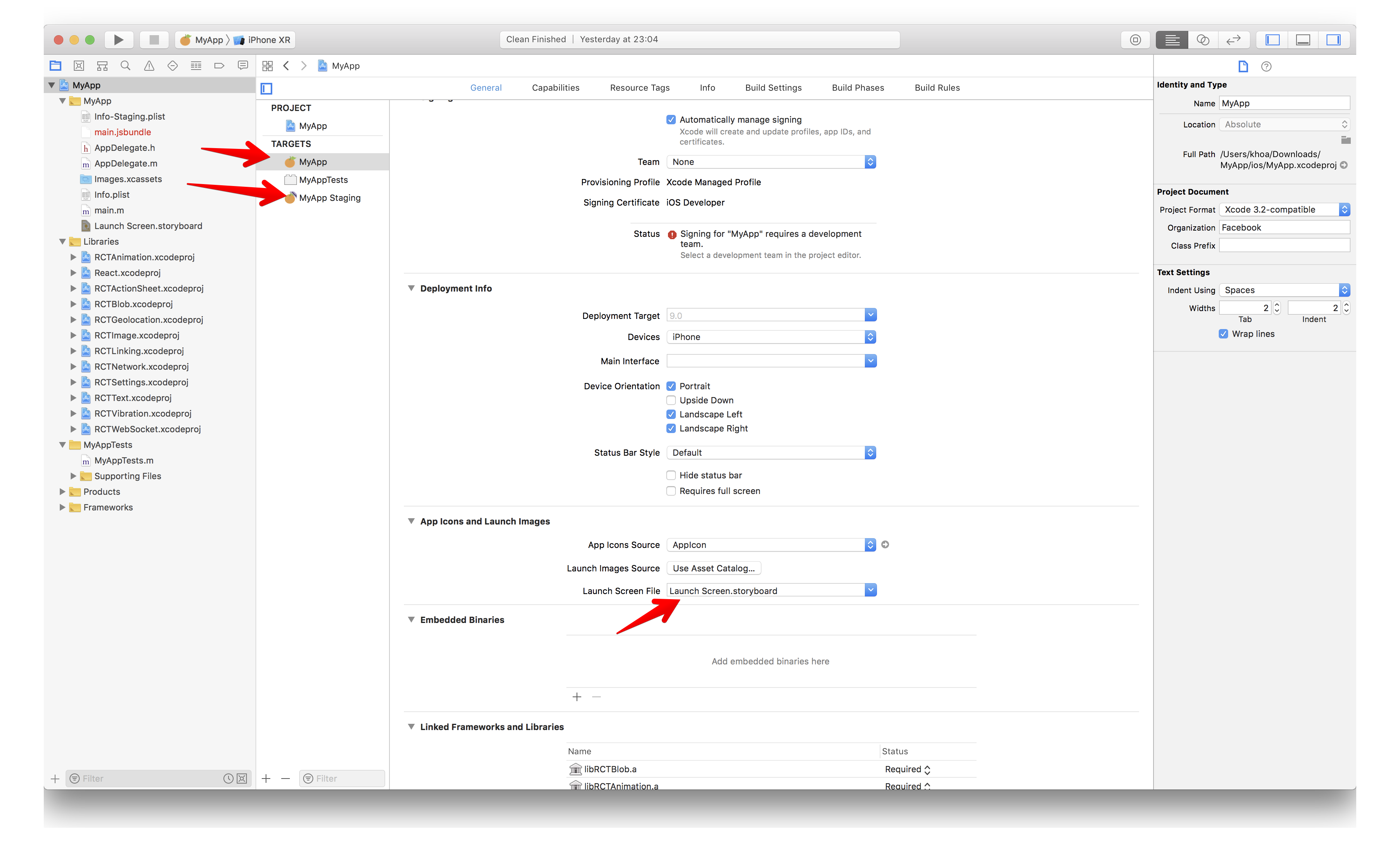

Bitrise can autogenerate schemes with the Recreate user scheme step, but it’s good to mark our scheme as Shared in Xcode. This ensures consistency between local and CI builds.

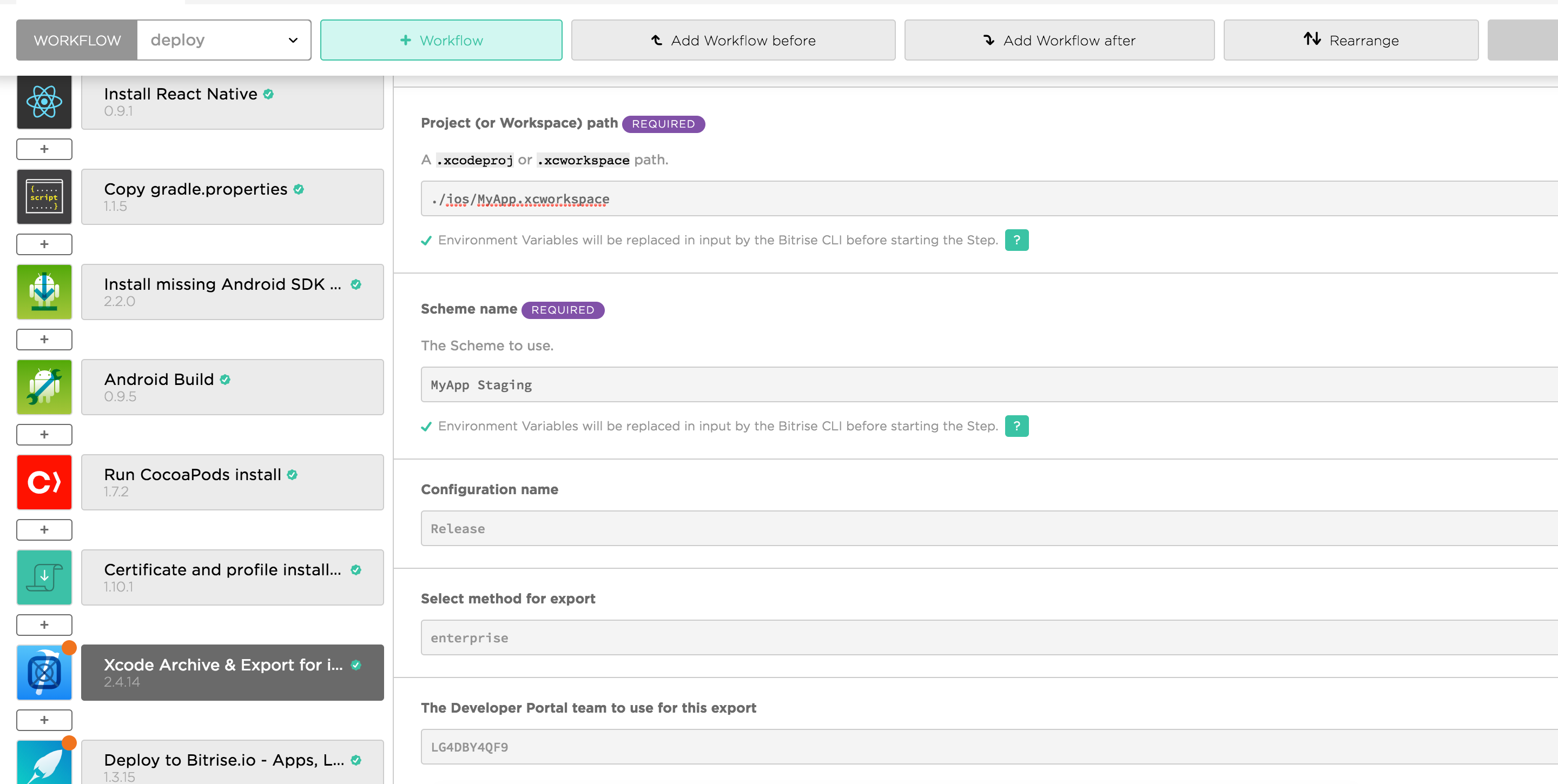

To archive the project, we need to add the Xcode Archive & Export step. For React Native, the iOS project is inside the ios folder, so we need to specify ./ios/MyApp.xcworkspace for Project path. Note that I use xcworkspace because I have CocoaPods

Right now, as of React Native 0.57.0, there is a problem running with the new build system in Xcode 10, so the quick fix is to use the legacy build system. Under the Xcode Archive & Export step there is a field for additional options for the xcodebuild call. Enter -UseModernBuildSystem=NO
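For reference, the extra options mentioned in this post end up on the xcodebuild command line. Here is a minimal sketch, assuming a hypothetical scheme named MyApp and an archive path of my own choosing. The function only prints the command, so it can be inspected on a machine without Xcode; it is not what the Bitrise step runs internally.

```shell
#!/usr/bin/env bash
# Print (do not run) an xcodebuild archive command with the extra options.
# The scheme name and archive path are hypothetical placeholders.
print_archive_command() {
  local workspace="$1" scheme="$2"
  printf '%s ' xcodebuild \
    -workspace "$workspace" \
    -scheme "$scheme" \
    -archivePath "build/${scheme}.xcarchive" \
    archive \
    -UseModernBuildSystem=NO \
    -allowProvisioningUpdates
  printf '\n'
}

print_archive_command ./ios/MyApp.xcworkspace MyApp
```

Running the printed command locally is a quick way to check whether a failure comes from the build system setting or from something specific to CI.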

You can read more in the Build System Release Notes for Xcode 10

Xcode 10 uses a new build system. The new build system provides improved reliability and build performance, and it catches project configuration problems that the legacy build system does not.

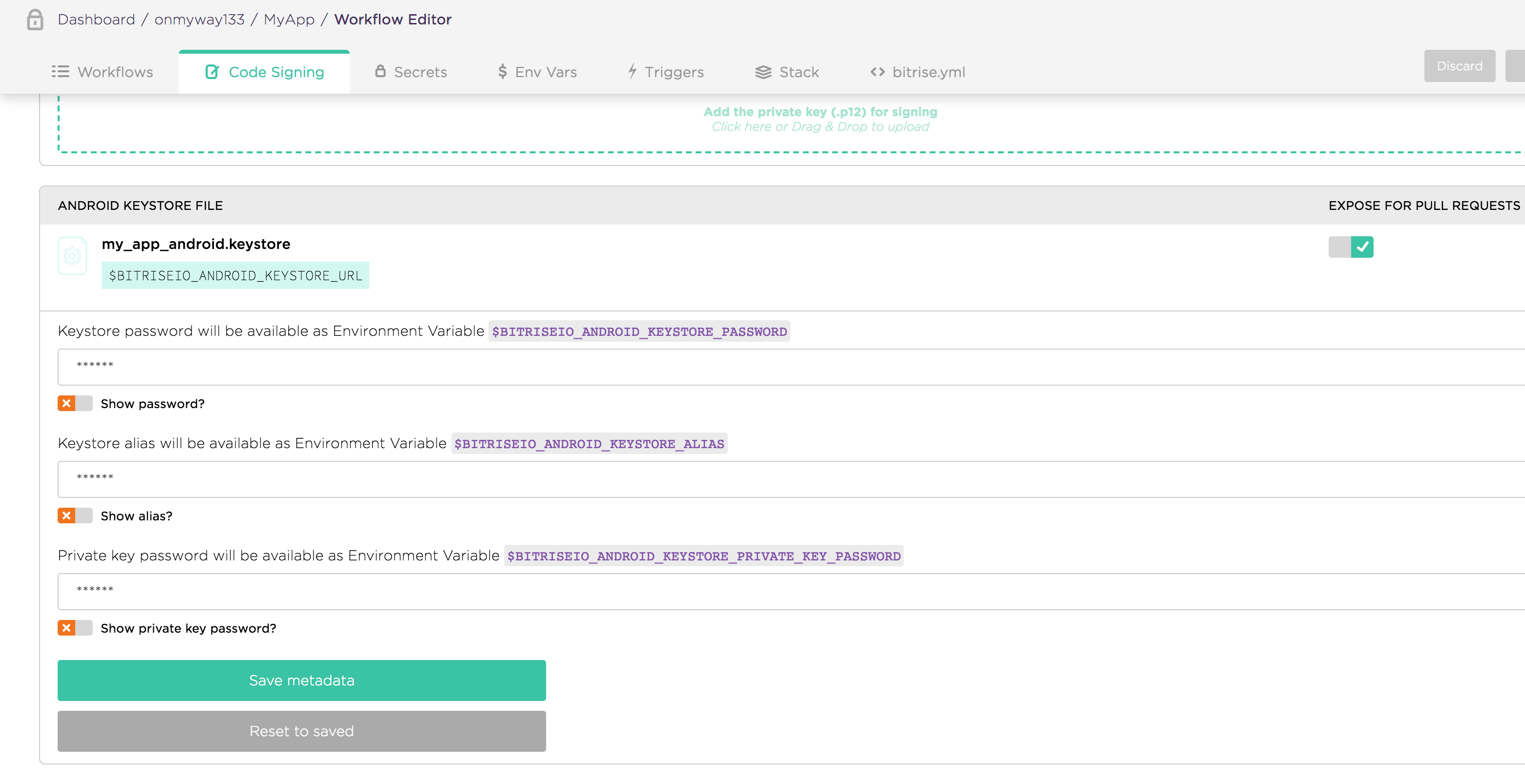

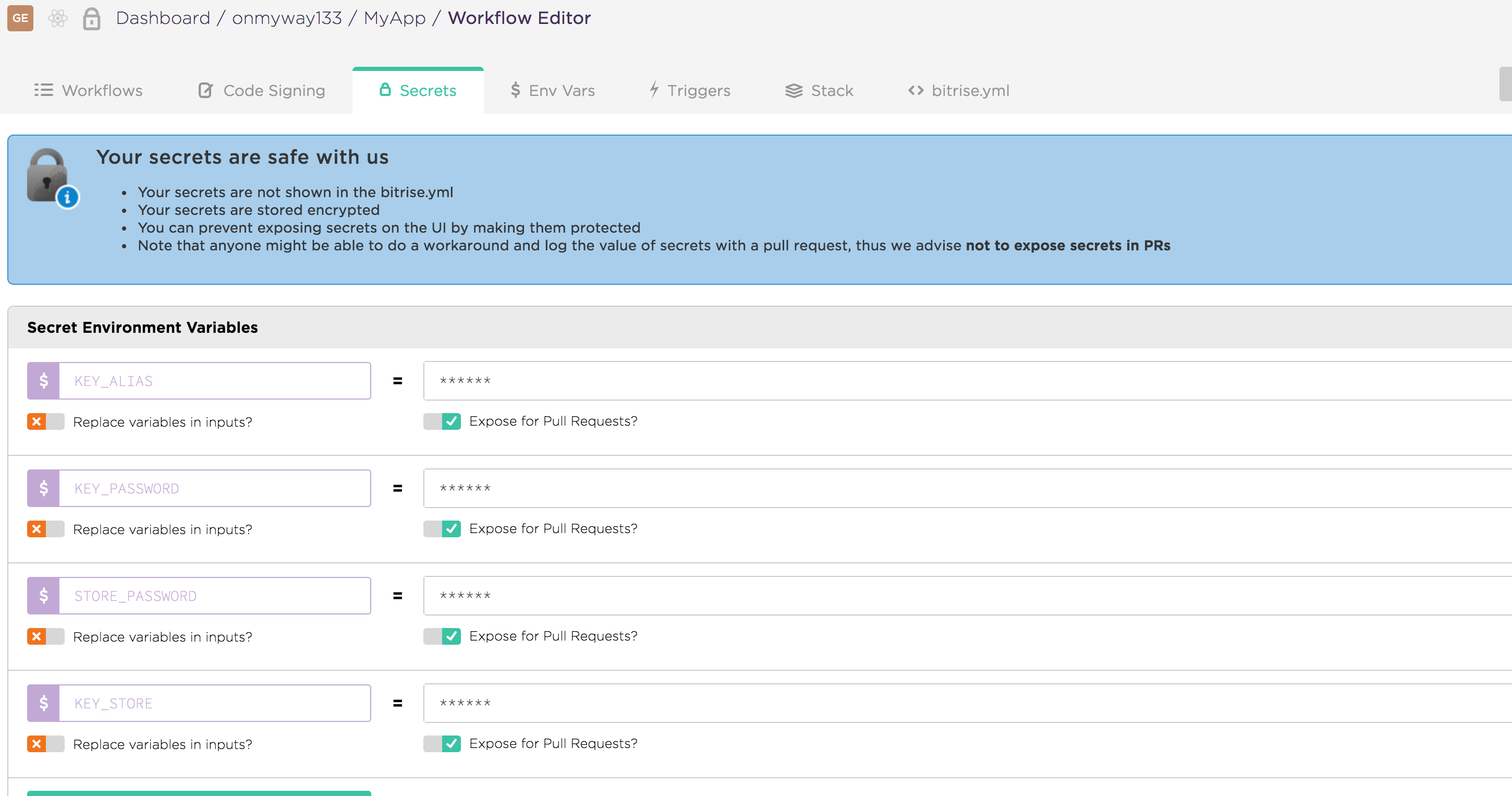

For Android, the most troublesome task is giving keystore files to Bitrise, as they are something we keep private and out of GitHub. Bitrise allows us to upload a keystore file, but we also need to specify the key alias and key password. One solution is to use Secrets and Environment variables

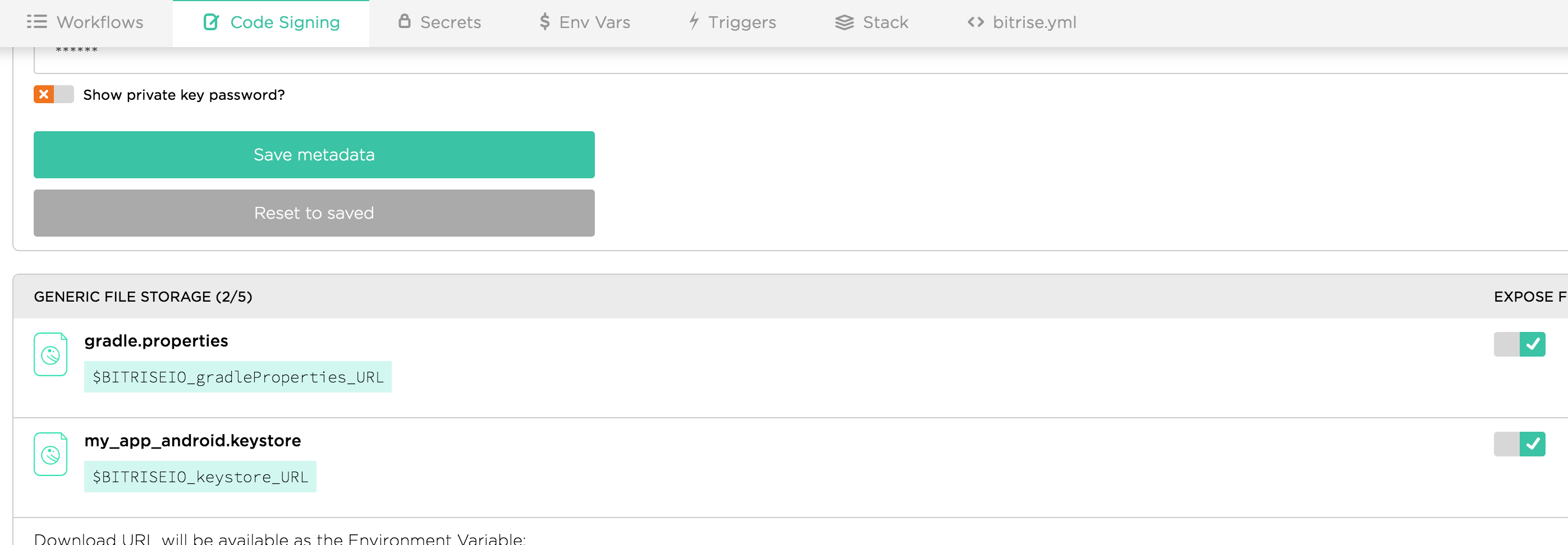

But as we often use gradle.properties to specify custom variables for Gradle, it’s convenient to upload this file. Under Workflow -> Code Signing is where we can upload keystore, as well as secured files

The generated URL variables are paths to our uploaded files. We can use curl to download them. Bitrise also has a Generic File Storage step to download all secured files.

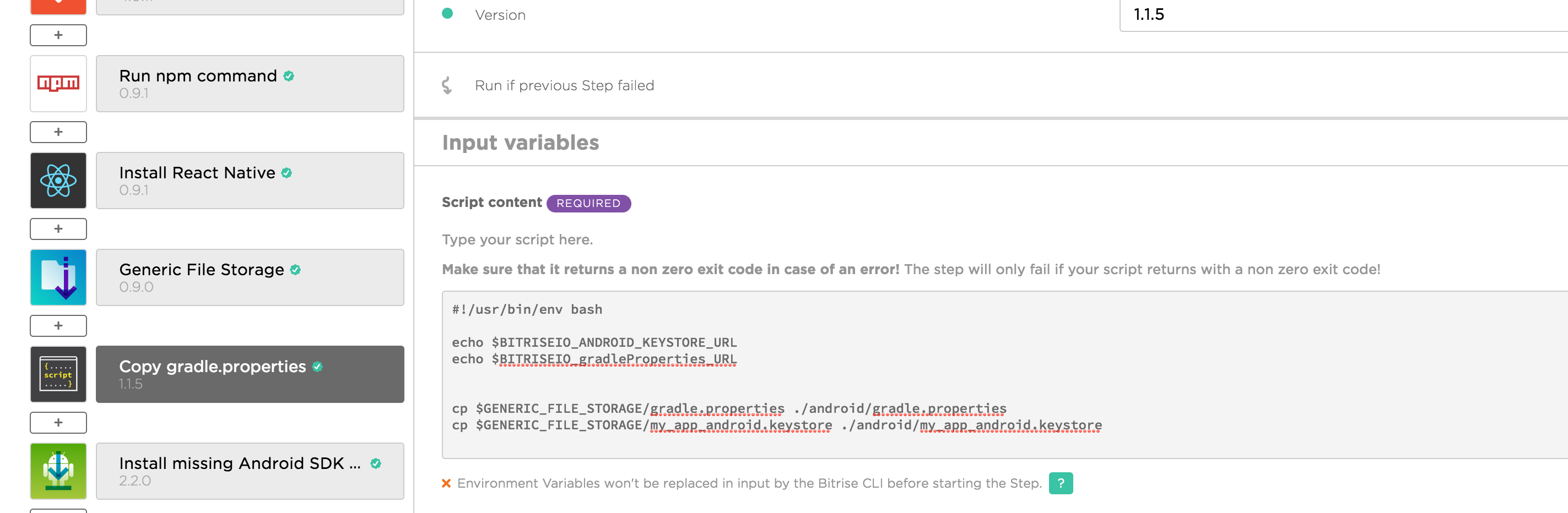

The downloaded files are located at GENERIC_FILE_STORAGE, so we add another Custom Script step to copy those files into the ./android folder

#!/usr/bin/env bash

cp "$GENERIC_FILE_STORAGE/gradle.properties" ./android/gradle.properties

cp "$GENERIC_FILE_STORAGE/my_app_android.keystore" ./android/my_app_android.keystore

Note that the file names are the same as when we uploaded them, and we use the cp command to copy them into the folder.
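If one of the secured files is missing, a plain cp fails with a message that is easy to overlook in a long build log. Below is a slightly more defensive variant of the same copy, as a sketch only: copy_secured_files is a name I made up, and the demo uses temporary folders as stand-ins for $GENERIC_FILE_STORAGE and ./android.

```shell
#!/usr/bin/env bash
# Copy each expected file from the storage folder into the target folder,
# stopping with a clear message as soon as one is missing.
# copy_secured_files is a hypothetical helper, not a Bitrise command.
copy_secured_files() {
  local src="$1" dest="$2"
  shift 2
  local name
  for name in "$@"; do
    if [ ! -f "$src/$name" ]; then
      echo "missing secured file: $src/$name" >&2
      return 1
    fi
    cp "$src/$name" "$dest/$name"
  done
}

# Demo with throwaway folders standing in for the real locations.
storage="$(mktemp -d)"
android="$(mktemp -d)"
touch "$storage/gradle.properties" "$storage/my_app_android.keystore"
copy_secured_files "$storage" "$android" gradle.properties my_app_android.keystore
ls "$android"
```

In the Custom Script step the call would become copy_secured_files "$GENERIC_FILE_STORAGE" ./android gradle.properties my_app_android.keystore.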

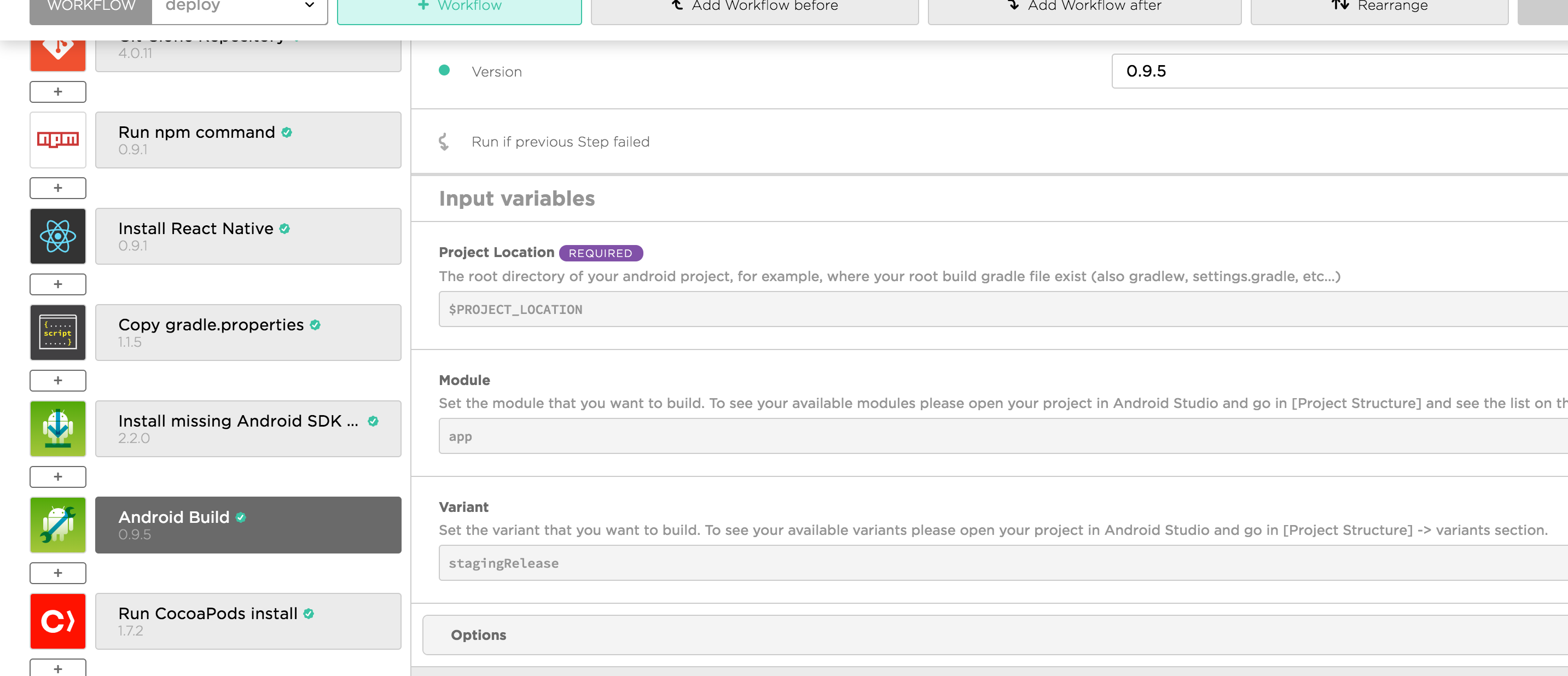

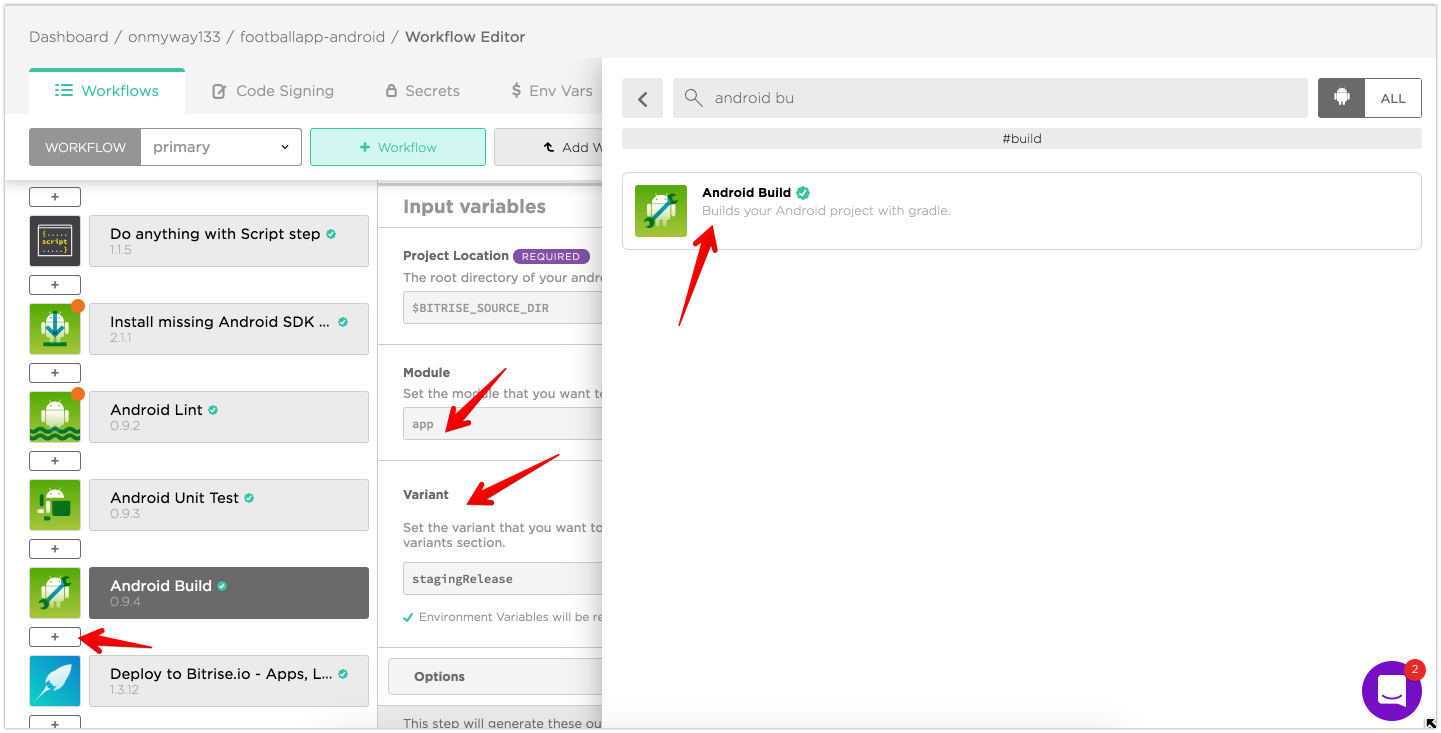

By default, Bitrise has 2 workflows: primary for quick start, and deploy for archiving and deploying. In the deploy workflow there is an Android Build step. We can override the module and build variant here.

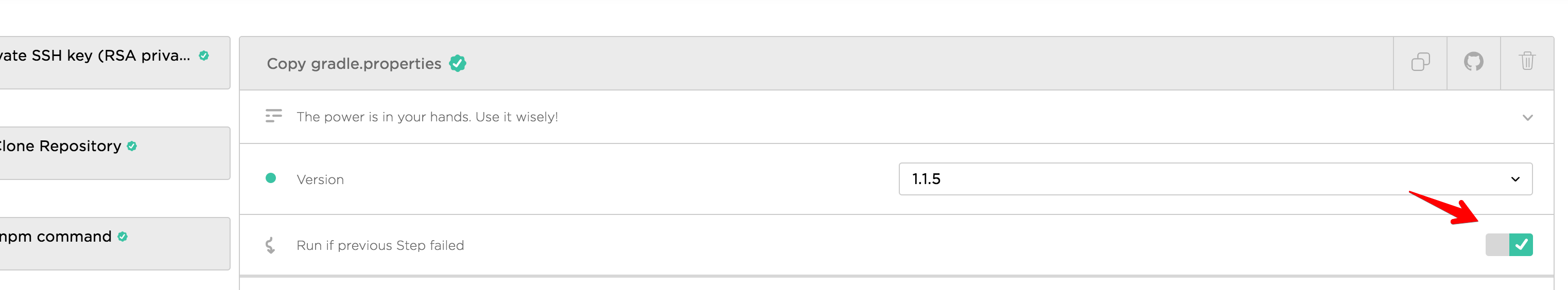

In Bitrise we can mark a step to run even if a previous step failed. I use this to make sure iOS builds independently from Android

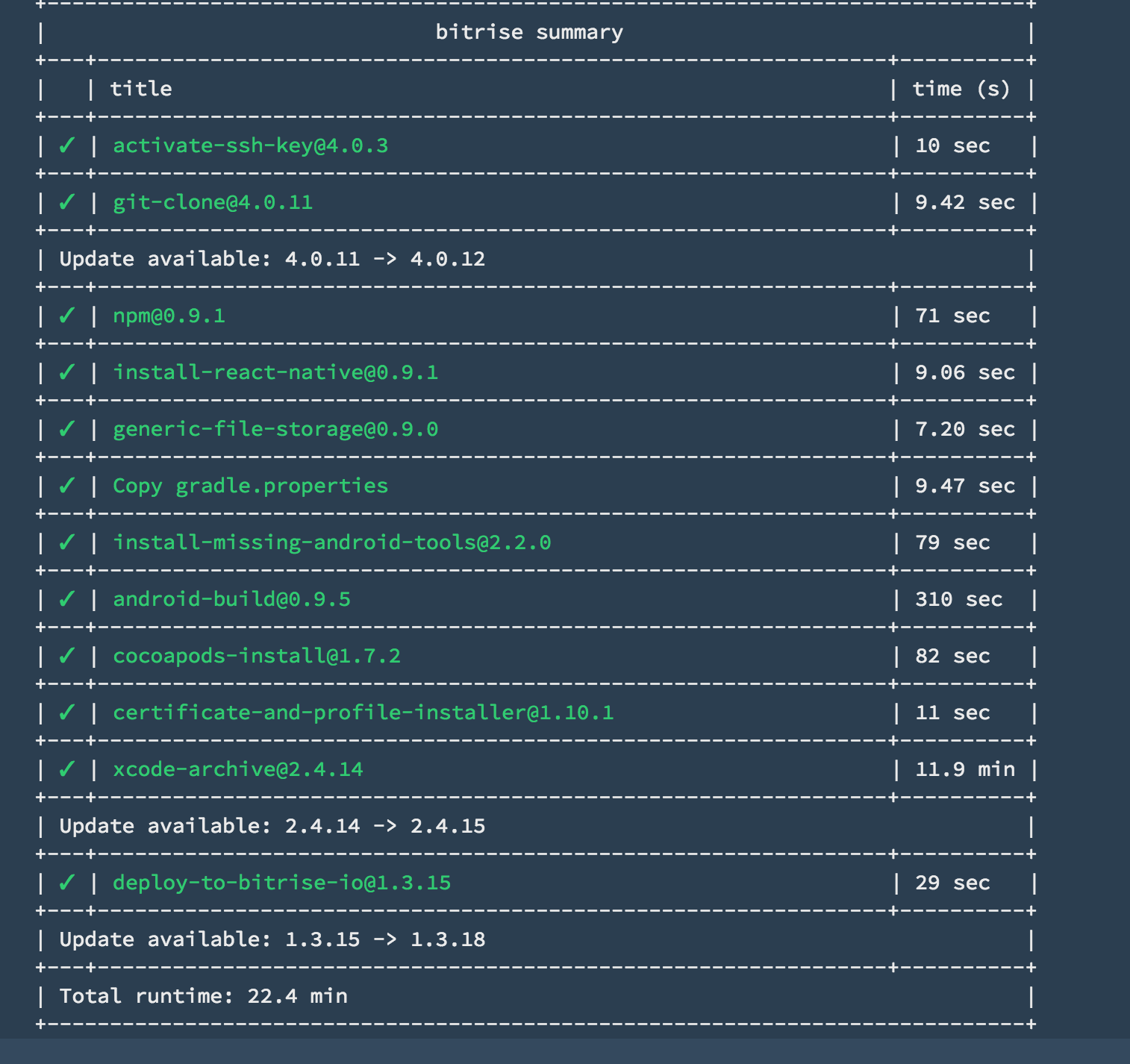

If both the iOS and Android builds are successful, we should see the summary below

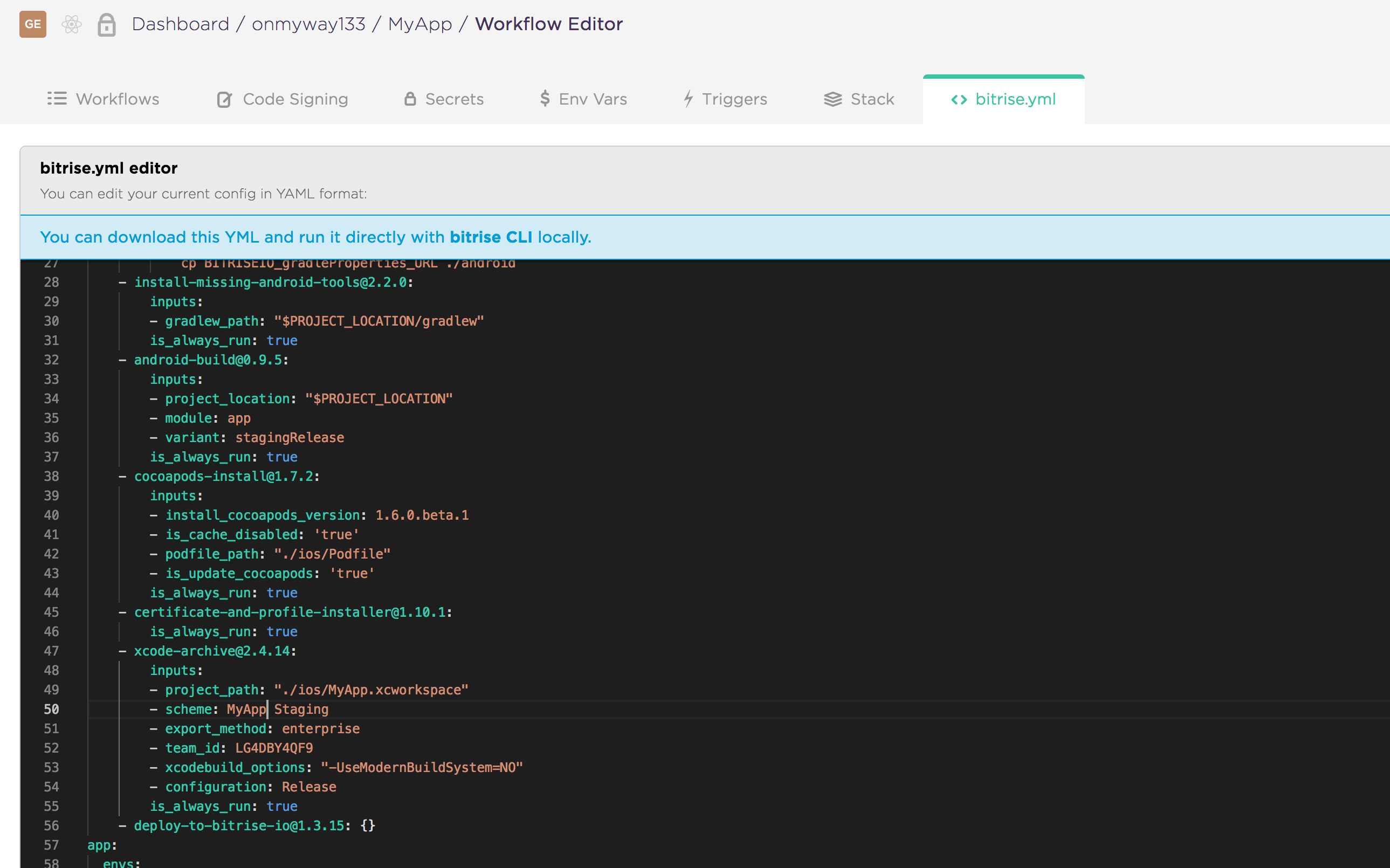

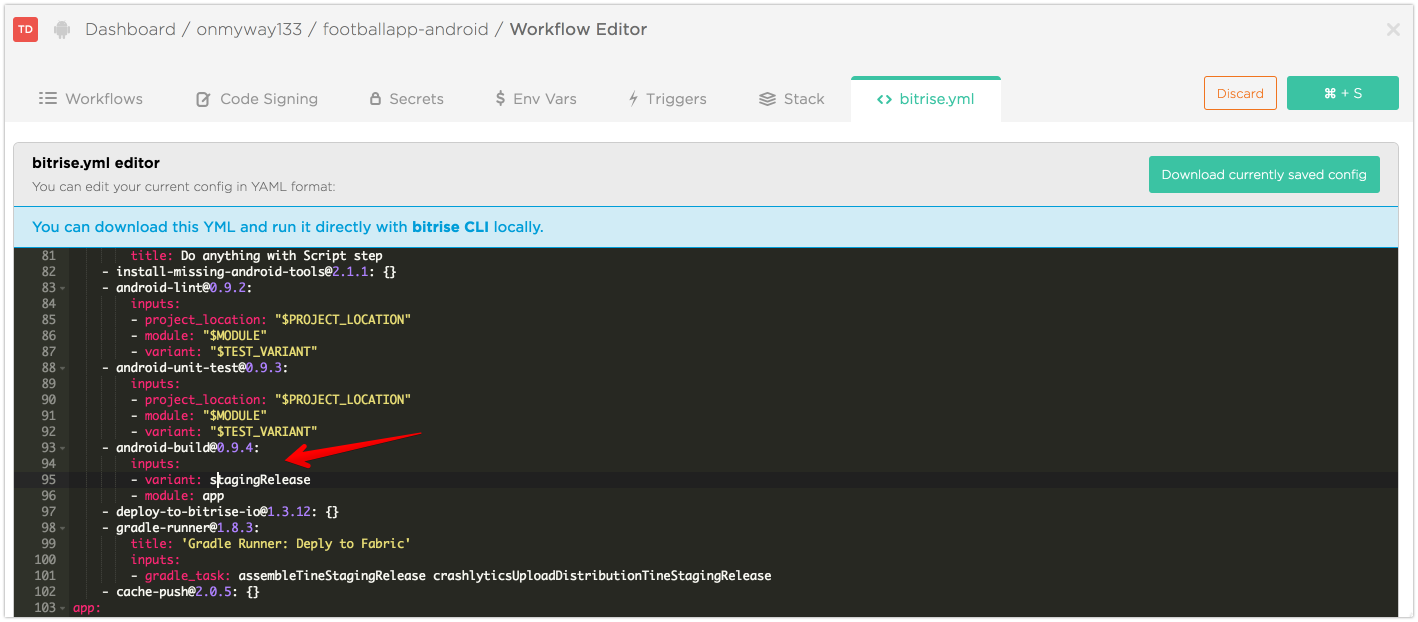

Although Bitrise has a good UI to configure workflows and steps, we have fine grained control over every parameter in the bitrise.yml file. It’s a good place to reason about the build once we are familiar with all the steps.

I hope you learn something and have a happy deploy. Since you are here, the articles below may be of interest

Issue #275

Original post https://codeburst.io/making-unity-games-in-pure-c-2b1723cdc71f

As an iOS engineer, I ditched Storyboard to avoid all possible hassles and write UI components in pure Swift code. I did XAML in Visual Studio for Windows Phone apps and XML in Android Studio for Android apps some time ago, and I had a good experience. However, since I’m used to writing things in code, I like to do the same for Unity games, although the Unity editor and simulator are pretty good. Besides being comfortable declaring things in code, I find that code is easy to diff and organise. I also learn more about how components work, because in the Unity editor most of the important components and properties are already wired and set up for us, so there are some very obvious things that we never get to know.

All the things we can do in the editor, we can express in code. Things like setting up components, transform anchor, animation, sound, … are all feasible in code, and you only need to use the correct namespace. There’s lots of info at Welcome to the Unity Scripting Reference. Unity 2018 comes with Visual Studio Community, so it’s pretty comfortable to write code.

I would be extremely happy if Unity supports Kotlin or Swift, but for now only Javascript and C# are supported. I’m also a huge fan of pure Javascript, but I choose C# because of type safety, and also because C# was the language I studied at university for XNA and ASP.NET projects.

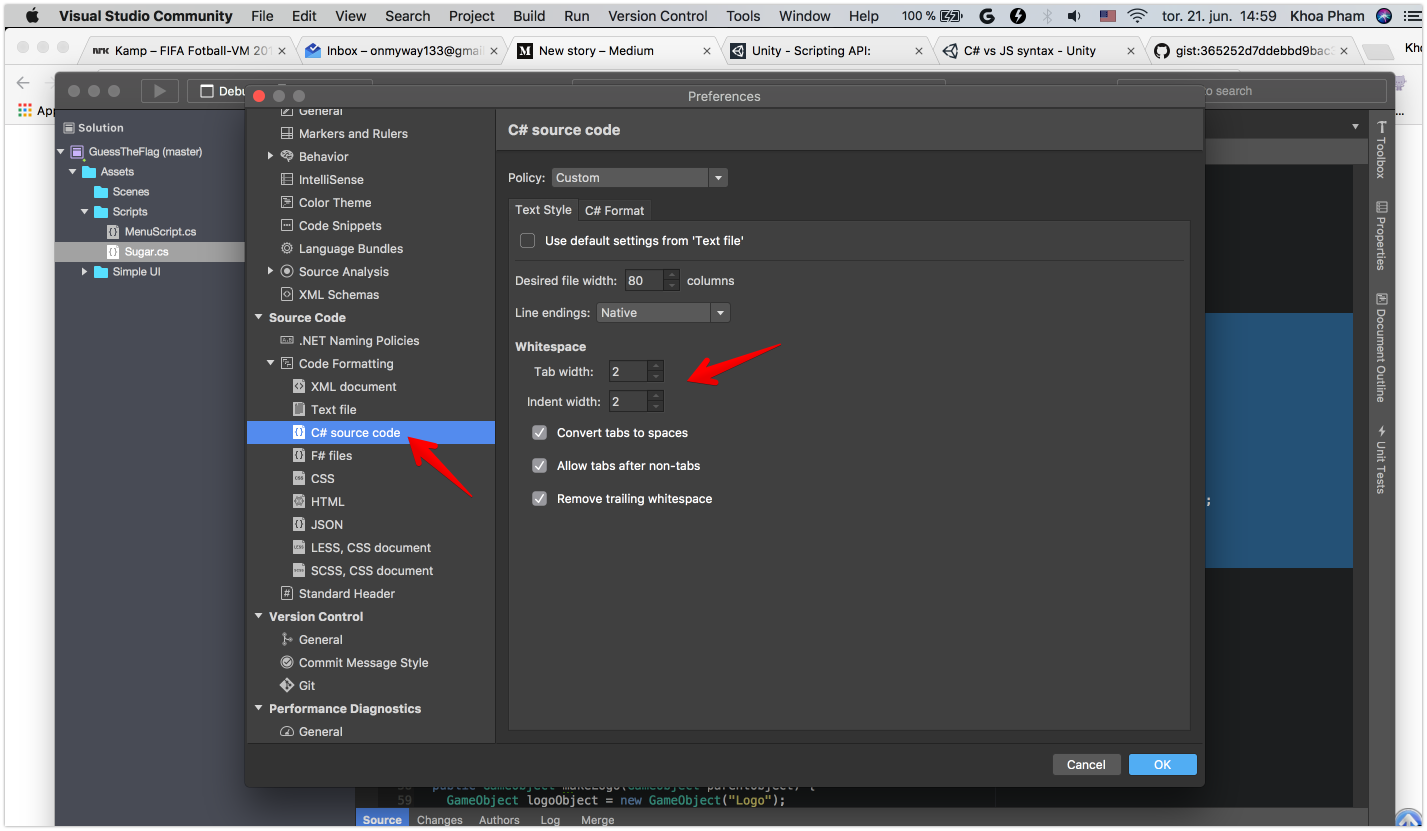

If you like 2 spaces indentation like me, you can go to Preferences in Visual Studio to change the editor settings

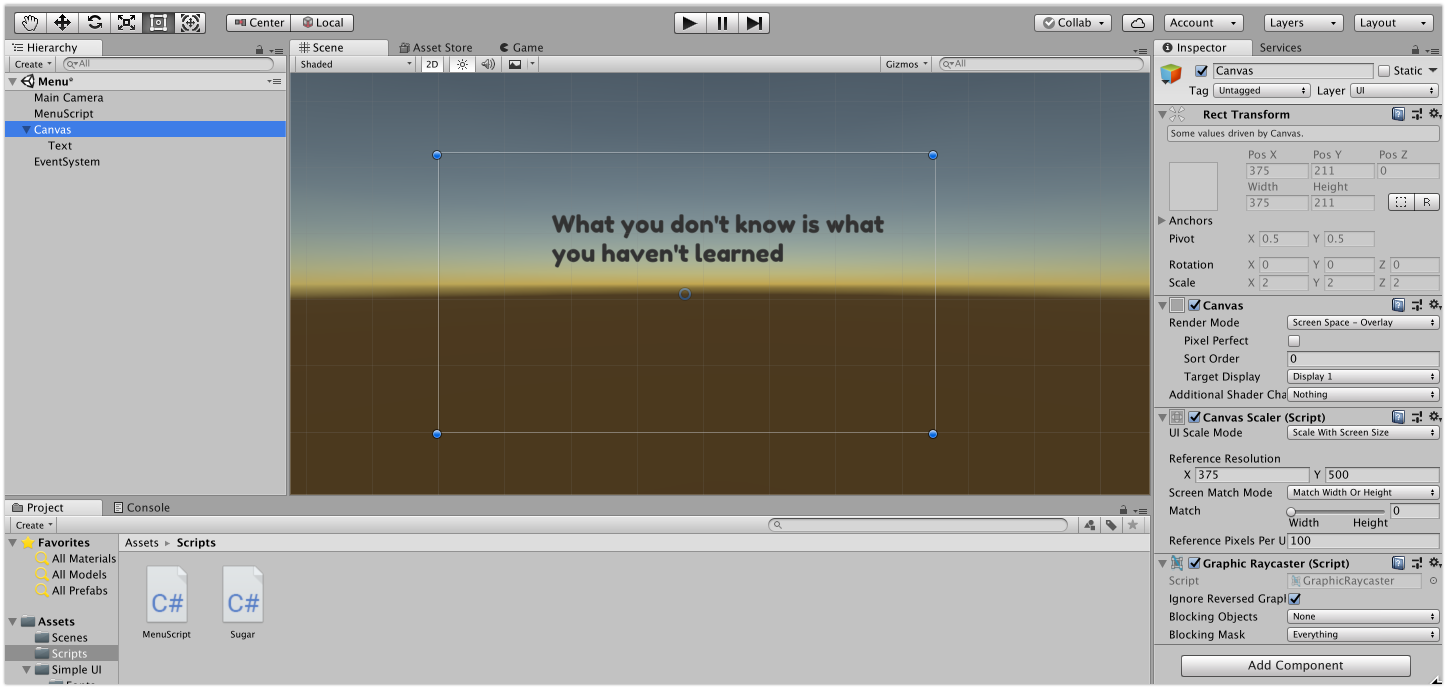

In the Unity editor, try dragging an arbitrary UI element onto the screen; you can see that a Canvas and an EventSystem are also created. These are GameObjects that have a bunch of predefined Components.

If we were to write this in code, we just need to follow what is on the screen. In the beginning, we must use the editor to learn objects and properties, but later, once we are familiar with Unity and its many GameObjects, we can just code. Bear with me, it can be a hassle with code at first, but you will surely learn a lot.

I usually organise reusable code into files, so let’s create a C# file and name it Sugar. For EventSystem and UI we need the UnityEngine.EventSystems and UnityEngine.UI namespaces.

Here’s how to create EventSystem

1 | public class Sugar { |

and Canvas

1 | public GameObject makeCanvas() { |

and how to make some common UI elements

1 | public GameObject makeBackground(GameObject canvasObject) { |

Now create an empty GameObject in your Scene and add a Script component to this GameObject. You can reference code in your Sugar.cs without any problem

1 | public class MenuScript : MonoBehaviour { |

Creating UI elements from scripting: another way is to use Prefabs and Instantiate function in C# to easily reference and construct elements

2D Game Creation: official Unity tutorials for making 2D games.

Issue #274

Original post https://hackernoon.com/20-recommended-utility-apps-for-macos-in-2018-ea494b4db72b

Depending on the need, we have different apps on the Mac. As someone who works mostly in development, below are my indispensable apps. They are like suits to Tony Stark. Since I love open source apps, they have higher priority in the list.

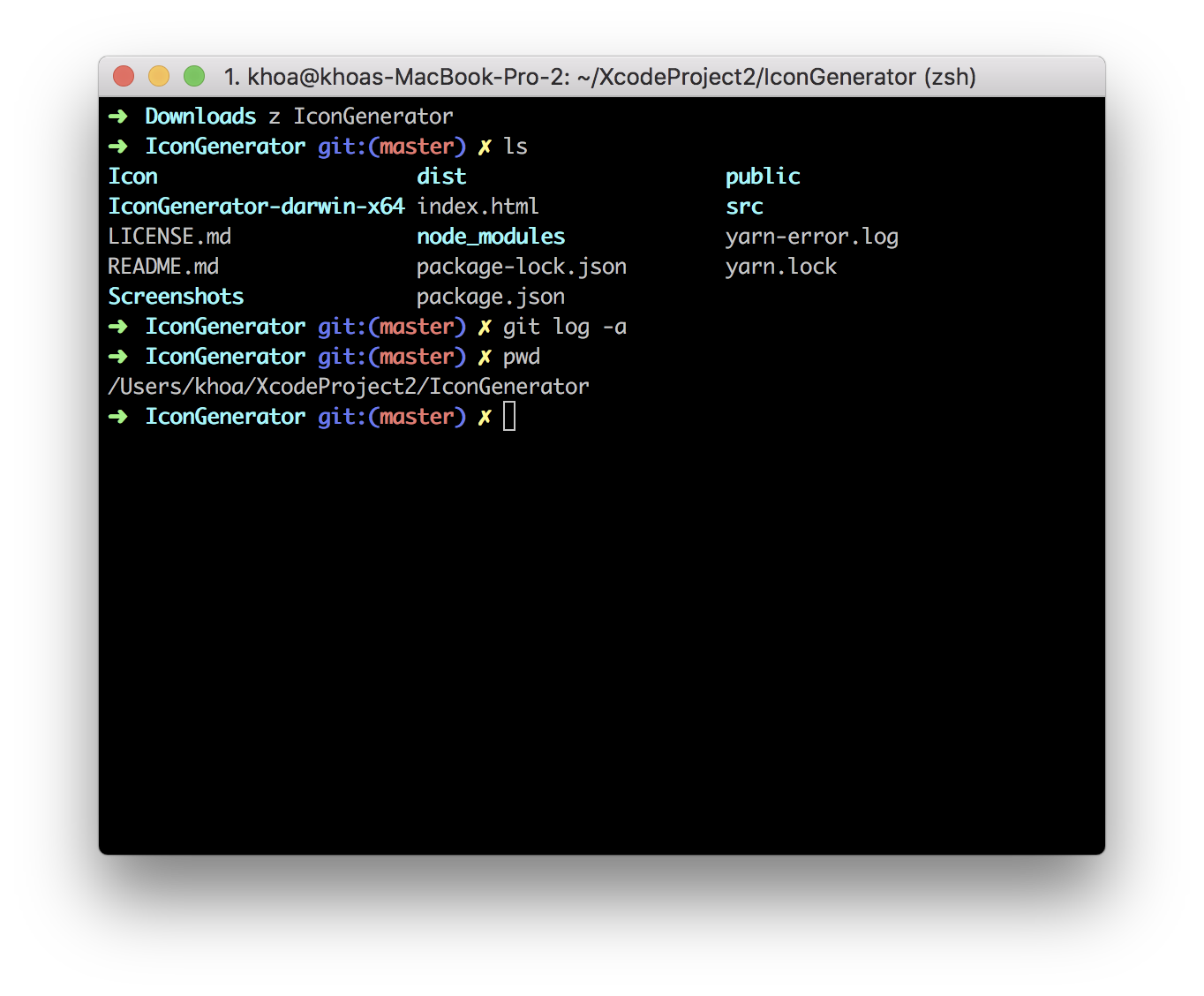

iTerm2 is a replacement for Terminal and the successor to iTerm. It works on Macs with macOS 10.10 or newer. iTerm2 brings the terminal into the modern age with features you never knew you always wanted.

iTerm2 has good integration with tmux and supports Split Panes

iTerm2 allows you to divide a tab into many rectangular “panes”, each of which is a different terminal session. The shortcuts cmd-d and cmd-shift-d divide an existing session vertically or horizontally, respectively. You can navigate among split panes with cmd-opt-arrow or cmd-[ and cmd-]. You can “maximize” the current pane, hiding all others in that tab, with cmd-shift-enter. Pressing the shortcut again restores the hidden panes.

There’s the_silver_searcher with ag command to quickly search for files

A delightful community-driven (with 1,200+ contributors) framework for managing your zsh configuration. Includes 200+ optional plugins (rails, git, OSX, hub, capistrano, brew, ant, php, python, etc), over 140 themes to spice up your morning, and an auto-update tool so that makes it easy to keep up with the latest updates from the community.

I use z shell with oh-my-zsh plugins. I also use zsh-autocompletions to have autocompletion like fish shell and z to track and quickly navigate to the most used directories.

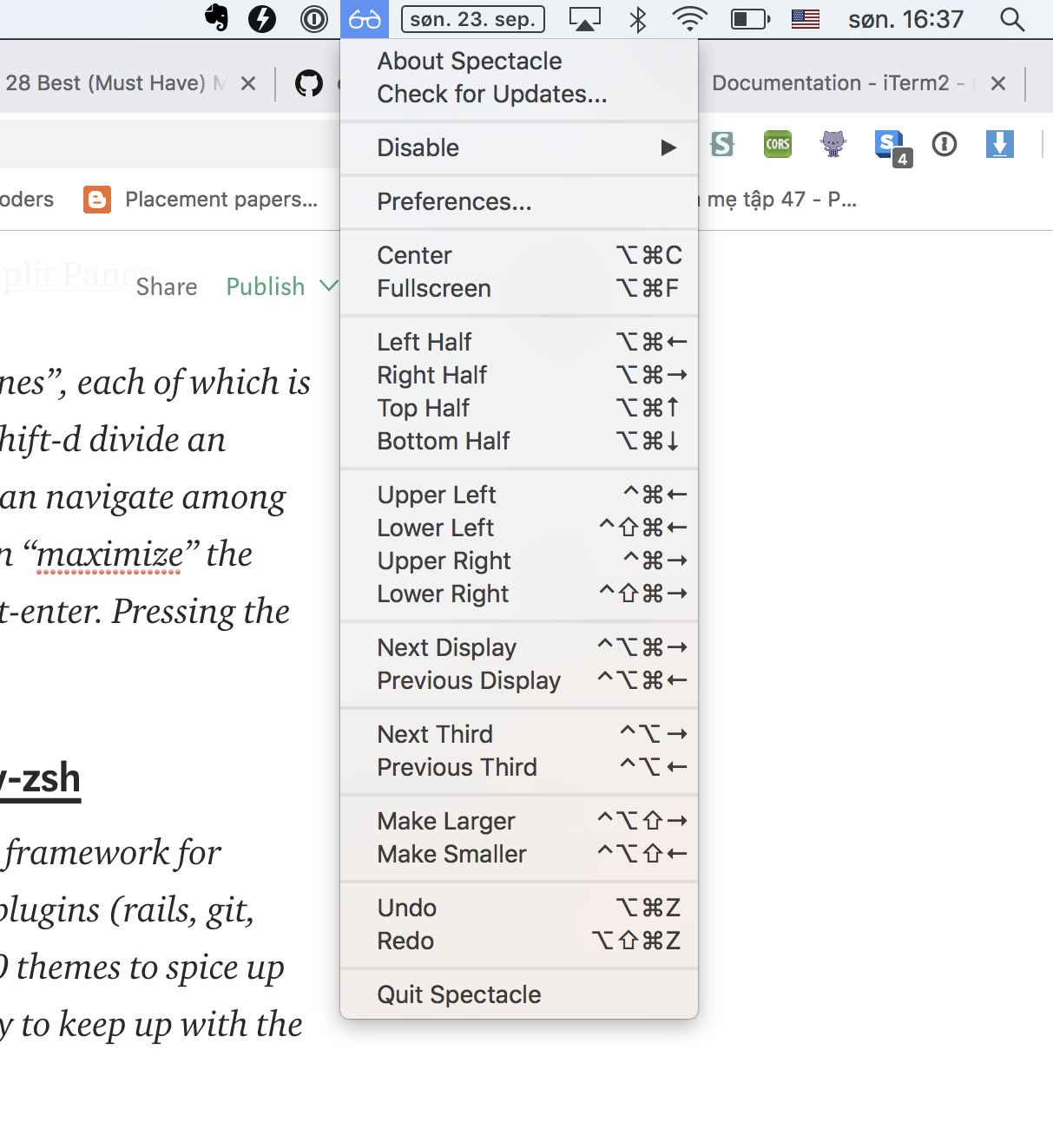

Spectacle allows you to organize your windows without using a mouse.

With Spectacle, I can organise windows easily with just Cmd+Option+F or Cmd+Option+Left

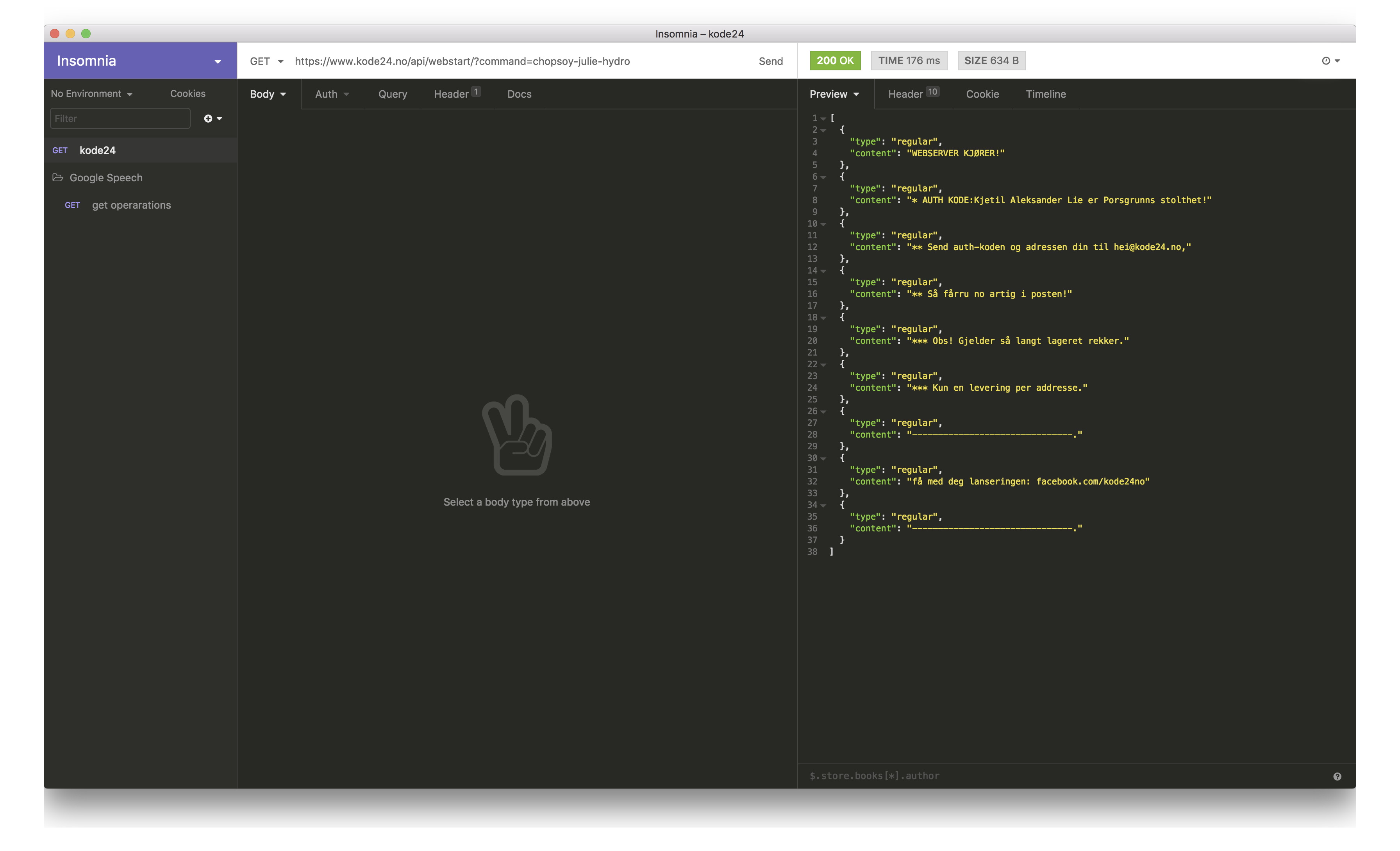

Insomnia is a cross-platform REST client, built on top of Electron.

Whether you like electron.js apps or not, this is a great tool for testing REST requests

VS Code is a new type of tool that combines the simplicity of a code editor with what developers need for their core edit-build-debug cycle. Code provides comprehensive editing and debugging support, an extensibility model, and lightweight integration with existing tools.

This seems to be the most popular editor for front end development, and for many other things. There’s a bunch of extensions that take the experience to a new level.

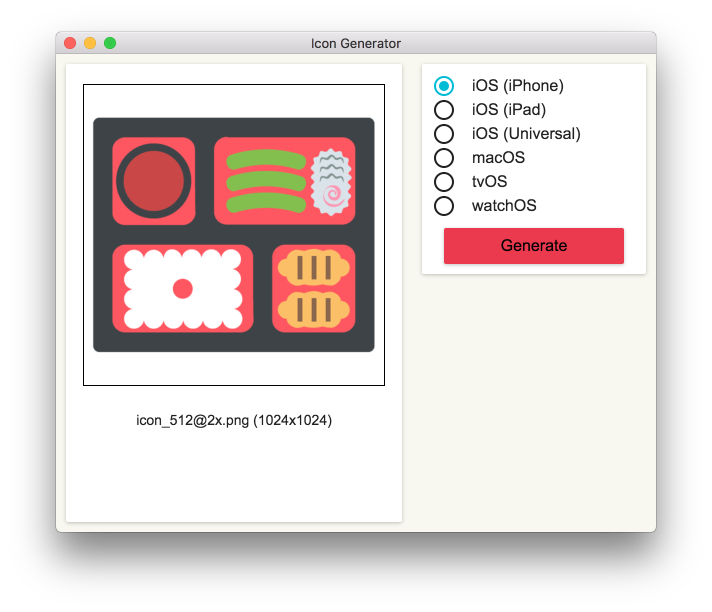

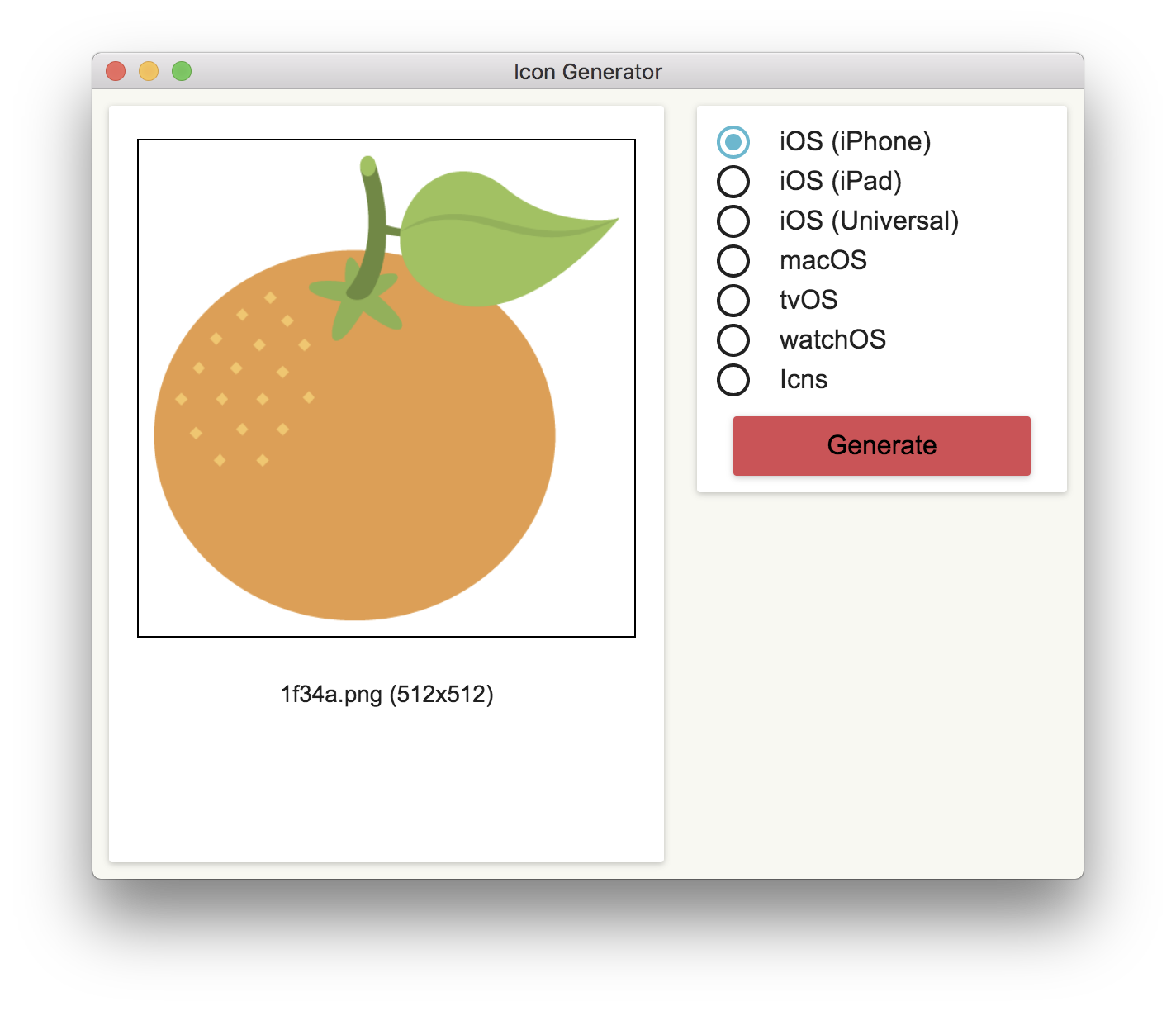

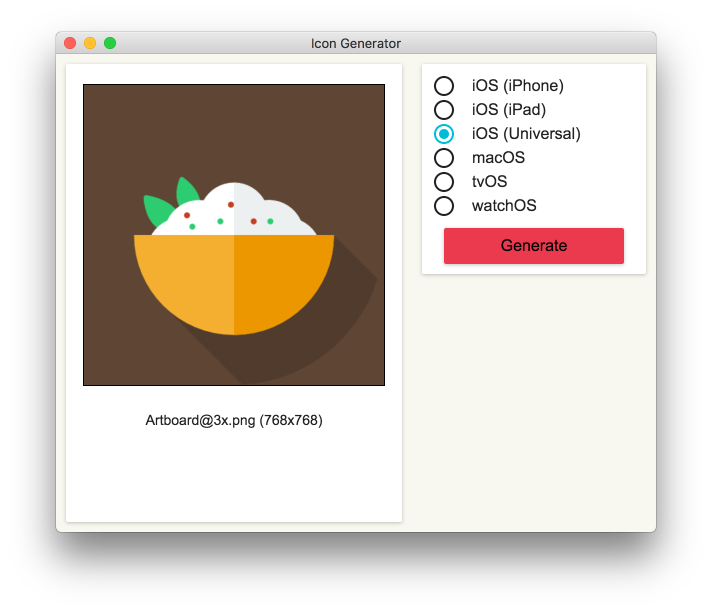

Built by me. When developing iOS, Android and macOS applications, I need a quick way to generate icons in different sizes. You can simply drag the generated asset into Xcode and that’s it.

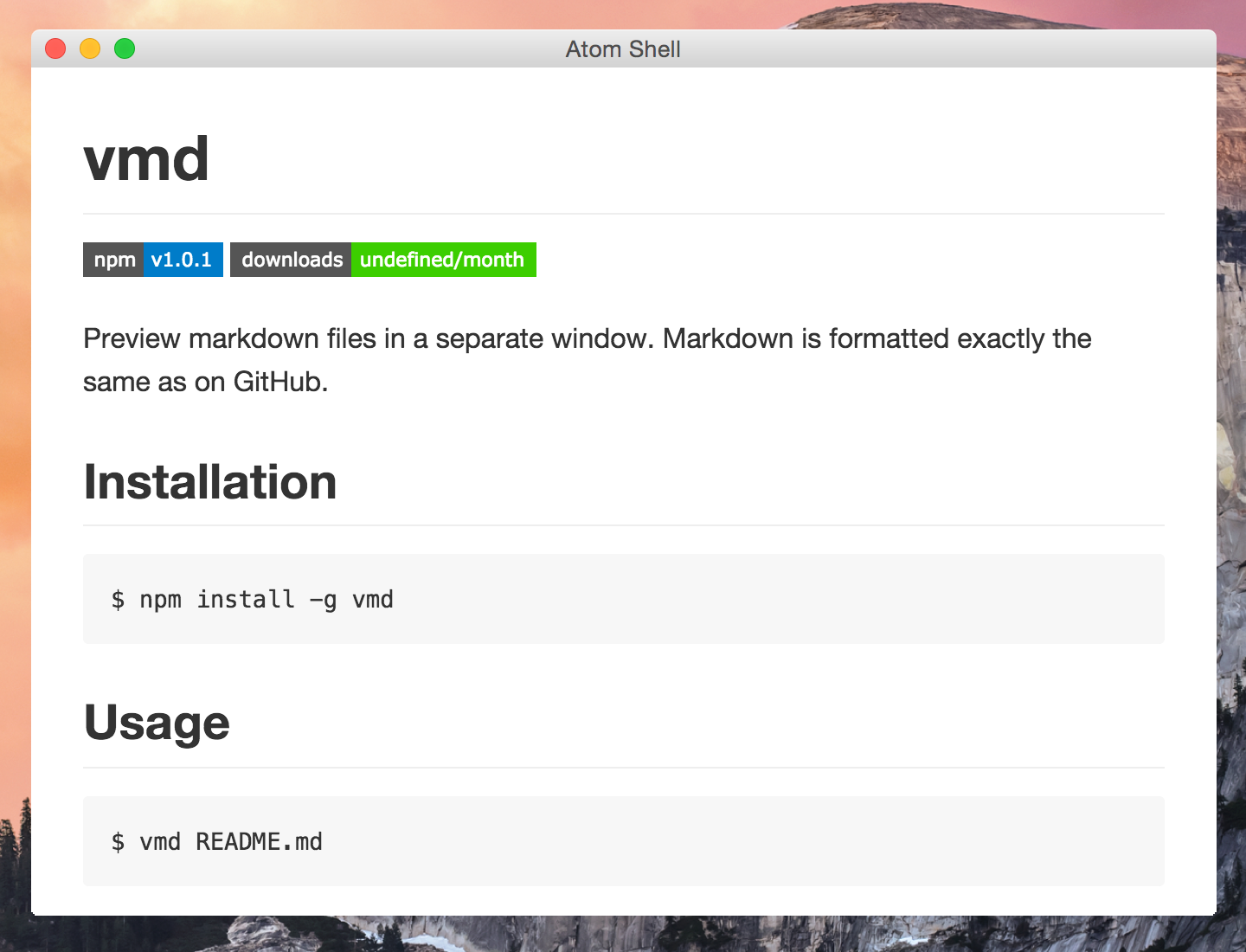

Preview markdown files in a separate window. Markdown is formatted exactly the same as on GitHub.

A minimal but complete colorpicker desktop app

I used to use Sip but I often get the problem of losing focus.

I built this as a native macOS app to capture screen and save to gif file. It works like Licecap but open source. There’s also an open source tool called kap that is slick.

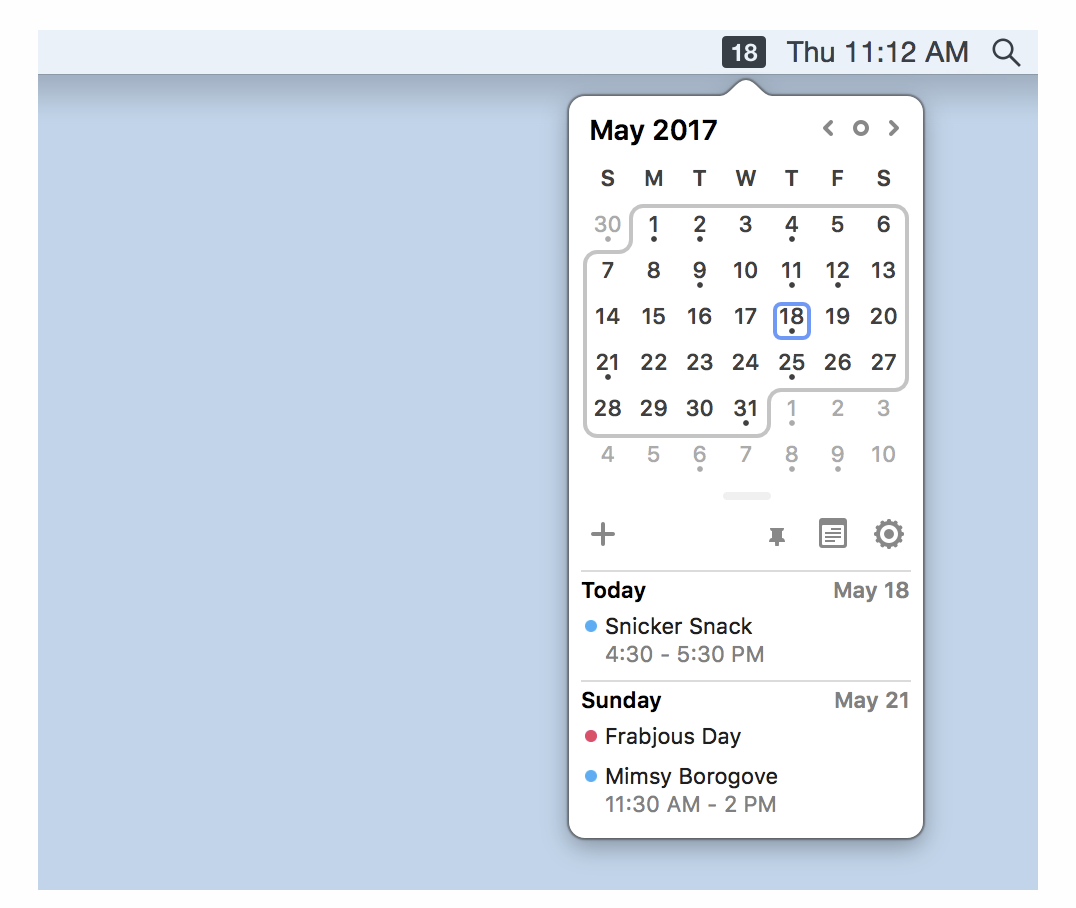

Itsycal is a tiny calendar for your Mac’s menu bar.

The app is minimal and works very well. It can show calendars for the accounts integrated on the Mac.

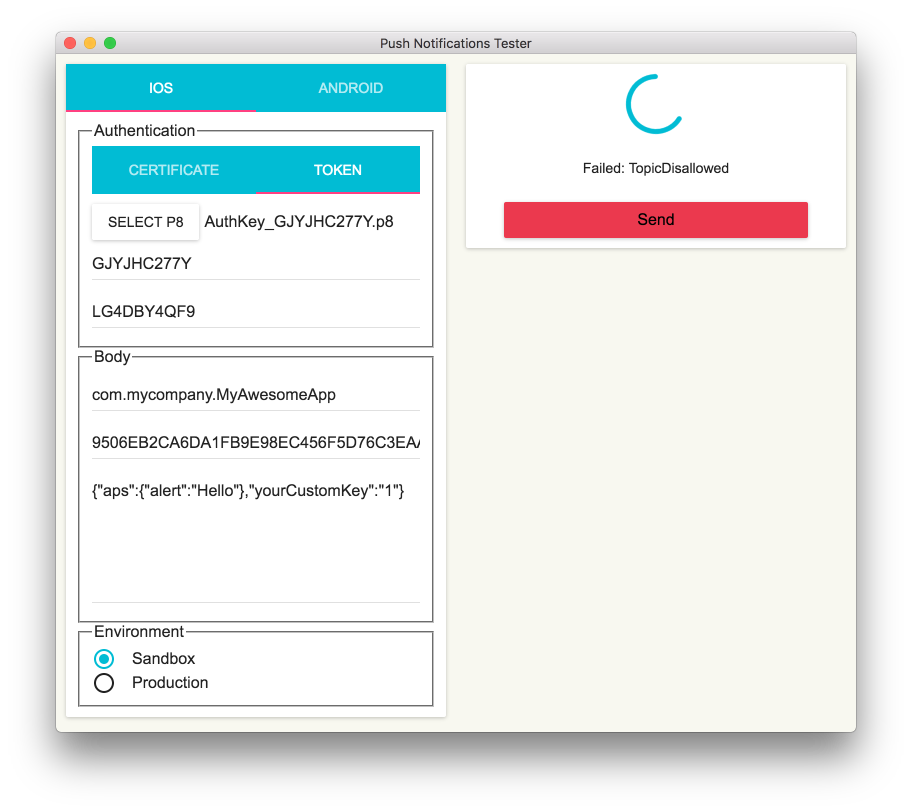

I often need to test push notification to iOS and Android apps. And I want to support both certificate and key p8 authentication for Apple Push Notification service, so I built this tool.

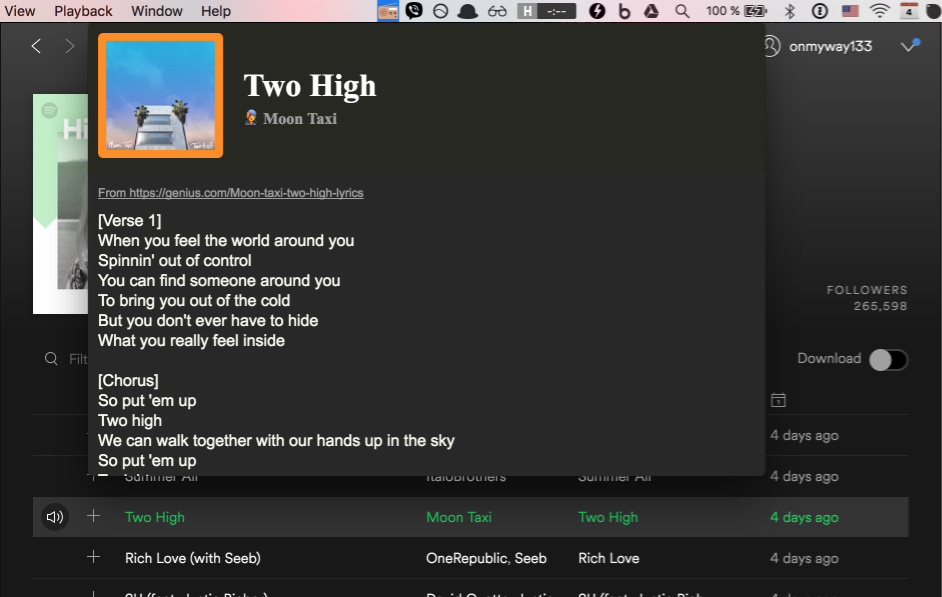

A menu bar app to show the lyric of the playing Spotify song

When I listen to songs in Spotify, I want to see the lyrics too. The lyrics are fetched from https://genius.com/ and displayed in a wonderful UI.

GitHub Notifications on your desktop.

I use this to get real time notification for issues and pull requests for projects on GitHub. I hope there is support for Bitbucket soon.

FinderGo is both a native macOS app and a Finder extension. It has toolbar button that opens terminal right within Finder in the current directory. You can configure it to open either Terminal, iTerm2 or Hyper

This is about themes. There is the very popular Dracula theme, but I find it too strong for the eyes. I don’t use Atom, but I love its One Dark UI. I used to maintain my own theme for Xcode called DarkSide, but now I use xcode-one-dark for Xcode and Atom One Dark Theme for Visual Studio Code.

I also use Fira Code font in Xcode, Visual Studio Code and Android Studio, which has beautiful ligatures.

I use Chrome for its speed and wonderful support for extensions. The extensions I made are github-chat to enable chat within GitHub and github-extended to see more pinned repositories.

There are also refined github, github-repo-size and octotree that are indispensable for me.

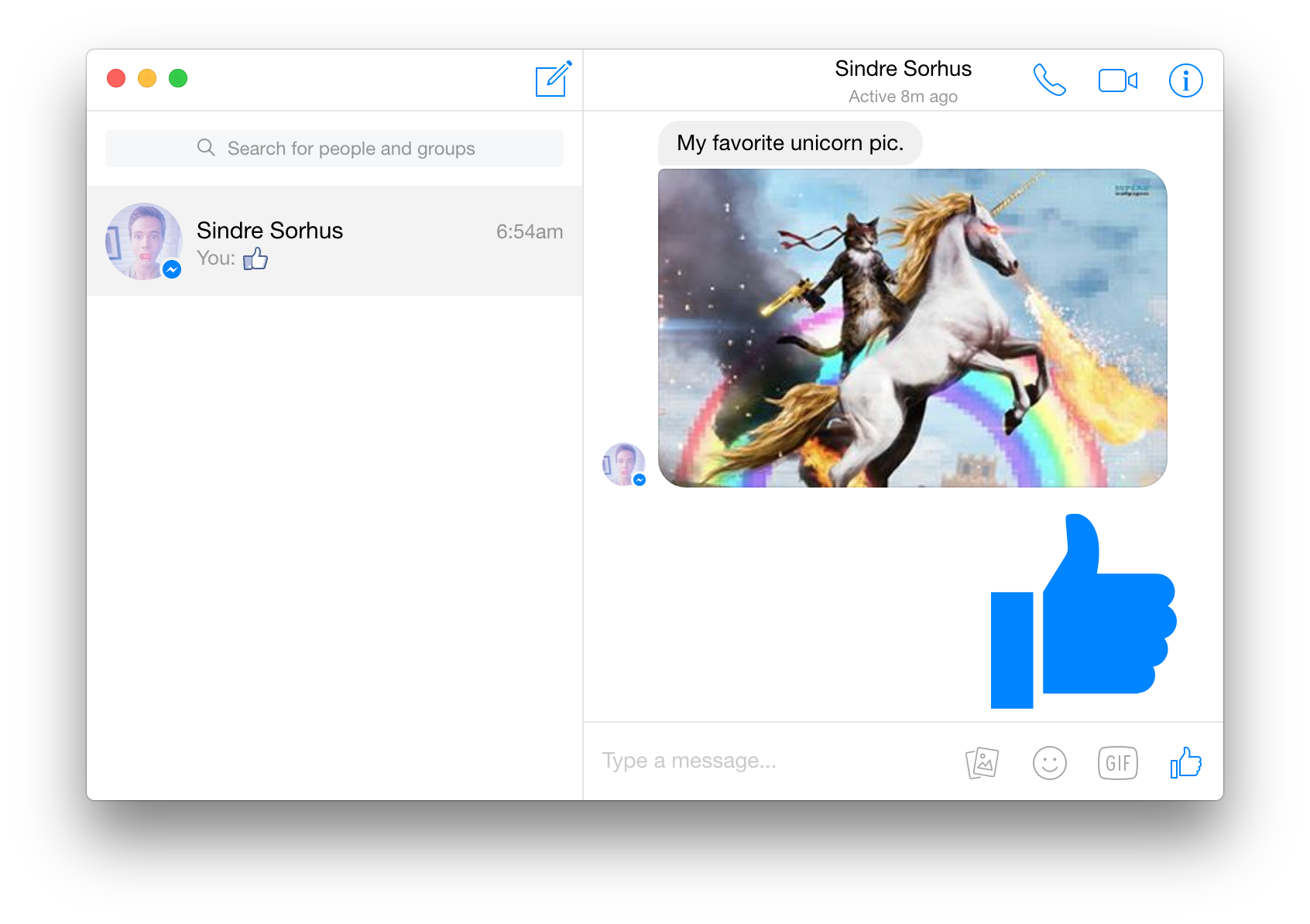

Caprine is an unofficial and privacy focused Facebook Messenger app with many useful features.

Sublime Text is a sophisticated text editor for code, markup and prose. You’ll love the slick user interface, extraordinary features and amazing performance.

Sublime Text is simply fast and the editing experience is very good. I’ve used Atom but it is too slow.

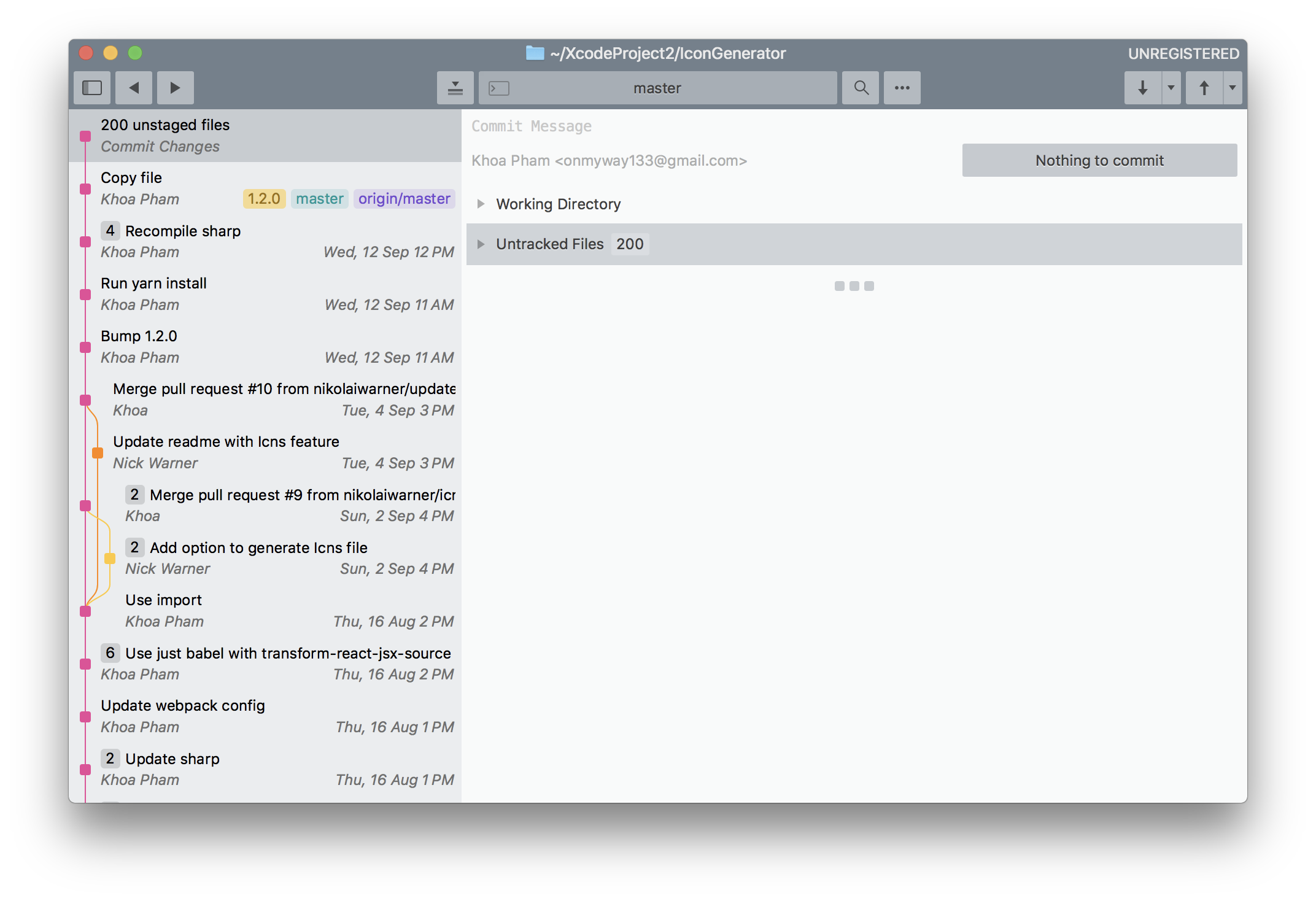

Meet a new Git client, from the makers of Sublime Text

Sublime Merge never lets me down. The source control app is simply very quick. I used SourceTree in the past, but it was very slow, had problems with authentication to Bitbucket and GitHub, and hung very often on React Native apps, which have lots of node modules committed.

1Password remembers them all for you. Save your passwords and log in to sites with a single click. It’s that simple.

Everyone needs strong and unique passwords these days. This tool is indispensable

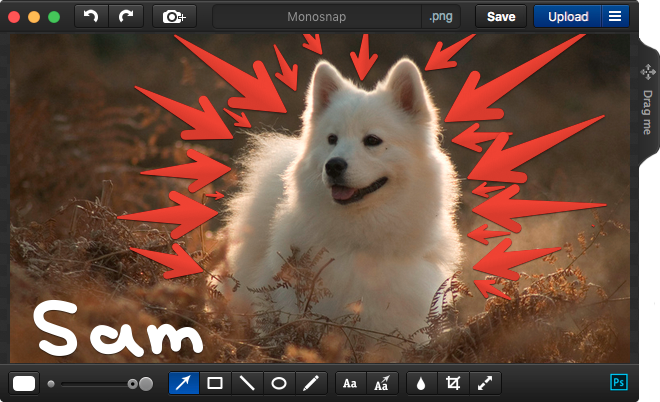

Make screenshots. Draw on it. Shoot video and share your files. It’s fast, easy and free.

I haven’t found a good open source alternative; this is good at capturing the screen or a portion of it.

iTunes and QuickTime have problems with some video codecs. VLC can play all kinds of video types.

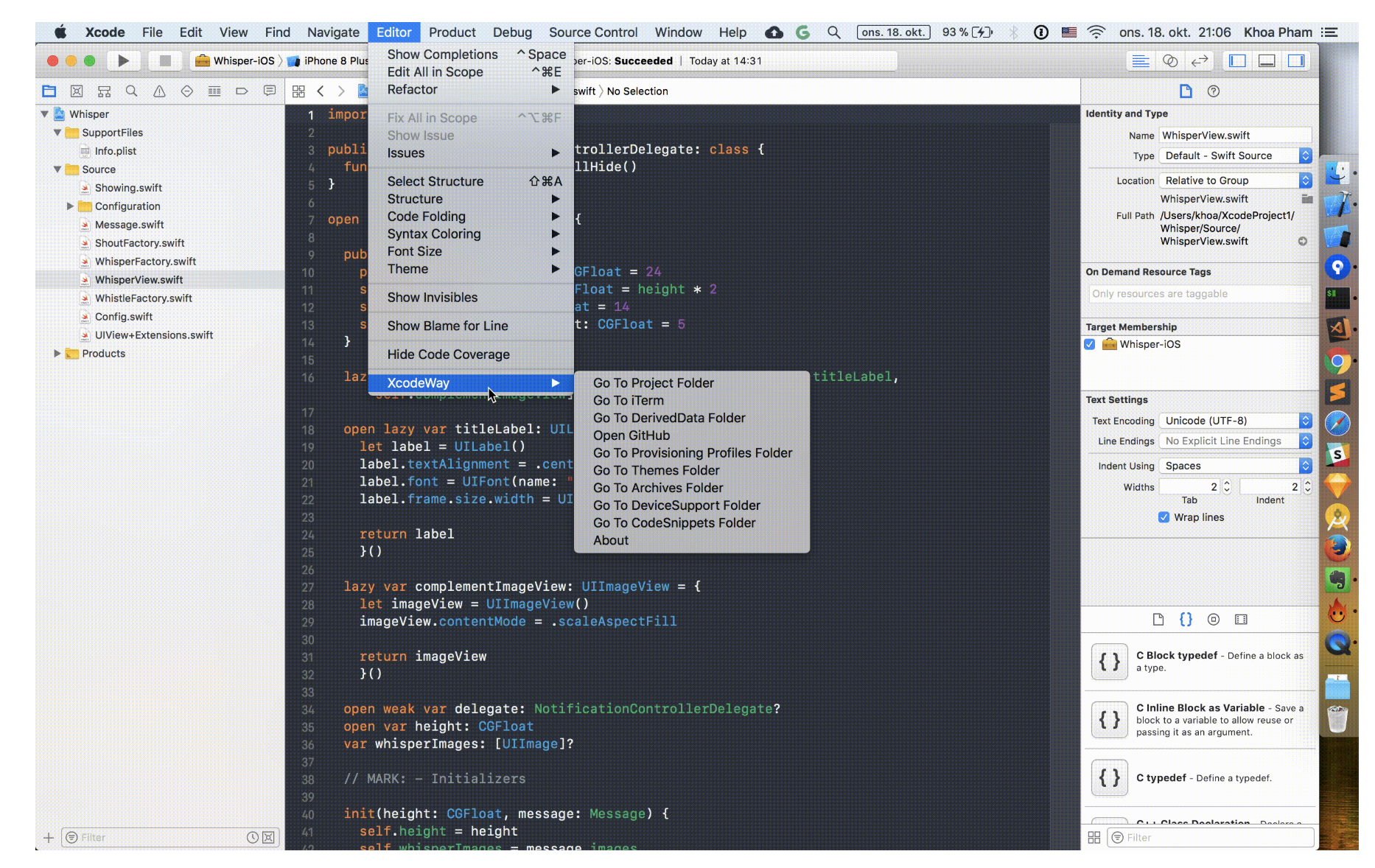

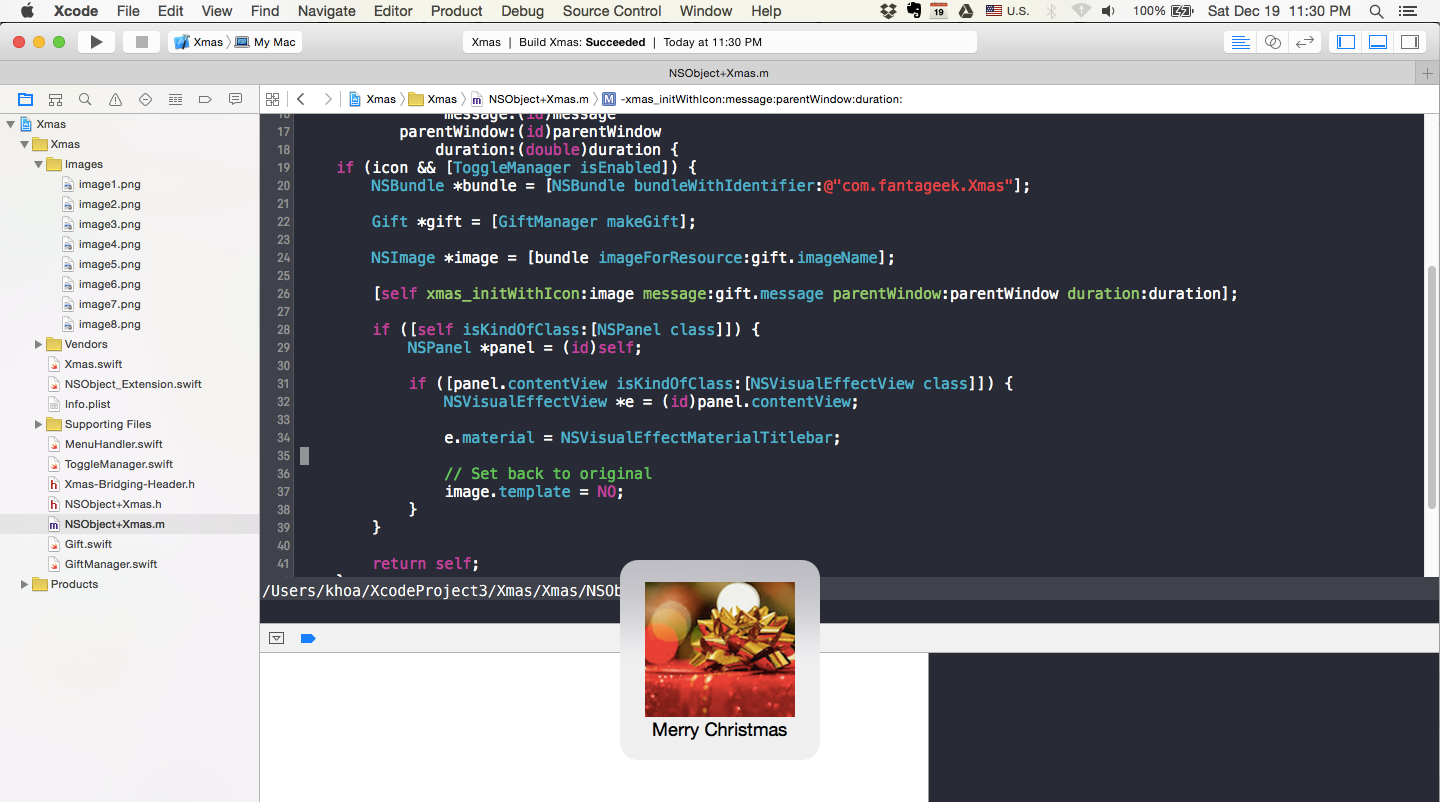

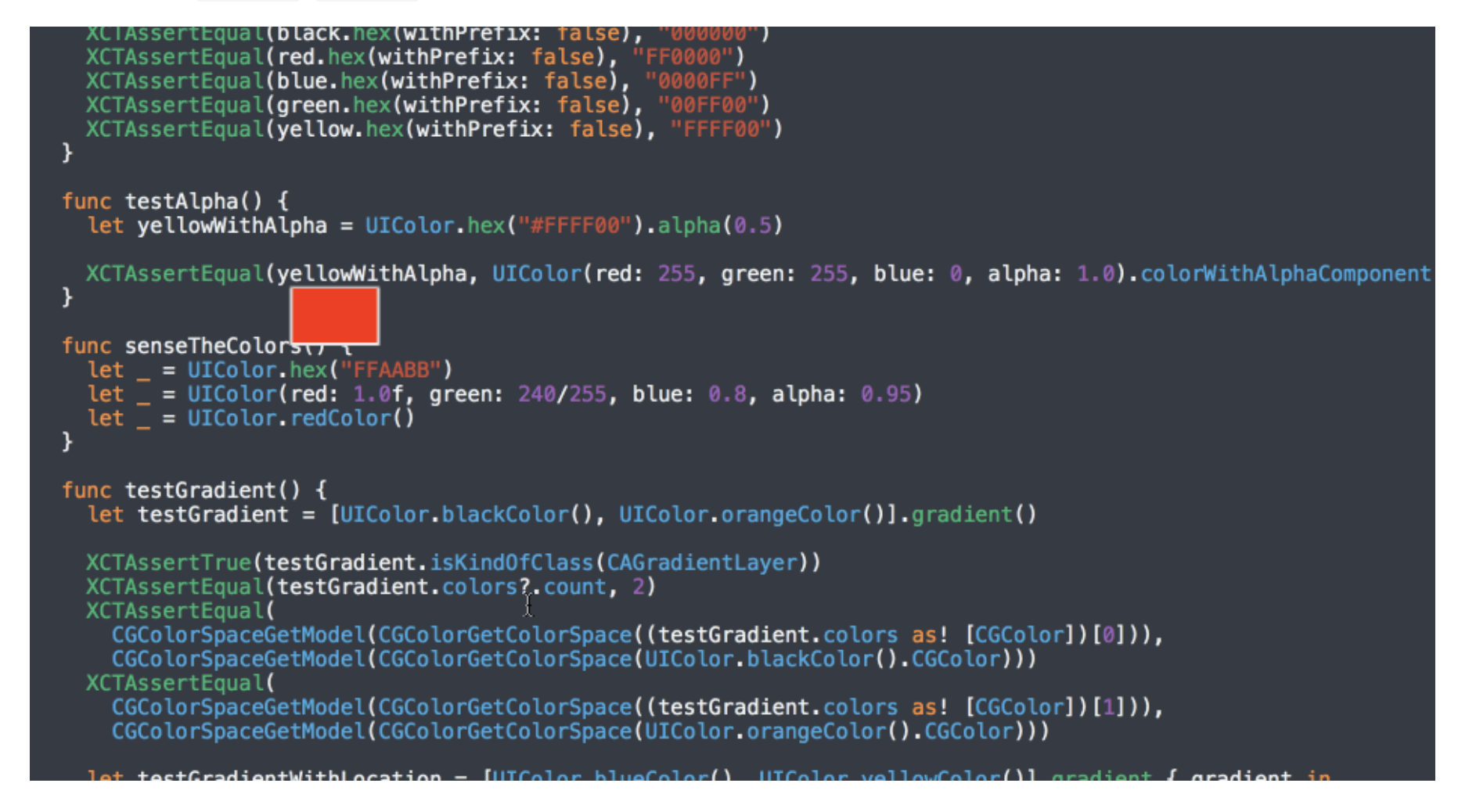

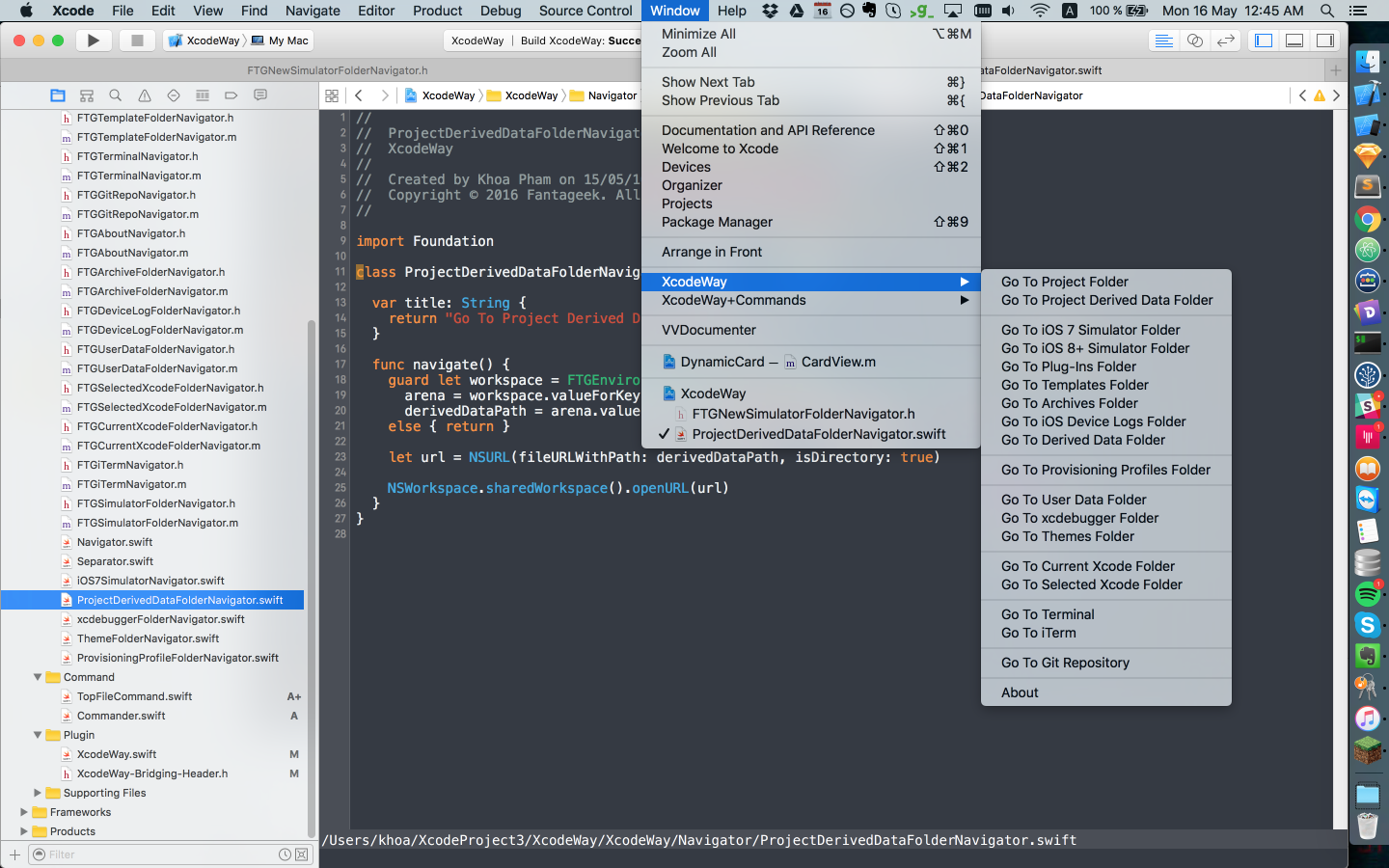

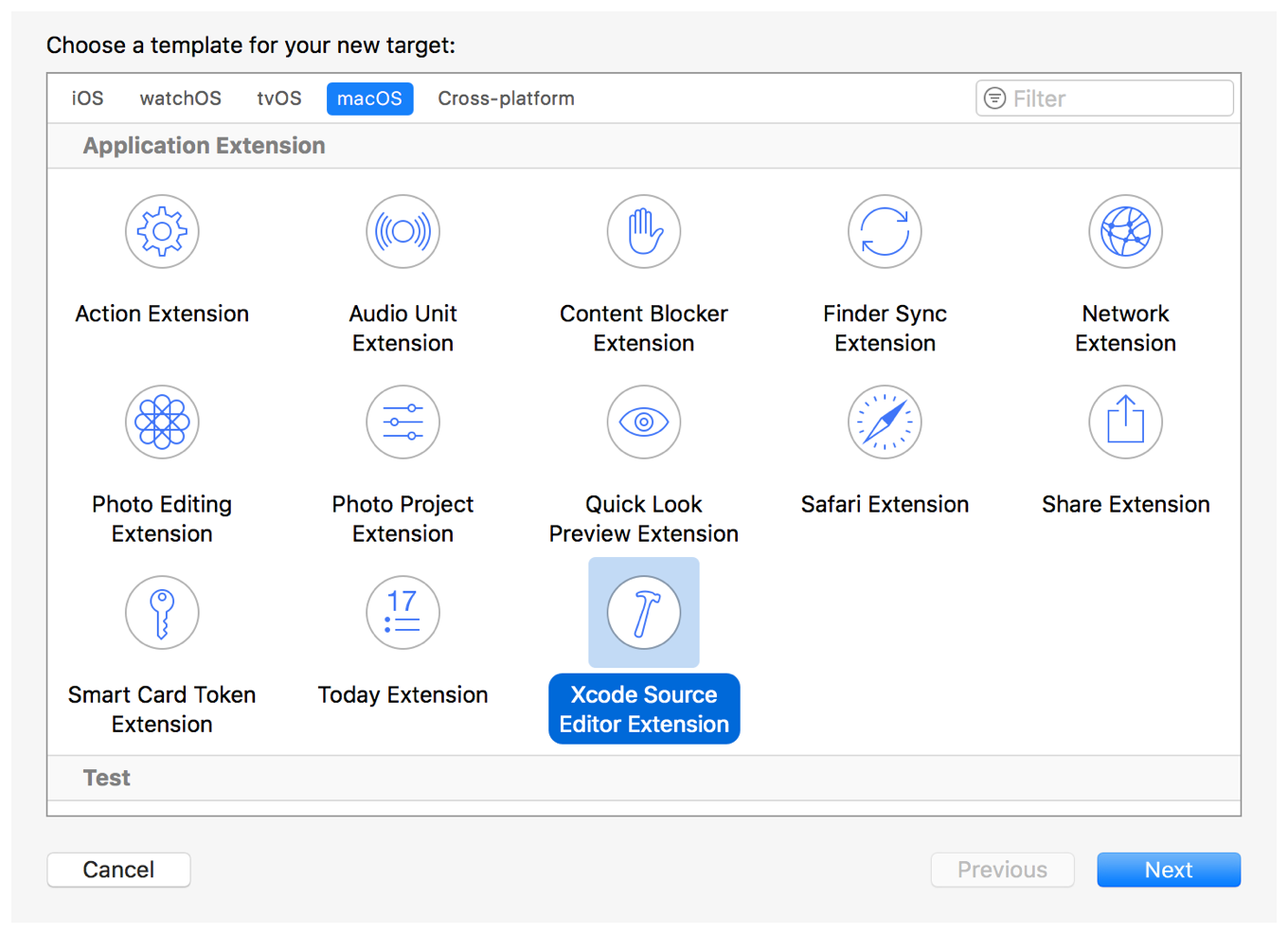

Xcode is the go-to editor for iOS developers. The current version is Xcode 10. Since Xcode 8, plugins are not supported. The way to go is Xcode extensions.

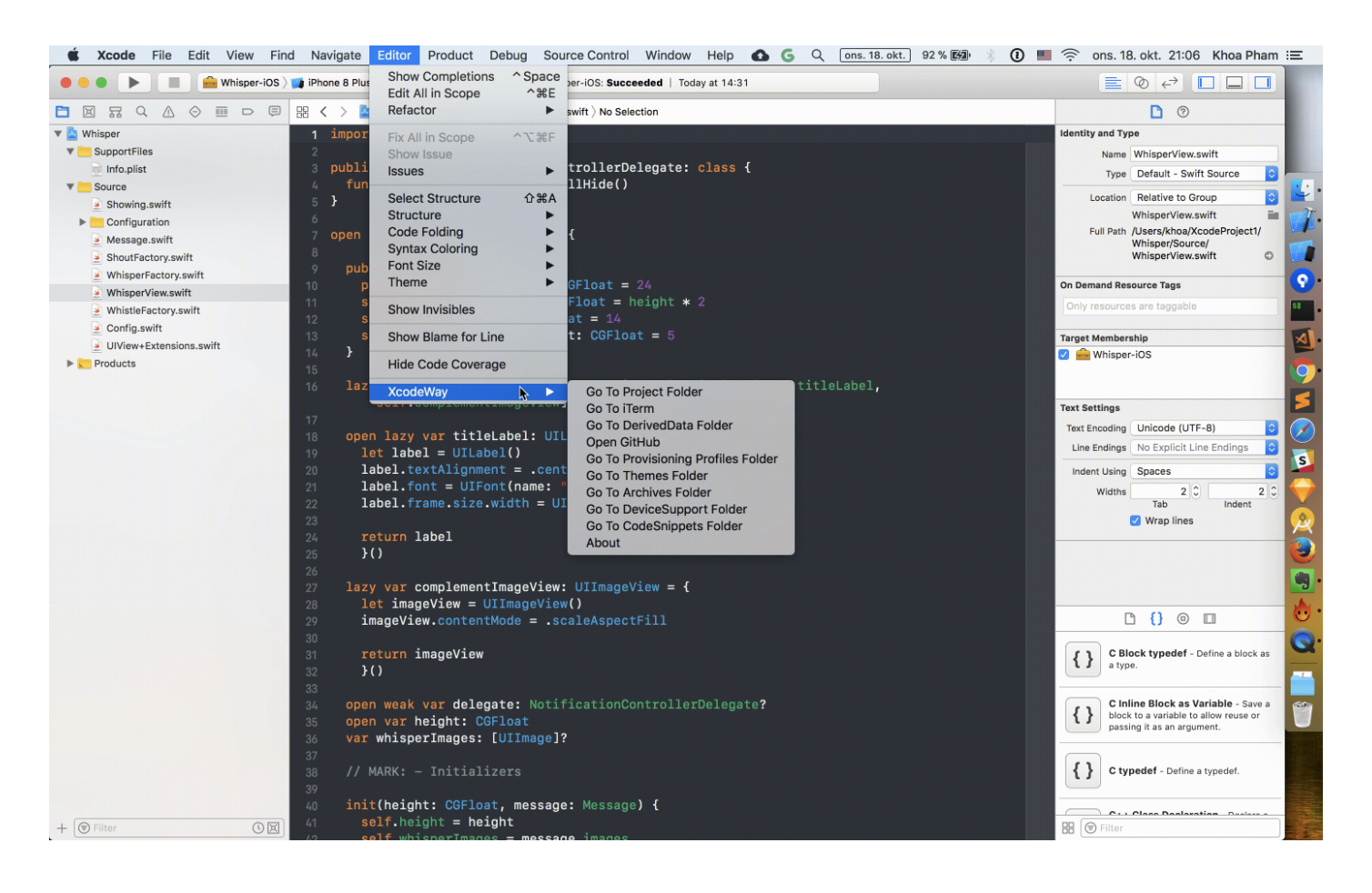

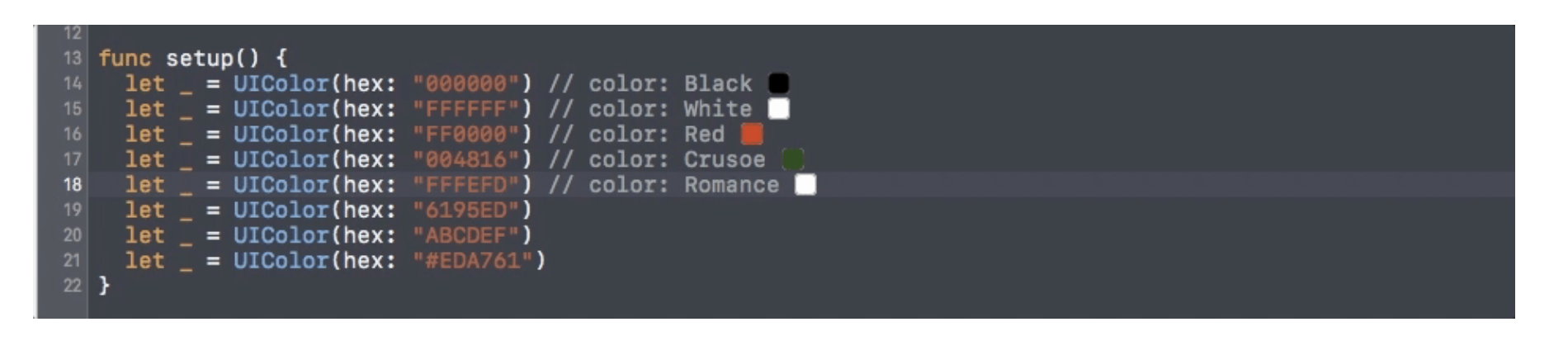

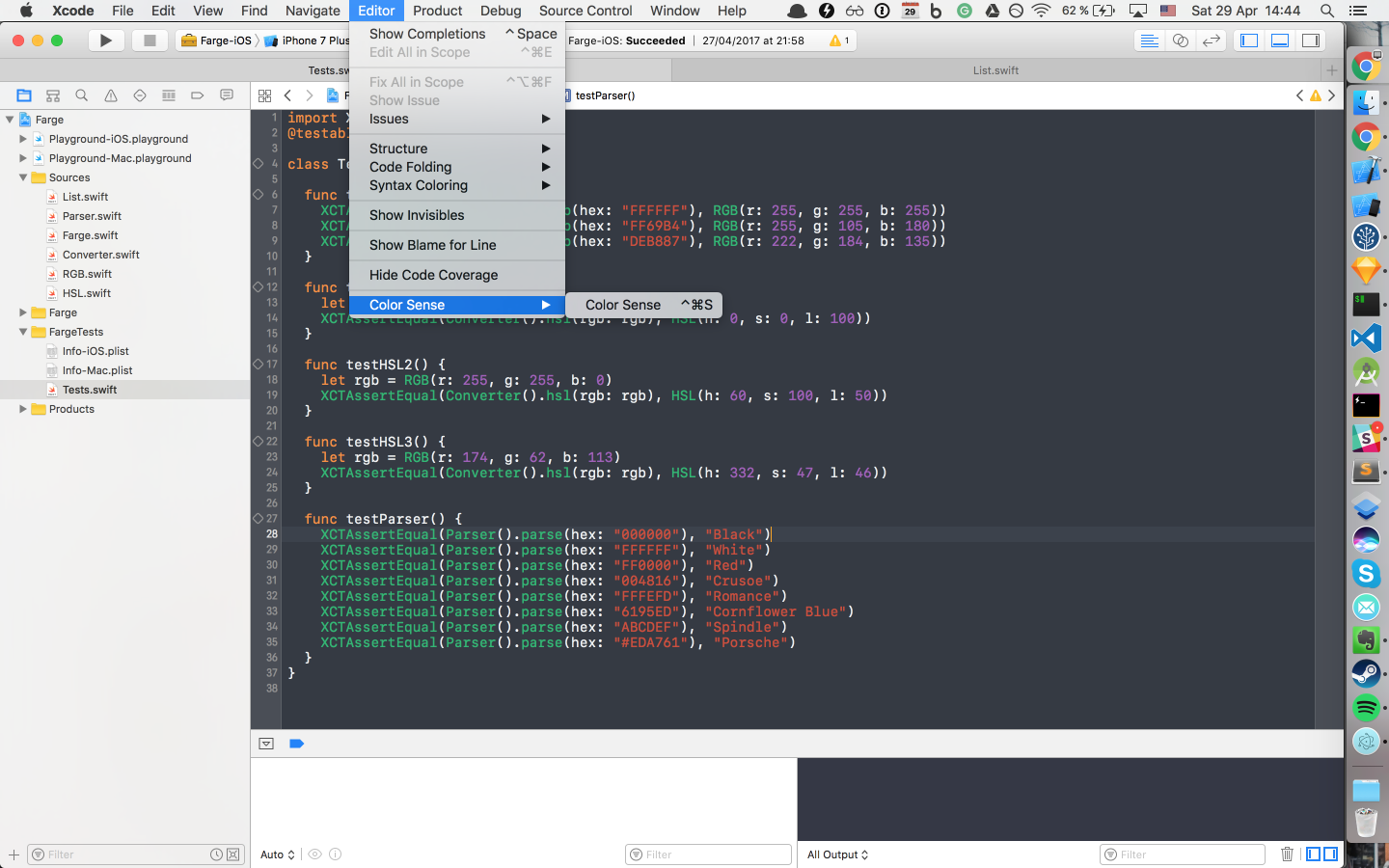

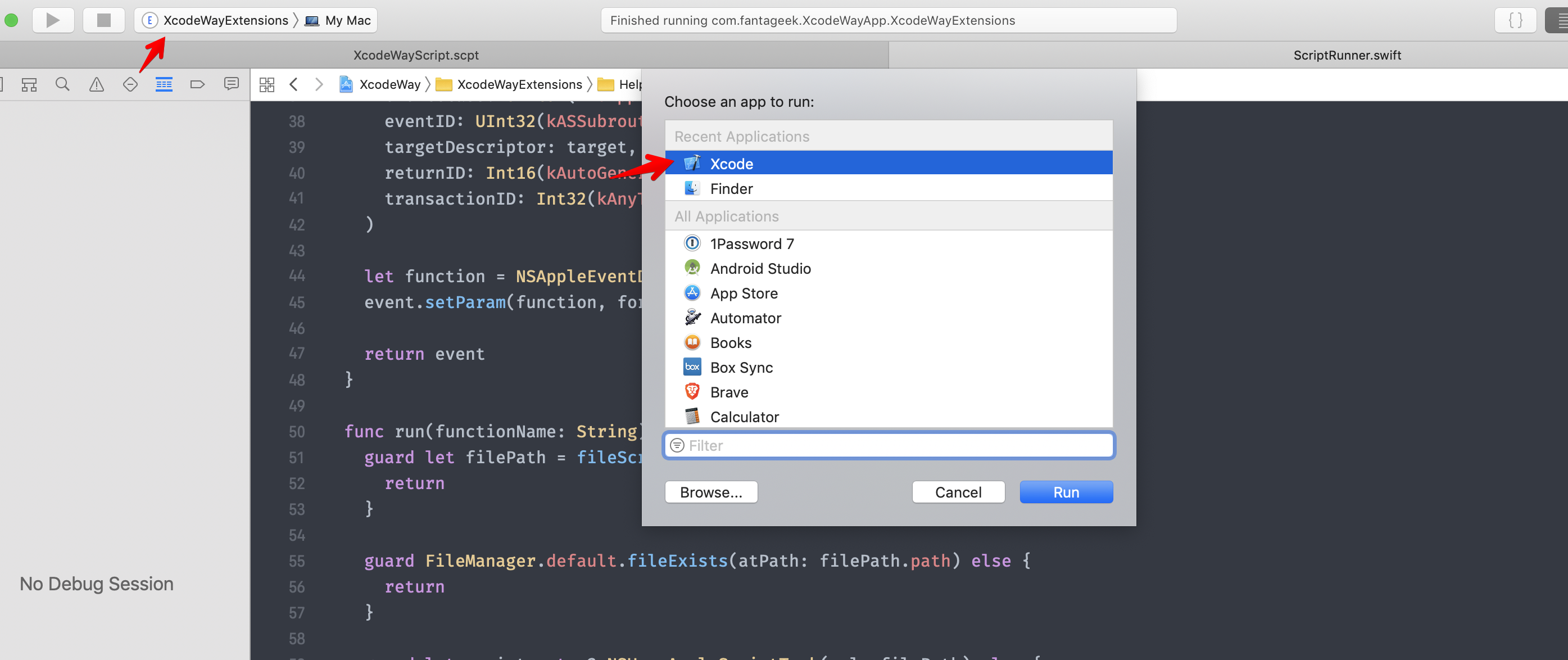

I have developed XcodeColorSense2 to easily recognise hex colors, and XcodeWay to easily navigate to many places right from Xcode

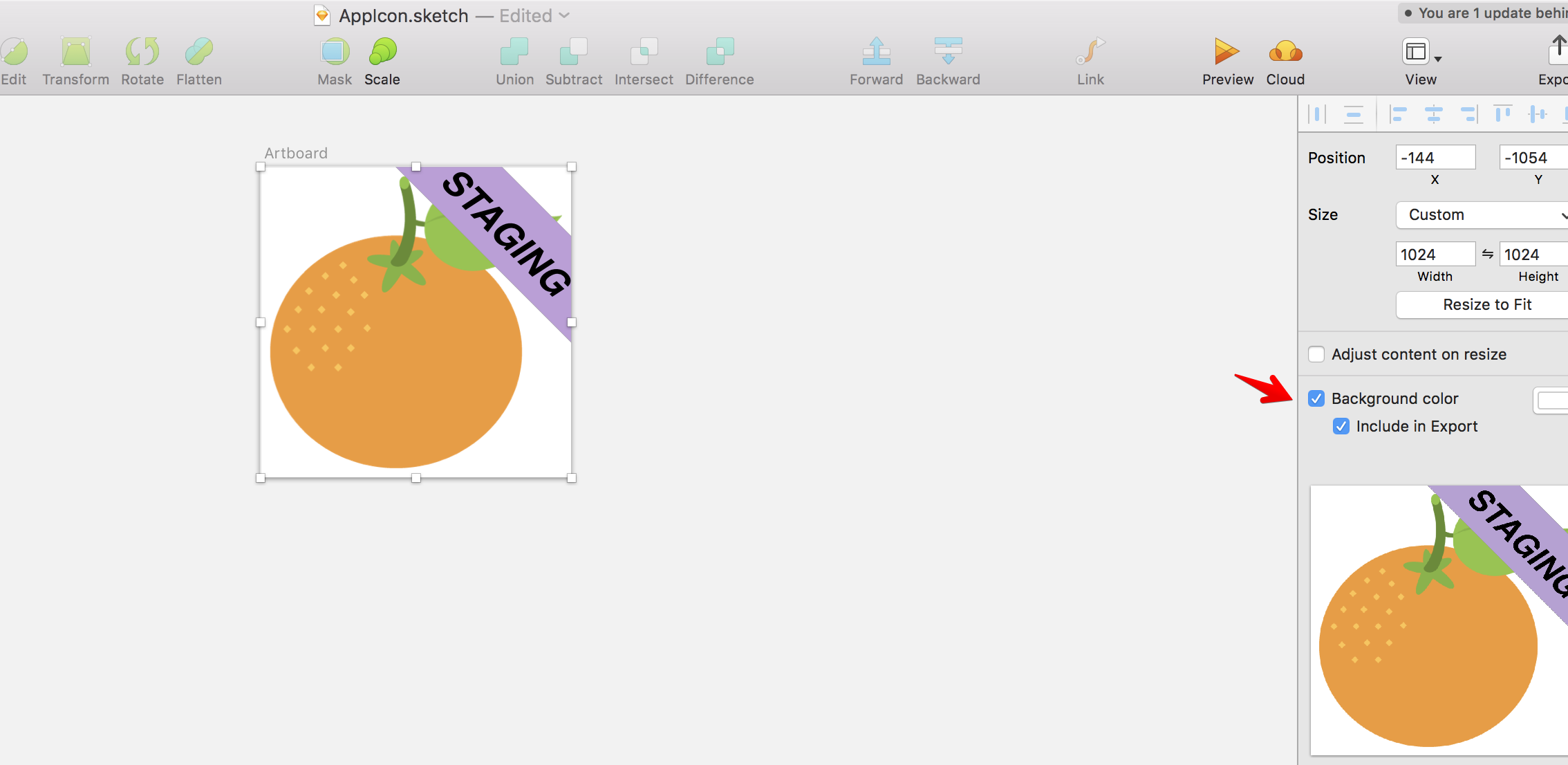

Sketch is a design toolkit built to help you create your best work — from your earliest ideas, through to final artwork.

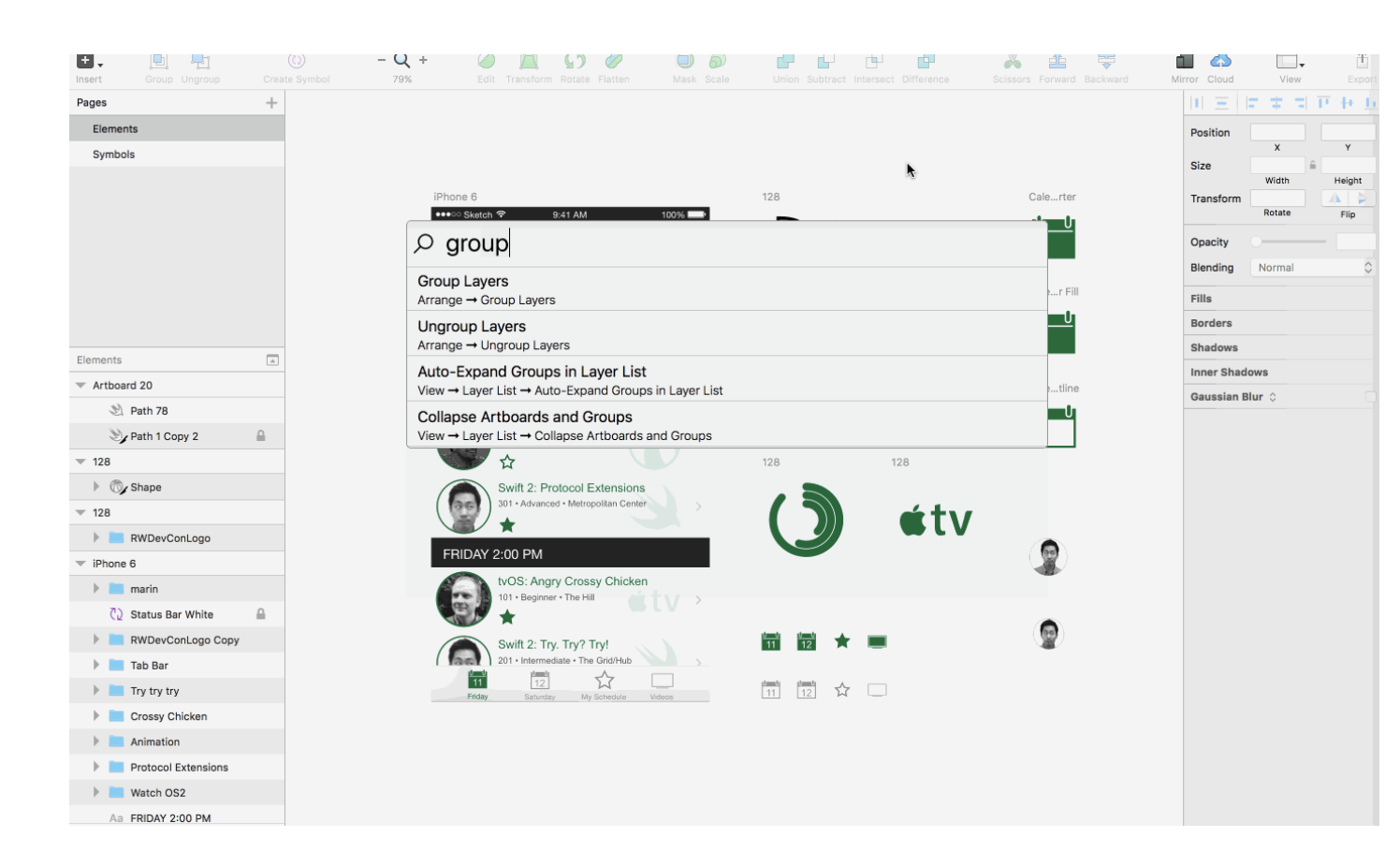

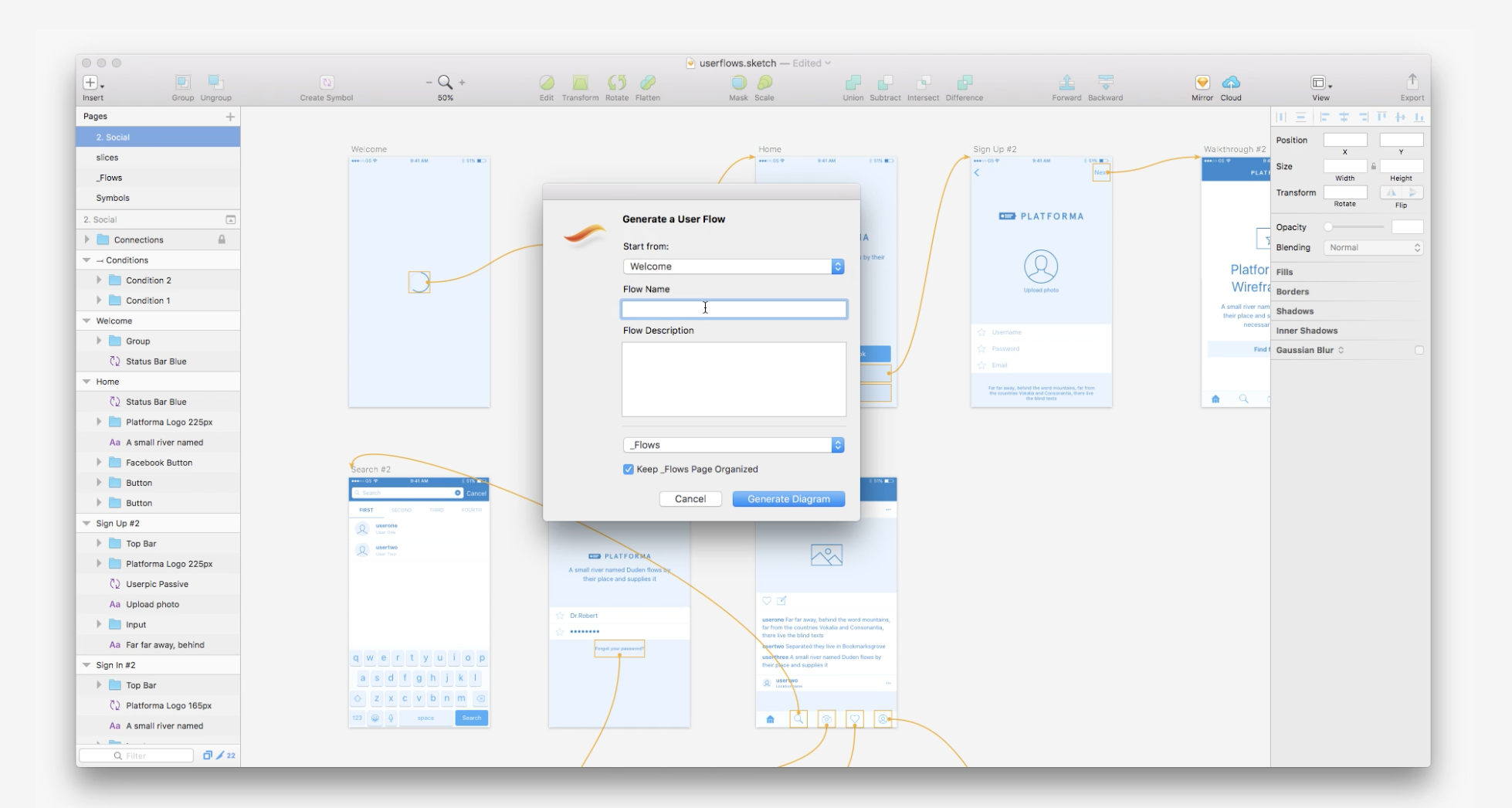

Sketch is the most popular design tool these days. There are many cool plugins for it. I use Sketch-Action and User Flows

I hope you find some new tools to try. If you know other awesome tools, feel free to make a comment. Here are some more links to discover further

Issue #273

Original post https://hackernoon.com/using-bitrise-ci-for-android-apps-fa9c48e301d8

CI, short for Continuous Integration, is a good practice for moving fast and confidently, where code is integrated into a shared repository many times a day. The ability to have pull requests built and tested, and release builds distributed to testers, allows the team to verify automated builds and identify problems quickly.

I’ve been using BuddyBuild for both iOS and Android apps and was very happy with it. The experience of creating new apps and deploying builds is awesome. It works so well that Apple acquired it, which then led to Android apps no longer being supported and new customers no longer being able to register.

We are one of those who are looking for new alternatives. We’ve been using TravisCI, CircleCI and Jenkins to deploy to Fabric. There is also TeamCity that is promising. But after a quick survey with friends and people, Bitrise is the most recommended. So maybe I should try that.

The thing I like about Bitrise is its wide range of workflow steps. They are just scripts that execute certain actions, and most of them are open source. There’s also a yml config file, but everything can be done in the web interface, so I don’t need to look into pages of documentation just to get the configuration right.

This post is not a promotion for Bitrise; it is just about trying and adapting to new things. Nothing is eternal in tech, things come and go fast. Below are some of the lessons I learned after using Bitrise. I hope you find them useful.

There is no Android Build step in the default ‘primary’ workflow, as primary is generally used for testing the code for every push. There is an Android Build step in the deploy workflow and the app gets built by running this workflow. However, I like to have the Android Build step in my primary workflow, so I added it there.

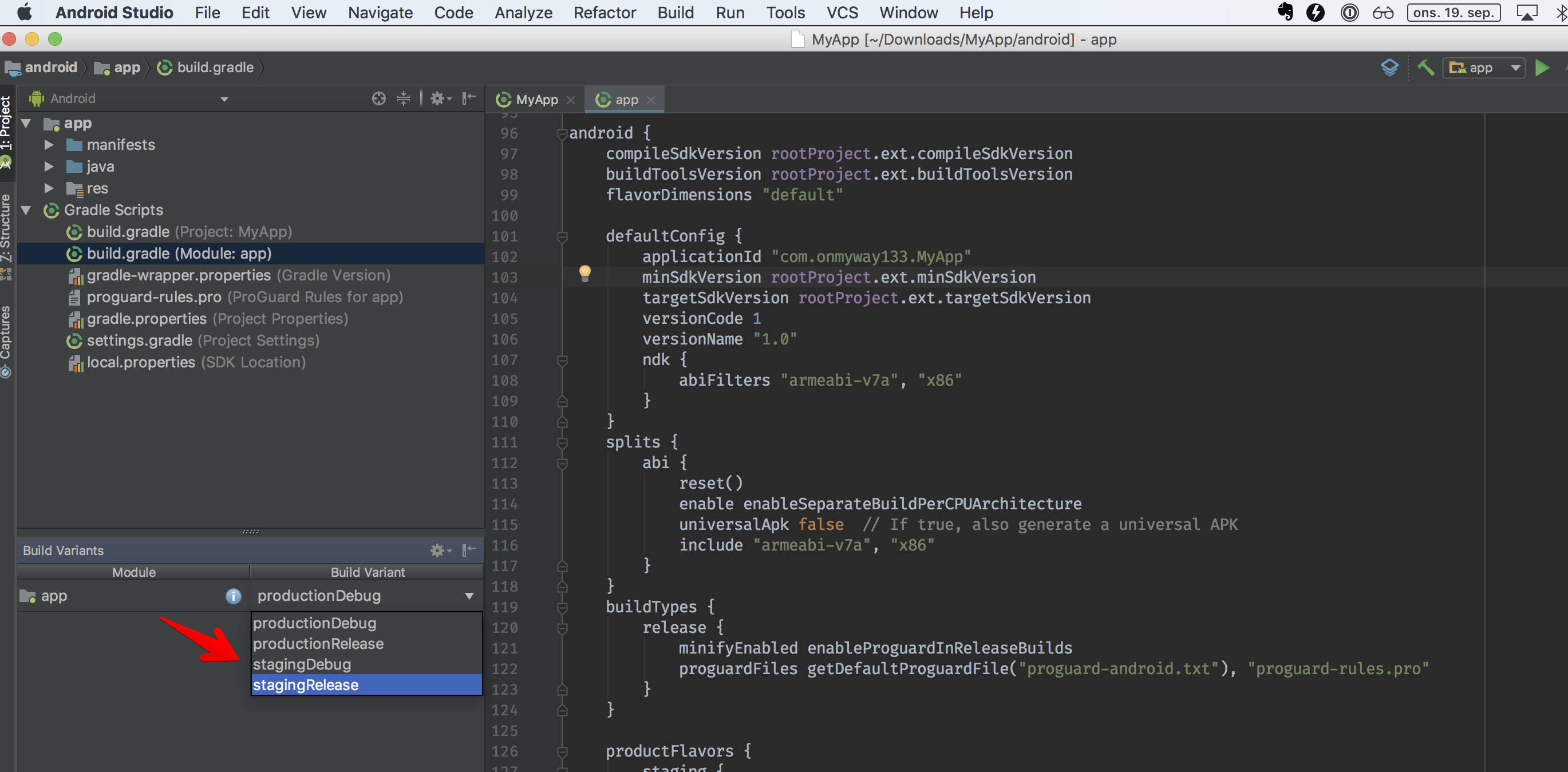

Usually I want the app module and the stagingRelease build variant, as we need to deploy staging builds to internal testers.

If you go to the Bitrise.yml tab you can see that the configuration file has been updated. This is very handy. I've used some other CI services and I needed to look up their documentation on how to make this yml work.

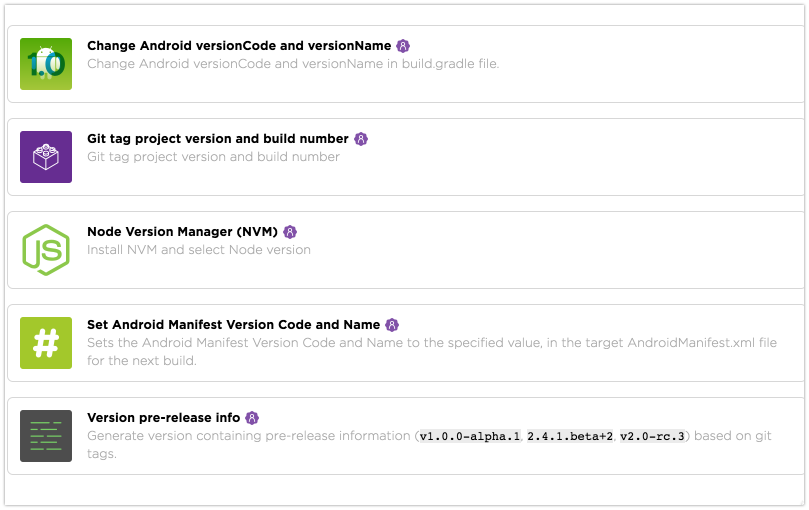

I've used some other CI services before and the app version code surely does not start from 0. So it makes sense that Bitrise can auto bump the version code from the current number. There are some predefined steps in Workflow but they don't serve my needs.

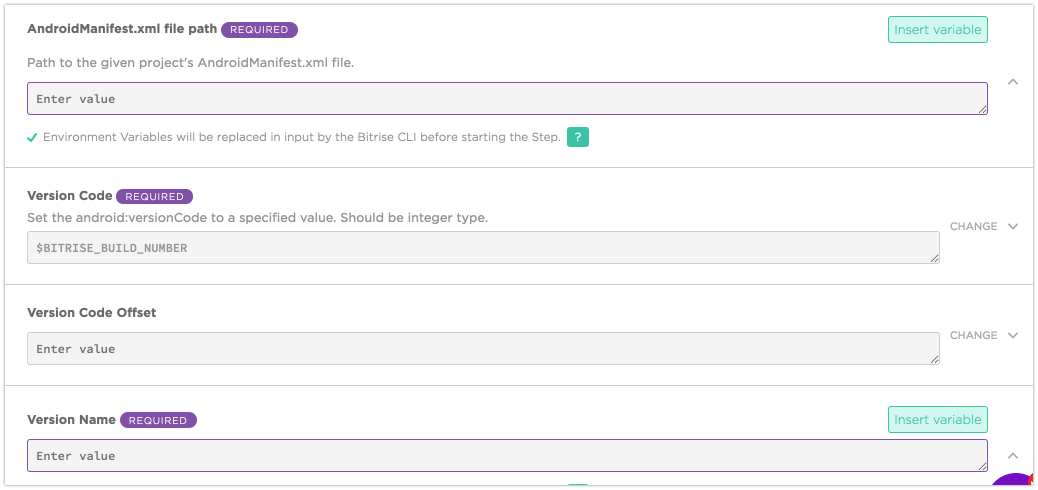

For the Set Android Manifest Version code and name step, the source code is here, so I understand what it does. It works by modifying the AndroidManifest.xml file using sed. This article, Adjust your build number, is not clear enough.

    sed -i.bak "s/android:versionCode="\"${VERSIONCODE}\""/android:versionCode="\"${CONFIG_new_version_code}\""/" ${manifest_file}

In our projects, the versionCode comes from an environment variable BUILD_NUMBER in Jenkins, so we need to look up the equivalent in Available Environment Variables. It is BITRISE_BUILD_NUMBER, which is the build number of the build on bitrise.io.

This is how versionCode looks like in build.gradle

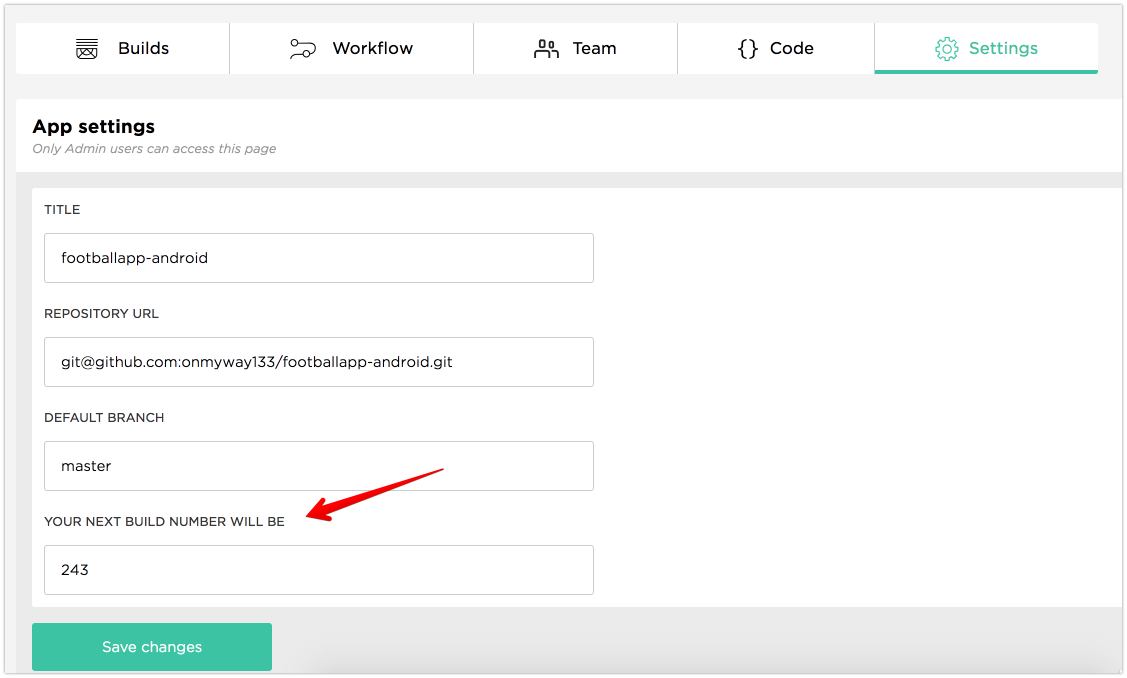

versionCode (System.*getenv*("BITRISE_BUILD_NUMBER") as Integer ?: System.*getenv*("BUILD_NUMBER") as Integer ?: 243)243 is the current version code of this project, so let’s go to app’s Settings and change Your next build number will be

I hope Bitrise gets its own crash reporting tool. For now I use Crashlytics in Fabric. And although Bitrise can distribute builds to testers, I still need to cross deploy to Fabric for historical reasons.

There is only the script steps-fabric-crashlytics-beta-deploy to deploy IPA files for iOS apps, so we need something for Android. Fortunately I can use the Fabric plugin for Gradle.

Follow Install Crashlytics via Gradle to add the Fabric plugin. Basically you need to add these dependencies to your app's build.gradle

    buildscript {
        repositories {
            google()
            maven { url 'https://maven.fabric.io/public' }
        }
        dependencies {
            classpath 'io.fabric.tools:gradle:1.+'
        }
    }

    apply plugin: 'io.fabric'

    dependencies {
        compile('com.crashlytics.sdk.android:crashlytics:2.9.4@aar') {
            transitive = true;
        }
    }

and API credentials in Manifest file

    <meta-data
        android:name="io.fabric.ApiKey"
        android:value="67ffdb78ce9cd50af8404c244fa25df01ea2b5bc"
    />

Modern Android Studio usually includes a gradlew executable file in the root of your project. Run ./gradlew tasks for the list of tasks that your app can perform, and look for Build tasks that start with assemble. Read more in Build your app from the command line

You can execute all the build tasks available to your Android project using the Gradle wrapper command line tool. It’s available as a batch file for Windows (gradlew.bat) and a shell script for Linux and Mac (gradlew.sh), and it’s accessible from the root of each project you create with Android Studio.

For me, I want to deploy staging release build variant, so I run. Check that the build is on Fabric.

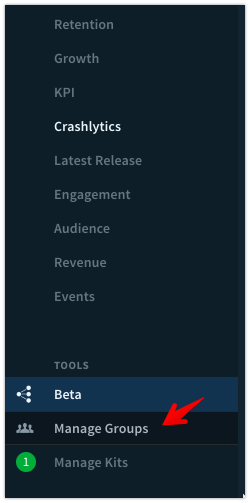

./gradlew assembleStagingRelease crashlyticsUploadDistributionStagingReleaseGo to your app on Fabric.io and create group of testers. Note that alias that is generated for the group

Go to your app's build.gradle and add ext.betaDistributionGroupAliases='my-internal-testers' to your desired productFlavors or buildTypes. For me, I add it to staging under productFlavors

    productFlavors {
        staging {
            // …
            ext.betaDistributionGroupAliases='hyper-internal-testers-1'
        }
        production {
            // …
        }
    }

Now that the command runs correctly, let's add it to Bitrise

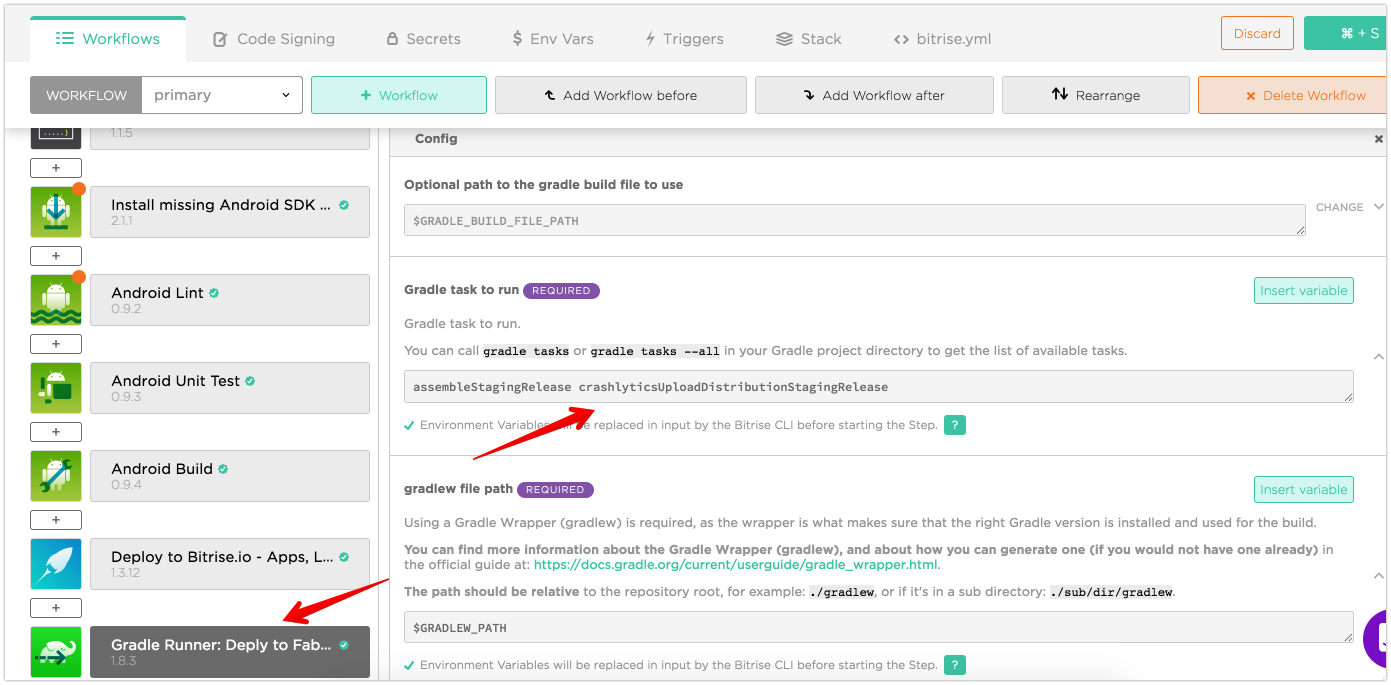

Go to the Workflow tab, add a Gradle Run step and place it below the Deploy to Bitrise.io step.

Expand Config, and add assembleStagingRelease crashlyticsUploadDistributionStagingRelease to Gradle task to run.

Now start a new build in Bitrise manually or trigger a new build by making a pull request. You can see that the version code is increased for every build, and the build gets cross deployed to Fabric for your defined tester groups.

As an alternative, you can also use the Fabric/Crashlytics deployer; just update its config with your app's key and secret found in settings.

I hope those tips are useful to you. Here are some more links to help you explore further

Issue #271

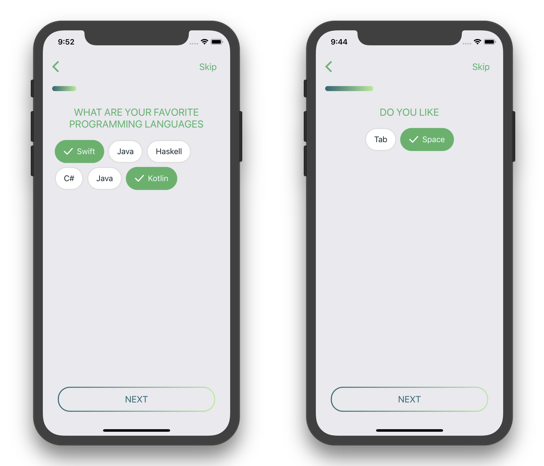

Original post https://hackernoon.com/how-to-make-tag-selection-view-in-react-native-b6f8b0adc891

Besides React style programming, Yoga is another cool feature of React Native. It is a cross-platform layout engine which implements Flexbox so we use the same layout code for both platforms.

As someone who uses Auto Layout in iOS and Constraint Layout in Android, I found Flexbox a bit hard to use at first, but there are many tasks that Flexbox does very well, such as distributing elements in space and flow layout. In this post we will use Flexbox to build a tag selection view using just JavaScript code. This is very easy to do, so we don't need to install extra dependencies.

Our tag view will support both multiple selection and exclusive selection. First, we need a custom Button.

Button is one of the basic elements in React Native, but it is somewhat limited if we want to have custom content inside the button, for example texts, images and background

    import { Button } from 'react-native'

    ...

    <Button
      onPress={onPressLearnMore}
      title="Learn More"
      color="#841584"
      accessibilityLabel="Learn more about this purple button"
    />

Luckily we have TouchableOpacity, which is a wrapper for making views respond properly to touches. On press down, the opacity of the wrapped view is decreased, dimming it.

To implement the button in our tag view, we need a button with a background and a check image. Create a file called BackgroundButton.js

    import React from 'react'
    import { TouchableOpacity, View, Text, StyleSheet, Image } from 'react-native'
    import R from 'res/R'

    export default class BackgroundButton extends React.Component {
      render() {
        const styles = this.makeStyles()
        return (
          <TouchableOpacity style={styles.touchable} onPress={this.props.onPress}>
            <View style={styles.view}>
              {this.makeImageIfAny(styles)}
              <Text style={styles.text}>{this.props.title}</Text>
            </View>
          </TouchableOpacity>
        )
      }

      makeImageIfAny(styles) {
        if (this.props.showImage) {
          return <Image style={styles.image} source={R.images.check} />
        }
      }

      makeStyles() {
        return StyleSheet.create({
          view: {
            flexDirection: 'row',
            borderRadius: 23,
            borderColor: this.props.borderColor,
            borderWidth: 2,
            backgroundColor: this.props.backgroundColor,
            height: 46,
            alignItems: 'center',
            justifyContent: 'center',
            paddingLeft: 16,
            paddingRight: 16
          },
          touchable: {
            marginLeft: 4,
            marginRight: 4,
            marginBottom: 8
          },
          image: {
            marginRight: 8
          },
          text: {
            textAlign: 'center',
            color: this.props.textColor,
            fontSize: 16
          }
        })
      }
    }

Normally we use const styles = StyleSheet.create({}), but since we want our button to be configurable, we make styles into a function, so on every render we get new styles with the proper configuration. The properties we support are borderColor, textColor, backgroundColor and showImage.
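To see the idea of styles as a function of props in isolation, here is a minimal sketch. The helper name makeButtonStyles and the colour values are assumptions for illustration, and it returns plain objects so it can run outside React Native; in the real component the result would go through StyleSheet.create.

```javascript
// Hypothetical helper: builds the style object for one button from its props.
// Plain objects are used so the sketch runs outside React Native; in the
// component these would be passed through StyleSheet.create.
function makeButtonStyles({ borderColor, backgroundColor, textColor }) {
  return {
    view: {
      flexDirection: 'row',
      borderRadius: 23,
      borderWidth: 2,
      borderColor,
      backgroundColor,
      height: 46
    },
    text: {
      fontSize: 16,
      color: textColor
    }
  }
}

// Two buttons with different props get two independent style objects.
const onStyles = makeButtonStyles({ borderColor: 'green', backgroundColor: 'white', textColor: 'green' })
const offStyles = makeButtonStyles({ borderColor: 'gray', backgroundColor: 'white', textColor: 'gray' })
console.log(onStyles.view.borderColor, offStyles.view.borderColor)
```

The trade-off is that a new style object is created on every call, which is fine for a handful of tag buttons.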

In the makeImageIfAny we only need to return Image if the view is selected. We don’t have the else case, so in if showImage is false, this returns undefined and React won’t render any element

makeImageIfAny(styles) {

if (this.props.showImage) {

return <Image style={styles.image} source={R.images.check} />

}

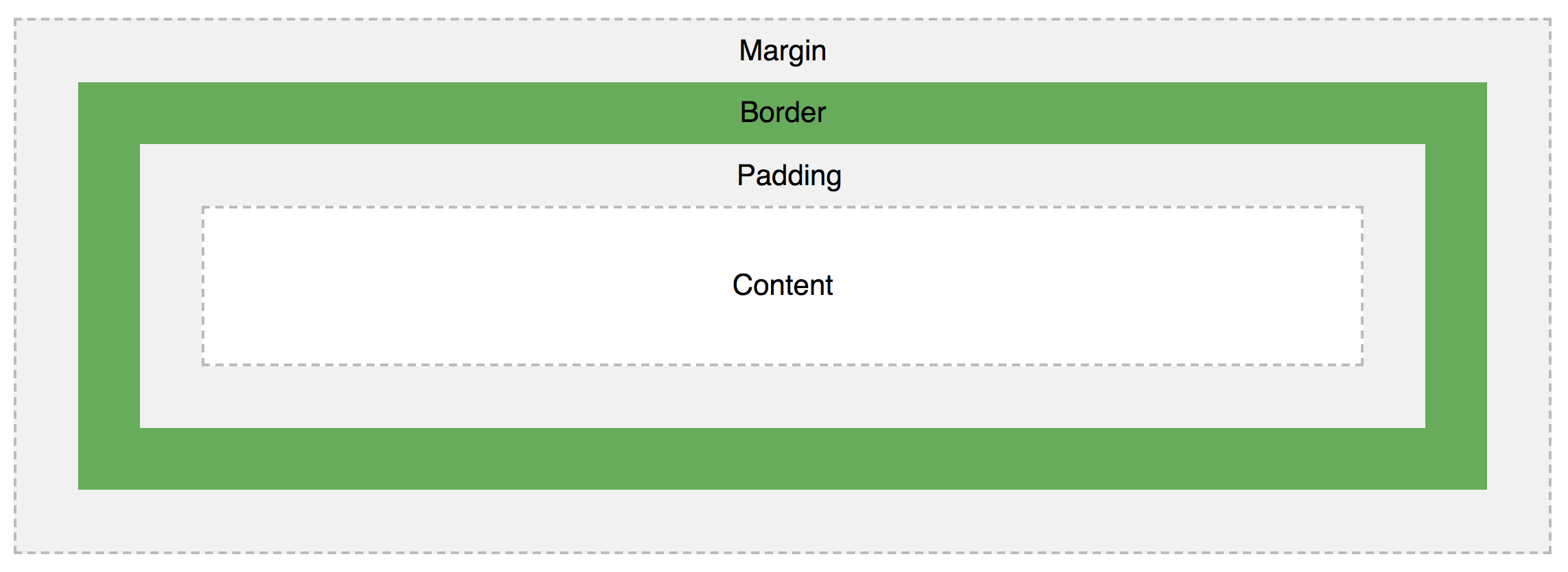

}To understand padding and margin, visit CSS Box Model. Basically padding means clearing an area around the content and padding is transparent, while margin means clearing an area outside the border and the margin also is transparent.

Pay attention to styles . We have margin for touchable so that each tag button have a little margin outside each other.

    touchable: {
      marginLeft: 4,
      marginRight: 4,
      marginBottom: 8
    }

In the view we need flexDirection as row because React Native has flexDirection as column by default. A row means we have Image and Text side by side horizontally inside the button. We also use alignItems and justifyContent to center elements on both the main and cross axes. The padding adds some space between the inner text and the edge of the view.

    view: {
      flexDirection: 'row',
      height: 46,
      alignItems: 'center',
      justifyContent: 'center',
      paddingLeft: 16,
      paddingRight: 16
    }

Create a file called TagsView.js. This is where we parse tags and show a bunch of BackgroundButton

    import React from 'react'
    import { View, StyleSheet, Button } from 'react-native'
    import R from 'res/R'
    import BackgroundButton from 'library/components/BackgroundButton'
    import addOrRemove from 'library/utils/addOrRemove'

    export default class TagsView extends React.Component {
      constructor(props) {
        super(props)
        this.state = {
          selected: props.selected
        }
      }

      render() {
        return (
          <View style={styles.container}>
            {this.makeButtons()}
          </View>
        )
      }

      onPress = (tag) => {
        let selected
        if (this.props.isExclusive) {
          selected = [tag]
        } else {
          selected = addOrRemove(this.state.selected, tag)
        }
        this.setState({
          selected
        })
      }

      makeButtons() {
        return this.props.all.map((tag, i) => {
          const on = this.state.selected.includes(tag)
          const backgroundColor = on ? R.colors.on.backgroundColor : R.colors.off.backgroundColor
          const textColor = on ? R.colors.on.text : R.colors.off.text
          const borderColor = on ? R.colors.on.border : R.colors.off.border
          return (
            <BackgroundButton
              backgroundColor={backgroundColor}
              textColor={textColor}
              borderColor={borderColor}
              onPress={() => {
                this.onPress(tag)
              }}
              key={i}
              showImage={on}
              title={tag} />
          )
        })
      }
    }

    const styles = StyleSheet.create({
      container: {
        flex: 1,
        flexDirection: 'row',
        flexWrap: 'wrap',
        padding: 20
      }
    })

We parse an array of tags to build the BackgroundButton components. We keep the selected array in state because it changes inside the TagsView component. If isExclusive is set, the new selected array contains just the newly selected tag. With multiple selection, we add the newly selected tag to the selected array, or remove it if it was already there.
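The selection rule described above can be sketched as a pure function. The name nextSelection is an assumption for illustration and is not part of the original component; it returns a new array instead of changing the one it receives, which is the usual way to update React state.

```javascript
// Hypothetical pure function mirroring the onPress logic: it returns the next
// `selected` array and never modifies the array it receives.
function nextSelection(selected, tag, isExclusive) {
  if (isExclusive) {
    // Exclusive mode: the pressed tag replaces whatever was selected before.
    return [tag]
  }
  if (selected.includes(tag)) {
    // Multiple mode, tag already selected: drop it.
    return selected.filter((t) => t !== tag)
  }
  // Multiple mode, tag not selected yet: append it to a copy.
  return [...selected, tag]
}

const current = ['Swift']
const added = nextSelection(current, 'Kotlin', false)     // Swift and Kotlin
const removed = nextSelection(current, 'Swift', false)    // empty selection
const exclusive = nextSelection(current, 'Kotlin', true)  // Kotlin only
console.log(added, removed, exclusive, current)
```

Because the input array is left untouched, the previous and next selections can be compared safely if that is ever needed.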

addOrRemove is our homegrown utility function that adds an item to an array if it does not exist, or removes it if it does, using the higher-order filter function.

    const addOrRemove = (array, item) => {
      const exists = array.includes(item)
      if (exists) {
        return array.filter((c) => { return c !== item })
      } else {
        // Return a copy so the array held in state is not mutated
        return [...array, item]
      }
    }

    export default addOrRemove

Pay attention to styles

    const styles = StyleSheet.create({
      container: {
        flex: 1,
        flexDirection: 'row',
        flexWrap: 'wrap',
        padding: 20
      }
    })

The hero here is flexWrap, which specifies whether the flexible items should wrap or not. Take a look at CSS flex-wrap property for other options. Since we have the main axis as row, elements will be wrapped to the next row if there is not enough space. That's how we can achieve a beautiful tag view.
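To get a feel for what wrapping does, here is a deliberately simplified sketch. The function wrapIntoRows and the widths are assumptions for illustration; the real layout is computed by Yoga and also accounts for margins, padding and flex factors.

```javascript
// Hypothetical simplification of flexWrap: 'wrap' on a row container.
// Each item only has a fixed width; margins and padding are ignored.
function wrapIntoRows(widths, containerWidth) {
  const rows = []
  let row = []
  let used = 0
  for (const width of widths) {
    // Start a new row when the next item no longer fits in the current one.
    if (row.length > 0 && used + width > containerWidth) {
      rows.push(row)
      row = []
      used = 0
    }
    row.push(width)
    used += width
  }
  if (row.length > 0) {
    rows.push(row)
  }
  return rows
}

// Five tag buttons of different widths in a container 320 wide:
// the first three fit on one row, the last two move to the next.
const rows = wrapIntoRows([100, 120, 80, 150, 90], 320)
console.log(rows)
```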

Then consuming TagsView is as easy as declaring it inside render

    const selected = ['Swift', 'Kotlin']
    const tags = ['Swift', 'Kotlin', 'C#', 'Haskell', 'Java']

    return (
      <TagsView
        all={tags}
        selected={selected}
        isExclusive={false}
      />
    )

Learning Flexbox is crucial to using React and React Native effectively. The best places to learn it are w3schools CSS Flexbox and Basic concepts of flexbox by Mozilla.

Basic concepts of flexbox

The Flexible Box Module, usually referred to as flexbox, was designed as a one-dimensional layout model, and as a… (developer.mozilla.org)

There is a showcase of all possible Flexbox properties

The Full React Native Layout Cheat Sheet

A simple visual guide with live examples for all major React Native layout properties (medium.com)

Yoga has its own YogaKit published on CocoaPods, so you can learn it with native code in iOS

Yoga Tutorial: Using a Cross-Platform Layout Engine

Learn about Yoga, Facebook's cross-platform layout engine that helps developers write more layout code in style akin to… (www.raywenderlich.com)

And when we use flexbox, we should compose elements instead of hardcoding values. For example, we can use another View with justifyContent: 'flex-end' to move a button down the screen. This follows the flexbox style and prevents rigid code.

Position element at the bottom of the screen using Flexbox in React Native

React Native uses Yoga to achieve Flexbox style layout, which helps us set up layout in a declarative and easy way. (medium.com)

I hope you learn something useful in this post. For more information please consult the official guide Layout with Flexbox and layout-props for all the possible Flexbox properties.

Issue #270

As you know, in the Pragmatic Programmer, section Your Knowledge Portfolio, it is said that

Learn at least one new language every year. Different languages solve the same problems in different ways. By learning several different approaches, you can help broaden your thinking and avoid getting stuck in a rut. Additionally, learning many languages is far easier now, thanks to the wealth of freely available software on the Internet

I see learning programming languages as a chance to broaden my horizons and learn some new concepts. It also encourages good habits like immutability, composition, modularity, …

I'd like to review some of the features of all the languages I have played with. Some are useful, some just interest me or make me say "wow".

Each language can have its own style of grouping blocks of code, but I myself like the curly braces the most, which are cute :]

Some like C, Java, Swift, … use curly braces

Swift

    init(total: Double, taxPct: Double) {
        self.total = total
        self.taxPct = taxPct
        subtotal = total / (taxPct + 1)
    }

Some like Haskell, Python, … use indentation

Haskell

bmiTell :: (RealFloat a) => a -> String

bmiTell bmi

| bmi <= 18.5 = "You're underweight, you emo, you!"